Table of contents:

SECTION D – PART III - TECHNICAL CAPABILITIES AND PLAN

D15.1 Detailed description of the registry operator's technical capabilities.

Internet experience and database operations

Data protection and Intellectual Property rights

Access to system development tools

D15.2.1 General description of proposed facilities and systems

Deployment diagrams and system realization

In-house registrar service component

Physical diagrams and structures

General information about the hardware

Entry point for Accredited Registrars

External data-centers for DNS and WHOIS

Database description and structure

Location, connectivity and environment descriptions

D15.2.2 Registry-Registrar Protocol and interface

A new, Stateless Registry-Registrar Protocol

Attribute/value set definitions

Mapping from SRRP to the RRP as defined in RFC 2832

Mapping multiple RRP-commands on to one SRRP-command

Handling name server clusters through RRP

Handling unsupported RRP commands

Database software, hardware and performance

Domain transfers in the database

D15.2.5 Zone file distribution

Software diversification on DNS

D15.2.6 Billing and collection systems

D15.2.7 Data escrow and backup

Software and hardware security

Software and Hardware Encryption

Intrusion Detection System (IDS) and Intrusion Response Team (IRT)

Physical security of the facilities

D15.2.12. System outage prevention

D15.2.13 System recovery procedures

Fast recovery in case of single server failure

Recovery in case of full or partial data center destruction

D15.2.14. Technical and other support.

Table of Figures:

Figure 1: Use case top level diagram

Figure 2: Detailed view of "DomainHandling" use case

Figure 3: Activity diagram realizing the "InsertNewDomain" use case, activity view

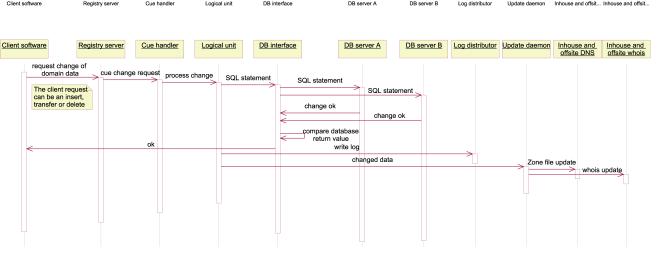

Figure 4: Sequence diagram realizing the "InsertNewDomain" use case, control view

Figure 5: Activity diagram realizing the "UpdateDomain" use case, activity view

Figure 6: Sequence diagram realizing the "UpdateDomain" use case, control view

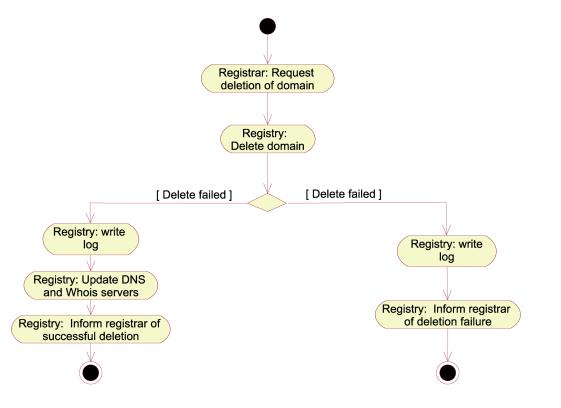

Figure 7: Activity diagram realizing the "DeleteDomain" use case, activity view

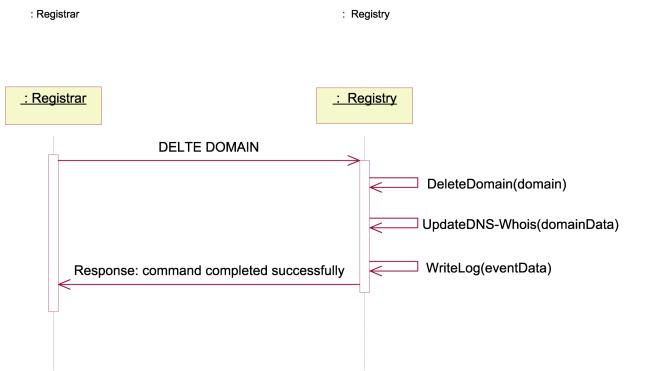

Figure 8: Sequence diagram realizing the "DeleteDomain" use case, control view

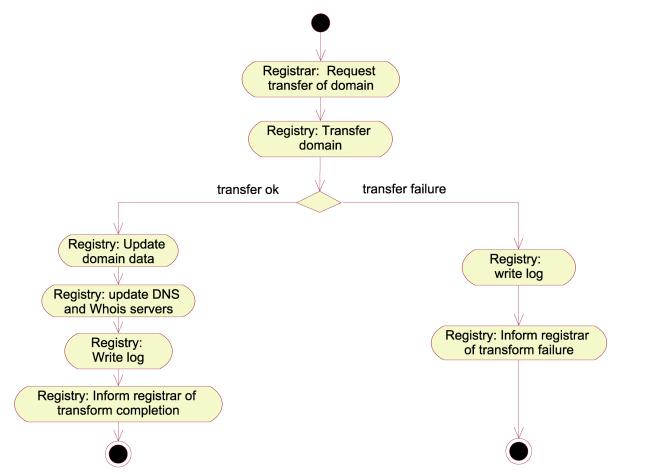

Figure 9: Activity diagram realizing the "TransferDomain" use case, activity view

Figure 10: Sequence diagram realizing the "TransferDomain" use case, control view

Figure 11: Detailed view of "ApplyForDomain" use case (from figure 1 - main use case diagram)

Figure 12: Sequence diagram realizing the "ApplyForDomain" use case, control view

Figure 13: Detailed view of "RegistrarAccountAdmin" use case (from figure 1 - main use case diagram)

Figure 14: Package diagram of the system components

Figure 15: Registrar client component

Figure 16: Deployment diagram of the Registrar client component

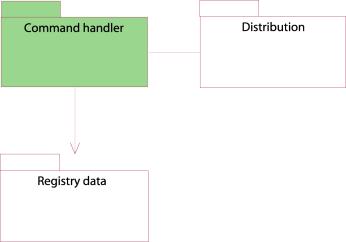

Figure 17: Command handler component

Figure 18: Deployment diagram of the command handler component

Figure 19: Distribution component

Figure 20: Deployment diagram of the distribution component

Figure 21: Registry data component

Figure 22: Deployment diagram of the Registry data component

Figure 24: Deployment diagram of the billing component

Figure 26: Deployment diagram of the Registry data component.

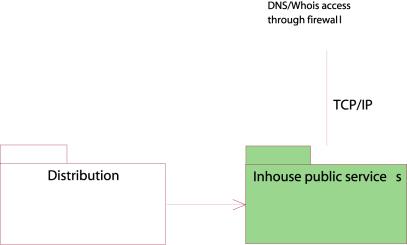

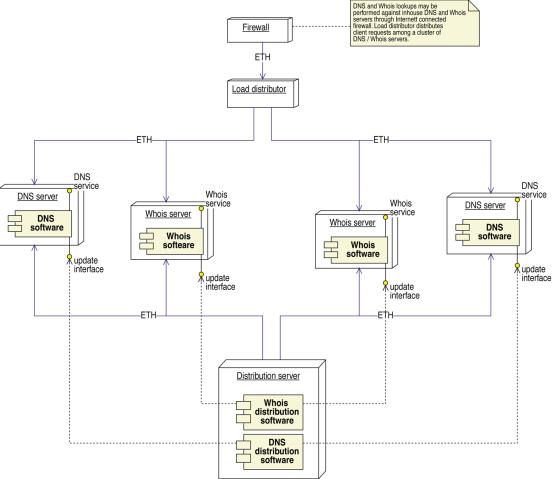

Figure 27: In-house public services

Figure 28: Deployment diagram of the in-house public services component

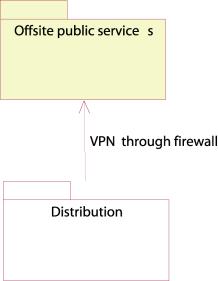

Figure 29: Offsite public services

Figure 30: Deployment diagram of the offsite public services component

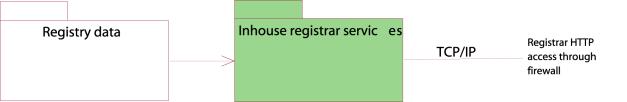

Figure 31: In-house registrar service component

Figure 32: Deployment diagram of the in-house Registrar service component

Figure 33: Hardware deployment in the main data centre

Figure 34: Software high level structure

Figure 35: Database ER diagram

Figure 50: The ER diagram of the database

Figure 53: Logical map of the distribution of the zone files (and WHOIS)

Figure 54: The storage facility location of Sourcefile

Figure 55: Usage of the WHOIS system

Figure 56: Distribution of the WHOIS data

D15.1 Detailed description of the registry operator's technical capabilities.

This should provide a detailed description of the Registry Operator's technical capabilities, including information about key technical personnel (qualifications and experience), size of technical workforce, and access to systems development tools. It should also describe the Registry Operator's significant past achievements. This description offers the Registry Operator an opportunity to demonstrate the extent of its technical expertise in activities relevant to the operation of the proposed Registry.

The Global Name Registry (GNR) was established by Nameplanet.com for the purpose of applying for and operating a personal delegated TLD. It has been established as a completely separate entity to eliminate any possibility of a conflict of interest. As a consequence, GNR has no current ongoing operations. However, Nameplanet.com has extensive experience in areas relevant to the Registry, and a number of key personnel will therefore be transferred to GNR. The following pages detail the experience that these GNR resources will bring from Nameplanet.com.

Experience to be transferred:

· Has successfully acquired 700,000 users after 8 months of operations, all of whom use a personal domain name for a webmail and web page solution fully developed and operated in-house.

· Currently operates an IBM 2.3 Terabyte ESS backend system and a high-availability, active-active failover front-end system.

· Encrypts private information in real time.

· The current system handles more than 10,000 registrations per day, with thousands of simultaneous users.

· Extensive experience with backup, which is taken daily and transported offsite.

· Extensive experience with running DNS, since the company handles the full DNS service for the personal domains of all 700,000 users.

· Has entered into a strategic technical partnership with IBM and minimized variations in the equipment used.

· Has ensured 99.8% uptime since the service was launched on February 1, 2000.

DNS experience

Nameplanet.com's core proposition to end users is the free use of a domain name that corresponds to the user’s personal name, for email and web page purposes. To offer this service, Nameplanet.com has compiled extensive statistics on the most common last names in each of the countries in which the service is launched. A large number of domain names identical to the most common last names have then been purchased on different TLDs, to be shared among different users who each use them for free. As a result, domain names are shared among people with an equal interest in them; e.g. Ken Williams and Sven Williams can both use the domain name williams.TLD for their personal purposes, instead of it being held by one user only.

To keep track of all domain names covering the last names of roughly 210 million people in the US alone, as well as the DNS functionality of hundreds of thousands of domain names, Nameplanet.com has developed a custom database for administering the DNS servers, renewals, MX records etc. for the large number of domain names used by its more than 700,000 users, a user base currently growing at 5% a week. This database gives Nameplanet.com confidence that every necessary action is taken to ensure stable operation of the domain names that end users rely on. Considerable effort has gone into ensuring that all DNS updates, maintenance and transfers of data to DNS servers are done securely and without loss of functionality of the vital DNS servers.

Through its operations in the DNS space, Nameplanet.com has accumulated knowledge and contacts within the arena, both through commercial relationships with several Registrars and ccTLD managers and through its dealings with the DNSO and ICANN. Participation in ICANN Board meetings has given insight into the policies and operations of the DNS community, as well as valuable experience.

Internet experience and database operations

Nameplanet.com users register online and are immediately assigned an address of the form firstname@lastname.com and a web page at www.firstname.lastname.com, or under other TLDs where the .com version has not been available. Nameplanet.com has developed, fully in-house, a custom object-oriented database for the webmail users, and has ensured 99.8% uptime since launch in February 2000. This custom database currently serves thousands of simultaneous users, and has handled tens of thousands of email account and web page account registrations per day. The high-performance web servers and storage solutions scale to millions of users and are handling increasing data volumes.

Data protection and Intellectual Property rights

As the operator of a webmail service for 700,000 people, Nameplanet.com has taken strong precautions to handle users' private data properly, both technically and legally, in terms of storage, encryption, backup, protection and Intellectual Property rights in various jurisdictions.

Nameplanet.com has strong expertise in Intellectual Property rights with regard to domain names, as it has thoroughly investigated the risks of its business of sharing a domain name between people with the same last name. This expertise also extends to the UDRP and to the national Intellectual Property law of the US and major European countries.

Access to system development tools

The technical team has access to the system development tools needed for implementing high-end web-based applications. Most of the systems at Nameplanet.com are written in object-oriented C++ in a way that makes them highly portable across platforms.

The code is close to being POSIX.1 compliant, and should compile with no or small modifications on any POSIX.1 supporting platform with an ANSI C++ compiler. Only minor modifications would be required in a few low level modules to support non-POSIX.1 compliant systems, provided an ANSI C++ compliant compiler is available. The GNU/FSF tools automake and autoconf are used to provide solid, well tested configuration management for multiple platforms.

As backend database solutions, Nameplanet.com uses Oracle for systems requiring a high volume of updates, CDB for systems requiring fast hash lookups, and an object-oriented database built in-house for storing XML fragments distributed in (possibly heterogeneous) clusters.

Nameplanet.com has spent considerable resources on building a web framework that can scale through distributed operation, caching and mirrors of critical content. The systems are well tested as part of Nameplanet.com’s current webmail service, and automated test suites for the core functionality are under development.

D15.2.1 General description of proposed facilities and systems


Address all locations of systems. Provide diagrams of all of the systems operating at each location. Address the specific types of systems being used, their capacity, and their interoperability, general availability, and level of security. Describe in detail buildings, hardware, software systems, environmental equipment, Internet connectivity, etc.

This chapter presents the systems and technical facilities that constitute the Registry, from use-case modelling and sequence diagrams to deployment diagrams and hardware structure. The chapter is meant to be comprehensive, although some parts of the system are described in more detail in subsequent chapters.

High-level system description

Figure 1: Use case top level diagram

The core functionality of the system is centred around the Registry. The Registry is responsible for administrating one or more top level domains (e.g. .name). All main use cases are in some way involved in the handling of these top-level domains. In the following we will give a brief description of all the main actors and use cases identified in figure 1.

Actors:

· Registry: This is the organization responsible for administrating one or more top-level domains. By organization we mean the technical as well as the organizational infrastructure built to support this business.

· RegistrarClient: A RegistrarClient is an organization acting as a domain buyer on behalf of a Client. The RegistrarClient is responsible for the technical maintenance of domains bought from a Registry. Only RegistrarClients may buy, edit or delete domains from a Registry.

· Client: The Client is the actual buyer of a domain. The Client has no direct contact with the Registry. All actions concerning domains are directed towards a RegistrarClient.

· Accountant: The Accountant is responsible for the financial aspects of domain trading. This involves billing the RegistrarClients for the domains bought, validating their credibility, adding credit upon request and additional payments, and interacting in other financial matters with the RegistrarClients.

· ICANN: The Internet Corporation for Assigned Names and Numbers (ICANN) is a non-profit, private-sector corporation. ICANN is dedicated to preserving the operational stability of the Internet. ICANN coordinates the stable operation of the Internet's root server system.

· WIPO: The World Intellectual Property Organisation will be involved in arbitration between conflicting domain name Registrants under the UDRP.

· ExternalActor: By ExternalActor we mean organizations, other than ICANN or WIPO, that should receive reports from the Registry.

· SecurityFirm: The SecurityFirm is responsible for transporting and handling backup tapes that are to be transported off-site.

· EscrowStorage: Escrow is a holding of critical data by a trusted third party, the EscrowStorage firm. The data is held to be transferred to an entity external to the Registry in case of special events. The EscrowStorage firm is responsible for storing and protecting the escrowed data and will release the data to the relevant party upon triggering of the predetermined conditions.

· DNS-system: The DNS-system is the authoritative name server for the top-level domain(s) administrated by the Registry.

· WhoisSystem: A service providing information on the registered domains handled by the Registry.

Use cases

DomainHandling

This use case is an abstraction of all operations resulting from interaction between the Registry and a RegistrarClient concerning a domain. DomainHandling is further refined in the following use case diagram:

Figure 2: Detailed view of "DomainHandling" use case

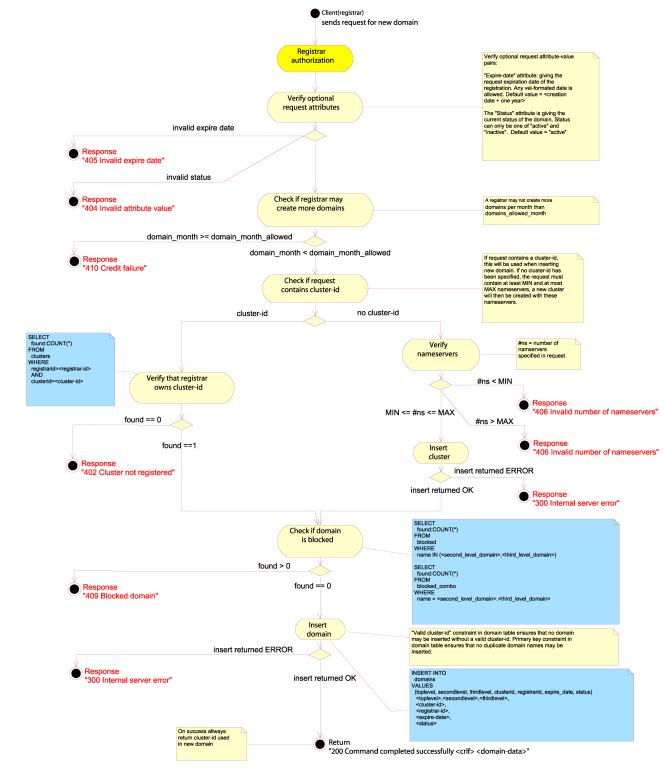

InsertNewDomain

This use case describes the process of domain registration. It involves a RegistrarClient who seeks to buy the domain in question from the Registry that is handling that domain. The typical activities involved in this process are visualized in figure 3. Figure 4 gives a high-level description of the control-flow when registering new domains.

Figure 3: Activity diagram realizing the "InsertNewDomain" use case, activity view

Figure 4: Sequence diagram realizing the "InsertNewDomain" use case, control view

The RegistrarClient will be charged an annual amount per domain. If a RegistrarClient does not pay this domain fee, the domain is deleted and may be sold to other RegistrarClients. In this case, the domain is not covered by any additional safeguards against being deleted too early.

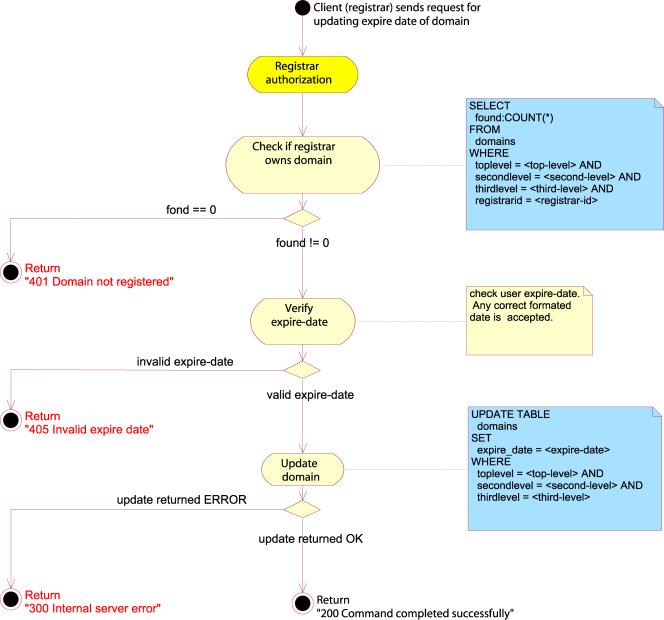

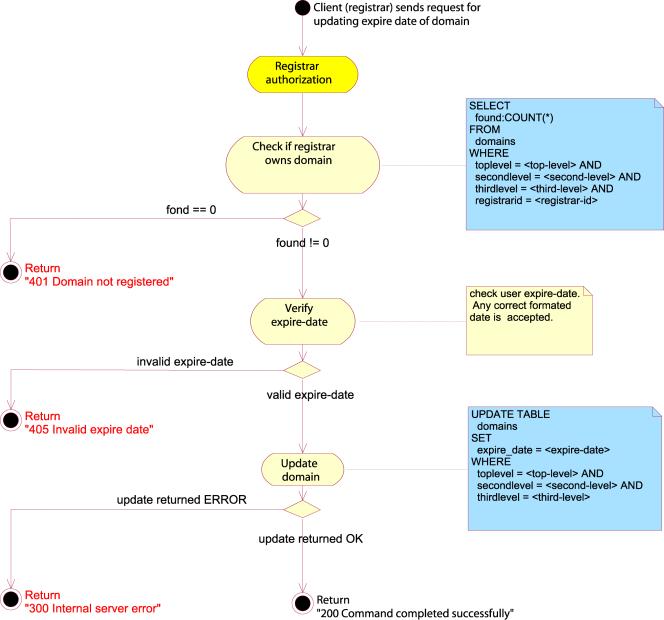

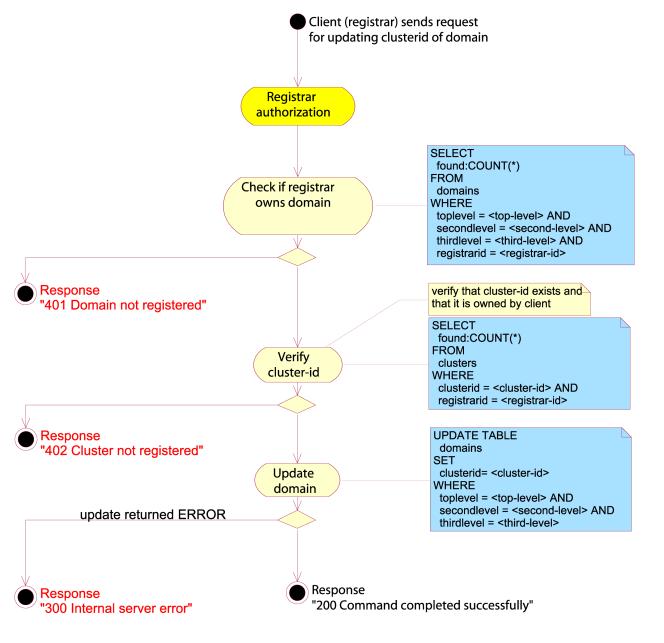

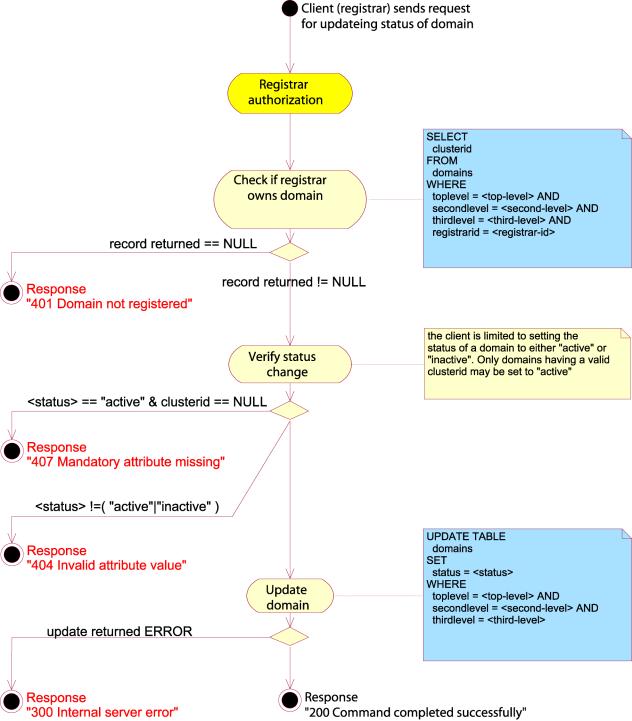

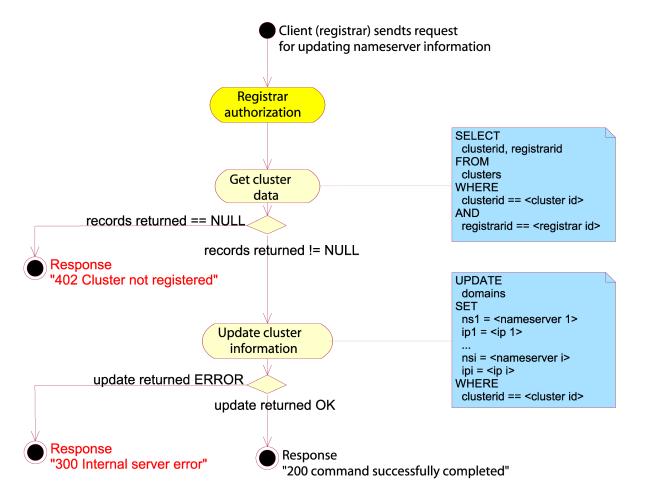

UpdateDomainData

A Registrar may change information about a domain, such as status, expiration date or name servers handling that domain. Only RegistrarClients may update any domain information, and they may only access and update domains they already own. The typical activities involved in this process are visualized in figure 5. Figure 6 gives a high-level description of the control-flow when updating domains.

Figure 5: Activity diagram realizing the "UpdateDomain" use case, activity view

Figure 6: Sequence diagram realizing the "UpdateDomain" use case, control view

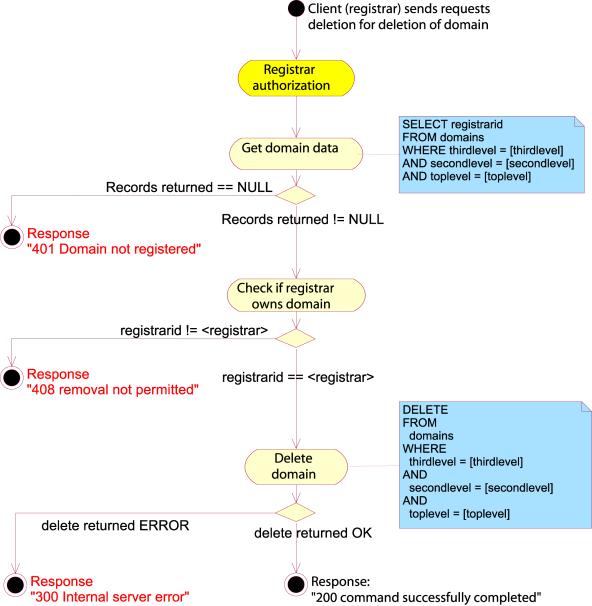

DeleteDomain

A Registrar may delete a domain if it owns the domain. The typical activities involved in this process are visualized in figure 7. Figure 8 gives a high-level description of the control-flow when deleting domains.

Figure 7: Activity diagram realizing the "DeleteDomain" use case, activity view

Figure 8: Sequence diagram realizing the "DeleteDomain" use case, control view

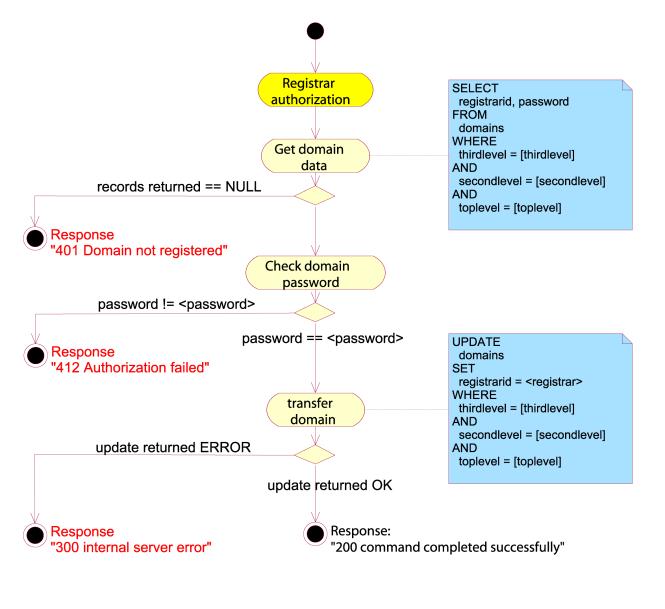

TransferDomain

A domain may be transferred between two RegistrarClients. This may only happen if the Client owning the domain gives the new RegistrarClient permission to take over the domain in question, or as the result of UDRP arbitration or other conflict handling/arbitration by the Registry. Safe transfers are ensured by assigning each domain a transfer password which only the Client owning the domain and the RegistrarClient administrating the domain know. When the Client wishes to move the domain to another RegistrarClient (B), he informs RegistrarClient (B) of his transfer password. The Client may request that the transfer password be sent to his contact details as registered in the WHOIS; the Registry will send out this information upon request. RegistrarClient (B) may then use this password to access the domain and issue a transfer request. The typical activities involved in this process are visualized in figure 9. Figure 10 gives a high-level description of the control-flow when transferring domains.
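The transfer-password check described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the Registry's implementation: it assumes the Registry stores only a salted PBKDF2 hash of each domain's transfer password (the text does not say how the password is stored), and the class `TransferAuth` and its methods are hypothetical names.

```python
import hashlib
import hmac
import os


class TransferAuth:
    """Hypothetical per-domain transfer-password store (salted PBKDF2 hashes).

    Assumption: only a salt and a derived key are kept per domain, never the
    clear-text password.
    """

    ITERATIONS = 100_000

    def __init__(self) -> None:
        # domain name -> (salt, derived key)
        self._records: dict[str, tuple[bytes, bytes]] = {}

    def _derive(self, password: str, salt: bytes) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, self.ITERATIONS)

    def set_password(self, domain: str, password: str) -> None:
        """Assign (or reassign) the transfer password of a domain."""
        salt = os.urandom(16)
        self._records[domain] = (salt, self._derive(password, salt))

    def authorize_transfer(self, domain: str, password: str) -> bool:
        """Return True if the password offered by RegistrarClient (B) matches."""
        record = self._records.get(domain)
        if record is None:
            return False
        salt, expected = record
        # Constant-time comparison avoids leaking how many bytes matched.
        return hmac.compare_digest(self._derive(password, salt), expected)
```

In use, the Registry would call `set_password("alexander.smith.name", ...)` when the domain is registered, and `authorize_transfer` when RegistrarClient (B) issues the transfer request with the password the Client gave it. Storing a hash rather than the password itself is a design choice of this sketch; note that it rules out re-sending the original password to the Client, so a scheme like this would have to issue a new one on request.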

Figure 9: Activity diagram realizing the "TransferDomain" use case, activity view

Figure 10: Sequence diagram realizing the "TransferDomain" use case, control view

AdminBlockedDomains

The Registry maintains a list of domain names not allowed for registration, according to the Registry policy and current rules. Registrars are not allowed to register domain names that are included in this list. Only the Registry may edit this list.

ApplyForDomain

This use case is an abstraction of the process a Client undertakes when applying for a domain. This process is further refined in the following use case diagram:

Figure 11: Detailed view of "ApplyForDomain" use case (from figure 1 - main use case diagram)

The Client (and RegistrarClient) may use the WHOIS service provided by the Registry to check whether a domain name is taken. The only way a Client may apply for a domain is through a RegistrarClient. The only situation in which a Client will be in direct contact with the Registry is a domain name dispute (see use case Complaints).

Figure 12: Sequence diagram realizing the "ApplyForDomain" use case, control view

Billing

The basic principle is that domain name registrations are prepaid, either in the form of a credit limit or an actual prepayment of a certain size. This can be combined with an insurance bond from the Registrar, like the USD 100,000 currently requested of ICANN Accredited Registrars.

The billing and collection systems need not be complicated since the Registry’s billing relations are with the Registrars alone.

Billing process for domain name registration:

· Request registration (Password acknowledged)

· Credit check in database

· Insert domain name

· Acknowledge registration

When a registration is completed, the Registrar’s account balance is debited with the price for one registration. Registrations continue as long as the balance is positive, or until a credit limit, if any, is exceeded.

Once a month, the Registrar is billed the amount necessary to restore its credit balance. The Registrar can choose this amount itself, and it can vary from month to month.
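The prepaid rules above (credit check, insert, debit, and a monthly top-up) can be sketched as a small account model. This is a hypothetical illustration, not the SAGE billing system or the Registry's database schema: `RegistrarAccount` and its methods are invented names, amounts are assumed to be integer cents, and a registration is assumed to be accepted only if the debit would not take the balance below the negative of the credit limit (zero when there is no credit limit).

```python
from dataclasses import dataclass, field


@dataclass
class RegistrarAccount:
    """Hypothetical prepaid Registrar account; all amounts in integer cents."""

    registrar_id: str
    balance: int                  # prepaid funds remaining
    credit_limit: int = 0         # how far the balance may go below zero
    domains: list[str] = field(default_factory=list)

    def can_afford(self, price: int) -> bool:
        # Credit check: the debit must not push the balance past the credit limit.
        return self.balance - price >= -self.credit_limit

    def register(self, domain: str, price: int) -> bool:
        """Credit check, insert, then debit; returns False if refused."""
        if not self.can_afford(price):
            return False                  # refused, nothing is debited
        self.domains.append(domain)       # stands in for "insert domain name"
        self.balance -= price             # debit the price of one registration
        return True

    def top_up(self, amount: int) -> None:
        # Monthly invoice paid: the Registrar restores its balance by an amount it chooses.
        self.balance += amount
```

Keeping amounts in integer cents is a choice of the sketch that avoids floating-point rounding in account balances.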

Billing at the end of the month:

· The Accountant generates a list of all Registrars, with the number of domain names registered during the corresponding month. For each Registrar, a list of every domain registered in this billing period will be included.

· The data goes into the billing system supplied by SAGE, and invoices are generated, printed out and sent by email.
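The first month-end step, listing each Registrar with its number of registrations and the domains registered in the period, amounts to a group-by over the month's registrations. The sketch below is hypothetical: the `(registrar_id, domain, registered_on)` record layout and the function name `monthly_billing_list` are assumptions, and the output would still have to be fed into the SAGE billing system.

```python
from collections import defaultdict
from datetime import date


def monthly_billing_list(registrations, year, month):
    """Group one billing period's registrations by Registrar.

    `registrations` is an iterable of (registrar_id, domain, registered_on)
    tuples, where registered_on is a datetime.date; this record layout is
    an assumption for illustration. Returns a dict mapping each registrar_id
    to (number of domains, sorted list of domains) for the given month.
    """
    per_registrar = defaultdict(list)
    for registrar_id, domain, registered_on in registrations:
        if (registered_on.year, registered_on.month) == (year, month):
            per_registrar[registrar_id].append(domain)
    return {
        registrar_id: (len(domains), sorted(domains))
        for registrar_id, domains in sorted(per_registrar.items())
    }


if __name__ == "__main__":
    sample = [
        ("R1", "alexander.smith.name", date(2001, 2, 3)),
        ("R2", "ken.williams.name", date(2001, 2, 10)),
        ("R1", "anna.smith.name", date(2001, 3, 1)),  # falls in the next period
    ]
    for registrar_id, (count, domains) in monthly_billing_list(sample, 2001, 2).items():
        print(registrar_id, count, domains)
```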

The Registrar can access the billing information and account status through the secure WWW interface, and request the information needed. The support team, key account manager and accountants are also available as telephone support for this purpose, should extra information be needed.

RegistrarAccountAdmin

This use case involves all administration of the individual RegistrarClient accounts. Standard operations as shown in figure 13 are supported.

Figure 13: Detailed view of "RegistrarAccountAdmin" use case (from figure 1 - main use case diagram)

Only ICANN accredited RegistrarClients may be given an account. The basic policy of the Registry will be to foster the expansion of the personal domain market through a network of ICANN accredited Registrars. All existing ICANN accredited Registrars will be invited to also become .NAME Registrars. There will be no restriction on the number of Registrars.

Registrars will have an ongoing relationship with the Registry, mostly for billing and credit purposes. Credit for registrations can be updated at any time.

Reporting

It can be anticipated that the Registry will have a reporting responsibility to entities other than ICANN. These relationships will develop over time.

Complaints

Complaints may arise from a denial of registration, as would be the case when a Registrant tries to register a prohibited string. Complaints may also arise from disputes between Registrants.

The Registry will not perform arbitration of disputes. UDRP disputes and other arbitration between Registrants in general will be handled by a third party, e.g. WIPO.

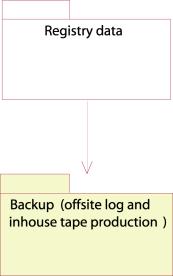

Backup

Backups are taken periodically and transported offsite by a security company.

Escrow

Approximately once a month, a copy of the whole database handling domains and RegistrarClients will be sent to a secure offsite storage facility. The data will be sent over the Internet, encrypted with the asymmetric RSA encryption algorithm. The keys will be changed at regular intervals. Only ICANN and the Registry may access these copies.

DNSUpdate

All DNS servers will be continuously updated from the update server, which runs a stealth primary DNS server dedicated to updating our DNS servers through the AXFR and/or IXFR mechanisms of DNS. We aim to use BIND9, developed by ISC, which supports the DNS extensions needed for Incremental Zone Transfers (IXFR). The stealth primary will not be visible to the rest of the world. It will be connected to our external DNS servers through hardware-encrypted VPN cards, and to the internal DNS servers through the internal network, to ensure that the data arrives at the intended destination without tampering or sniffing on the way. TSIG will also be employed to verify that the data transmitted comes from the correct source and arrives at the correct destination.
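The advantage of IXFR over AXFR is that only the difference between two zone versions is sent. The Python fragment below is a conceptual sketch of that difference, not how BIND9 works internally (BIND9 computes and serves IXFR deltas itself): zones are assumed to be held as sets of record strings, and the names `ZoneDelta` and `zone_diff` are hypothetical. The new SOA serial is incremented using the serial-number arithmetic of RFC 1982 (addition modulo 2^32).

```python
from typing import NamedTuple


class ZoneDelta(NamedTuple):
    """Records removed and added when moving from old_serial to new_serial."""

    old_serial: int
    new_serial: int
    deleted: list[str]
    added: list[str]


def zone_diff(old_serial, old_records, new_records):
    """Return the incremental change between two versions of a zone.

    Records are plain strings such as
    "alexander.smith.name. NS ns1.dnshost.com." (an assumed representation).
    The new serial is old_serial + 1 modulo 2**32, as in RFC 1982.
    """
    old, new = set(old_records), set(new_records)
    return ZoneDelta(
        old_serial=old_serial,
        new_serial=(old_serial + 1) % 2**32,
        deleted=sorted(old - new),
        added=sorted(new - old),
    )
```

An IXFR response carries deltas of this kind (the deleted records followed by the added ones for each serial change), which is why it is much smaller than a full AXFR of the zone when only a few domains have changed.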

Whoisupdate

All the internal and offsite WHOIS servers will run an update server application. On the servers located in the main data center, this application will only be accessible from the internal network (through separate network cards); on the external WHOIS servers, it will only be accessible through the hardware-encrypted VPN cards. The application will nonetheless be made as simple and secure as possible. The chroot() system call will be used to confine the application to a single directory, so that an intruder who compromises it cannot reach the system configuration. The server application will run as a user with low permissions.

To update information on the WHOIS server, client software on the update server will connect to the update server application on the WHOIS server. A typical transfer will look like this:

set alexander.smith.name\n

Domain Name: alexander.smith.name\n

Registrar: The Internet Registrar Company Inc.\n

Registrar Whois: whois.intreg.com\n

Registrar URL: www.intreg.com\n

Nameserver: ns1.dnshost.com\n

Nameserver: ns2.dnshost.com\n

Modified: 2001-02-17\n

.\n

The update server application on the WHOIS server will read this information, and when it receives a single line consisting of a period only, it will return a confirmation to the client and immediately disconnect.

The first line received will specify what to do and the name of the domain. Two (2) commands will be allowed, “set” and “delete”. If the command is “set”, the update server application on the WHOIS server will read the complete information on the next lines. With the directory hierarchy structure proposed above, the application will know where to place the file containing the information. If the command is “delete”, the WHOIS information for this domain will be deleted.
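The receiving side of this exchange can be sketched as a small parser. It is a hypothetical illustration: `parse_update` and `UpdateError` are invented names, input lines are assumed to arrive without their trailing newlines, and the text above does not say whether a "delete" message is also terminated by a single period, so the sketch assumes it is.

```python
class UpdateError(ValueError):
    """Raised for a malformed WHOIS update message."""


def parse_update(lines):
    """Parse one update message into (command, domain, body_lines).

    Only the two commands described in the text, "set" and "delete", are
    accepted. Assumption: a "delete" message is also terminated by ".".
    """
    it = iter(lines)
    try:
        command, domain = next(it).split()
    except (StopIteration, ValueError):
        raise UpdateError("first line must be '<command> <domain>'") from None
    if command not in ("set", "delete"):
        raise UpdateError(f"unknown command: {command}")
    body = []
    for line in it:
        if line == ".":
            if command == "delete" and body:
                raise UpdateError("delete takes no data lines")
            # DNS names are case-insensitive, so the domain is normalized.
            return command, domain.lower(), body
        body.append(line)
    raise UpdateError("connection closed before the terminating '.'")
```

On success the server would send its confirmation and disconnect; on an `UpdateError` it would discard the message, so a truncated transfer never reaches the WHOIS data.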

While the information is being received, it is written to a temporary file on the same filesystem as the resulting file will be placed on. When the transfer is completed, this temporary file will be renamed to the correct name, in this case name/smith/alexander. This will ensure that partial files will never be sent if someone queries a domain while it is being updated, regardless of whether it is the first time the WHOIS information exists for this domain or whether the information is being updated.
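The write-then-rename technique can be sketched in Python as below. It is a minimal sketch, assuming the directory layout implied by the example (labels reversed, so alexander.smith.name is stored at name/smith/alexander) and assuming registrations are third-level names as in that example; `whois_path`, `store_whois` and `delete_whois` are hypothetical names. `os.replace` performs a rename, which on POSIX systems atomically replaces the target when both paths are on the same filesystem.

```python
import os
import tempfile


def whois_path(root, domain):
    """Map a domain to its file: alexander.smith.name -> root/name/smith/alexander."""
    labels = domain.lower().rstrip(".").split(".")
    return os.path.join(root, *reversed(labels))


def store_whois(root, domain, body_lines):
    """Write WHOIS data so that a query never sees a partially written file."""
    path = whois_path(root, domain)
    directory = os.path.dirname(path)
    os.makedirs(directory, exist_ok=True)
    # The temporary file is created in the target directory, i.e. on the same
    # filesystem, so the final rename is atomic.
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".tmp-")
    try:
        with os.fdopen(fd, "w") as tmp:
            tmp.write("\n".join(body_lines) + "\n")
            tmp.flush()
            os.fsync(tmp.fileno())
        # mkstemp creates the file as 0600; make it readable by the WHOIS
        # daemon, which is assumed to run as a different user.
        os.chmod(tmp_path, 0o644)
        os.replace(tmp_path, path)  # swap in the complete file in one step
    except BaseException:
        os.unlink(tmp_path)
        raise
    return path


def delete_whois(root, domain):
    """Handle the "delete" command; a missing file is not an error."""
    try:
        os.remove(whois_path(root, domain))
    except FileNotFoundError:
        pass
```

Because a DNS label cannot start with a period, the `.tmp-` prefix cannot collide with the file of a real domain.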

This continuous update scheme keeps the WHOIS data as up to date as possible and prevents substantial queues of updates from building up on the update machine. Updates therefore remain controlled even during high-traffic periods, including the sunrise period, and there will be no massive volume of updates waiting at any time.

The only exception to this would be if the update machine goes down, in which case it would rapidly be replaced with a spare machine in stock, and booted from the ESS, thus getting access to the same data as it had at the time it went down.

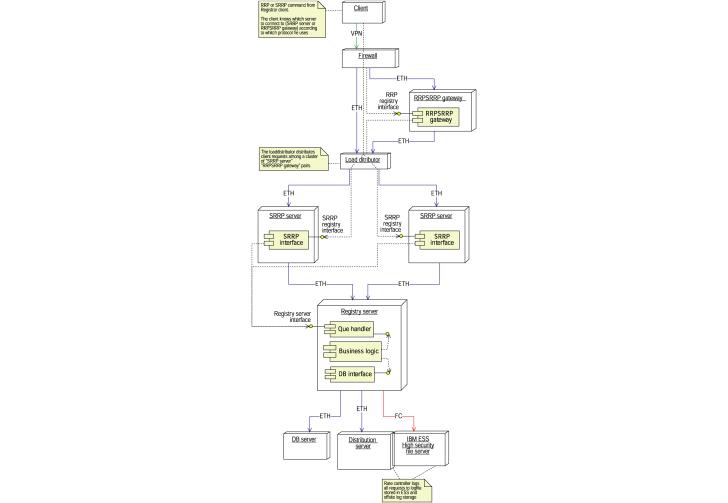

Deployment diagrams and system realization

To give a clear understanding of how the system is to be deployed, we give here a short explanation of the main aspects of the hardware structure. Each deployment diagram refers to a package in the package diagram. The diagrams consist of three main elements: nodes, processes and interfaces.

Figure 14: Package diagram of the system components

Registrar client component

Figure 15: Registrar client component

Figure 16: Deployment diagram of the Registrar client component

The Registrar software communicates via VPN through a firewall. Depending on which protocol the Registrar wishes to use, it connects to either the RRP or the SRRP interface. The client software will be open source, so Registrars may create their own software if they need to support special requirements.

Security

The Registrars and the Registry communicate with hardware encrypted VPN cards, to prevent eavesdropping and tampering by third parties. Each command from the Registrars has to contain the Registrar’s password, which is verified by the Registry before any command is executed.

Recovery

In the event that an SRRP server has a system outage, the others in the high-availability active/active configuration can take over from it. If an RRP-SRRP gateway has a system outage, it can be replaced quickly. There can be several RRP-SRRP gateways, which will share the same name in the DNS and will be connected to in a round-robin fashion. These gateways will have standard configurations and can be easily replaced.

Scalability:

The high availability active/active system configuration allows for quick and easy expansion of the number of SRRP servers that handle requests, transparently to the Registrars. RRP-SRRP gateways can be added by adding their IP address to the DNS name, also transparently to the Registrars.

Command handler component

Figure 17: Command handler component

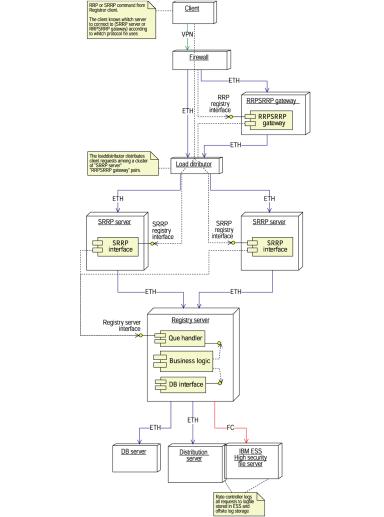

Figure 18: Deployment diagram of the command handler component

The command handler component consists of three elements:

· RRP-SRRP gateway

· SRRP server

· Registry server

The Registry server is further divided into the following components:

· Queue handler

· Business logic

· Database interface

The SRRP server receives and queues connections from the Registrar clients. The connections will be held until the command has been processed. If the client uses RRP, it must connect to the RRP-SRRP gateway. The SRRP server will be able to buffer client connections to handle traffic peaks. The capacity of the front end of the system can easily be enlarged to handle increased load.

The Registry server is responsible for serving the client requests. Internally it is divided into three components:

· The Queue handler will queue commands issued by the SRRP server by Registrar ID, with one queue for each Registrar. The queues will be served in a round-robin fashion. The Queue handler will not send more commands to the business logic than the business logic can handle.

· The business logic’s responsibility is to translate the commands into database statements, and to perform other necessary operations before the SQL commands are sent to the database interface.

· The database interface provides an interface towards the business logic that hides the complexity of the database calls. The business logic therefore does not see how many databases there are, only whether the transaction completed or not. This process runs a double answer protocol: each transaction is run on both databases, and the database interface checks that both databases give the same answer. If the databases respond differently, an error has been discovered and the system stops registering database changes.
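The Queue handler's behaviour (one queue per Registrar, round-robin service, and a cap on the work handed to the business logic) can be sketched as follows. This is a hypothetical illustration: `RegistrarQueueHandler` and its methods are invented names, and the limit on what "the business logic can handle" is modelled as a fixed maximum number of commands in flight.

```python
from collections import deque


class RegistrarQueueHandler:
    """Hypothetical Queue handler: one FIFO queue per Registrar, served
    round robin, with at most max_in_flight commands at the business logic."""

    def __init__(self, max_in_flight):
        self.max_in_flight = max_in_flight
        self.in_flight = 0
        self.queues = {}          # registrar_id -> deque of pending commands
        self.rotation = deque()   # registrars with pending commands, in service order

    def submit(self, registrar_id, command):
        queue = self.queues.setdefault(registrar_id, deque())
        if not queue:
            self.rotation.append(registrar_id)  # registrar joins the rotation
        queue.append(command)

    def next_command(self):
        """Return (registrar_id, command) to dispatch, or None if nothing may be sent."""
        if self.in_flight >= self.max_in_flight or not self.rotation:
            return None
        registrar_id = self.rotation.popleft()
        queue = self.queues[registrar_id]
        command = queue.popleft()
        if queue:
            self.rotation.append(registrar_id)  # back of the line for its next turn
        self.in_flight += 1
        return registrar_id, command

    def completed(self):
        """Called when the business logic has finished one command."""
        self.in_flight -= 1
```

Serving Registrars in turn, rather than in arrival order, keeps one Registrar submitting a large batch (for example during the sunrise period) from delaying every other Registrar's commands.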

Security

The firewall prevents hacking into and tampering with data in the system, and changing of system configurations. The VPN cards prevent eavesdropping and tampering with data between the client and the firewall.

Recovery

The SRRP servers are easily replaced with new computers with the same configuration in case of a fatal error. The same applies to the RRP-SRRP gateways.

Scalability

The high availability active/active system configuration allows for quick and easy expansion of the number of SRRP servers that handle requests, transparently to the Registrars. RRP-SRRP gateways can be added by adding their IP address to the DNS name, also transparently to the Registrars.

Another Registry server can be added to the system, with each Registry server connected to half of the SRRP servers. More Registry servers can be added in the same way. The databases can be scaled by using parallel systems.

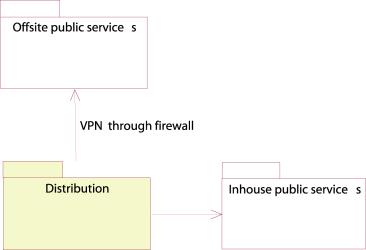

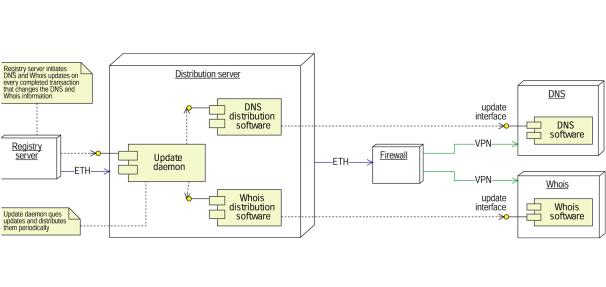

Distribution component

Figure 19: Distribution component

Figure 20: Deployment diagram of the distribution component

The distribution component is responsible for distributing the changes that have taken place to the local as well as the offsite DNS and WHOIS services. The command handler communicates the database changes that have been successfully completed to the distribution component, where the update daemon receives them. The daemon processes the data and produces update messages for the WHOIS servers and the zone file, so the changes are reflected in the WHOIS and DNS servers immediately. The distribution server will be set up as a stealth primary server to accomplish the DNS updates.

The offsite WHOIS/DNS servers are connected to the system through VPN channels to keep the transmission of WHOIS and zone file updates secure.

Security

Security is assured by the use of a firewall and dedicated VPN cards.

Hardware failure strategy

Since the distribution server is booted from the ESS, it is easy to replace the distribution server. Backup hardware will always be maintained to take over in case of fatal hardware errors on the distribution server.

Scalability

The distribution server can be split into separate WHOIS and DNS update machines, and in addition, can be split into machines dedicated for updating groups of WHOIS and DNS servers.

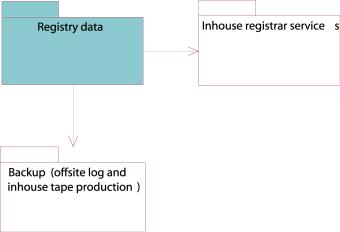

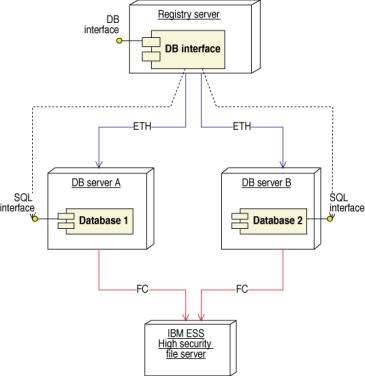

Registry data

Figure 21: Registry data component

Figure 22: Deployment diagram of the Registry data component

The command handler’s database interface communicates with the two mirrored databases through their SQL interfaces. Each database is connected to the ESS, which provides secure data storage. The two databases are stored on separate disks in the ESS, which is also internally mirrored.

The Registry data is periodically sent to the escrow agent, which will hold it in escrow according to the agreement.

Security

This is purely an in-house system, and will not be accessible to others.

Recovery

Hardware failure strategy:

If one of the two databases stops, the remaining database will still serve read requests from the in-house public services and the billing system, but no changes can be made to the data until both databases are running again. If both databases break down completely, the backup tapes or the offsite log will be used to recreate the database. Extra hardware will always be ready to replace a broken unit.

Scalability:

Parallel versions of the databases can be installed. The ESS is scalable. The Registry server is scalable, as explained above.

Billing component

Figure 23: Billing component

Figure 24: Deployment diagram of the billing component

The billing component is a simple database system that reads and edits Registrar data from the tables. The only access this database has to the system is to read and edit information relevant to the billing task.

Security:

This is purely an in-house system, and will not be accessible to others.

Recovery:

Hardware failure strategy:

Since the database can easily be installed on another computer, it is easy to replace the node on which the billing system resides. Since the data are stored on the ESS, the entire database system has to break down before the billing system will be affected.

Scalability:

Billing is a manual job run once a month and needs no scaling capacity beyond that of the database, described above.

Backup component

Figure 25: Backup component

Figure 26: Deployment diagram of the backup component


The backup component consists of both offsite log writing and backup tape producing. The log will be written through the distribution server to an offsite log storage. The log messages are encrypted and transmitted through a VPN channel from the distribution server to the log storage.

The backups are controlled from the backup controller, where a daemon manages the periodic production of backup tapes.

Security

The backup is protected by a firewall and VPN, which prevent hacking and keep the data from being compromised.

Recovery

Hardware failure strategy

New tapes can easily be acquired, and a replacement backup robot will be provided under the service level agreement with the supplier.

Scalability

Additional backup solutions can be added in case of high volume.

In-house public services

Figure 27: In-house Public services

Figure 28:Deployment diagram of the in-house public services component

The in-house public services offer the outside world a DNS and WHOIS service. The service consists of a high-availability active/active configuration, which can easily be expanded. The load distributor spreads the load onto the different DNS/WHOIS servers.

The distribution server transmits new zone files and WHOIS information as soon as it receives a change from the command handler. It also holds complete copies of the WHOIS data and the zone file, so that the associated DNS and WHOIS servers can be fully restored from the distribution server.

Security

The firewall secures the in-house public services from hacking.

Recovery

Hardware failure strategy:

The high availability active/active configuration makes it easy to maintain the service in a hardware failure situation. The distribution server can be easily replaced in case of a hardware failure, allowing for updates again.

Scalability

The high availability active/active configuration allows for easy scalability by adding more components.

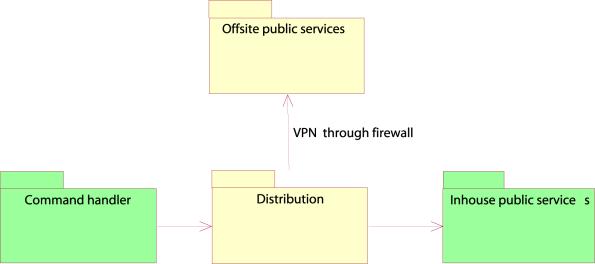

Offsite public services

Figure 29: Offsite public services

Figure 30: Deployment diagram of the offsite public services component


The distribution server also passes changes immediately to the offsite DNS/WHOIS servers through a VPN channel.

Security

Security is assured with the VPN cards and the firewall, so tampering and eavesdropping do not occur.

Recovery

Hardware failure strategy:

Since there are multiple offsite DNS and WHOIS servers, these services remain available as long as at least one of them is running.

Scalability

More DNS and WHOIS servers can be installed at different locations in case of high load.

In-house registrar service component

Figure 31: In-house registrar service component

Figure 32: Deployment diagram of the in-house Registrar service component

This is the Registrars’ web interface to the system. The load distributor is equipped with a high-availability active/active system, which detects if one of the web servers is down and redirects the requests to the operating web server(s). Each web server is connected to one of the databases, but switches its connection to the other database if that database goes down. Since the system is configured in an active/active fashion, capacity may be expanded by adding new nodes.

Security

Security is assured with the VPN cards and the firewall, so tampering and eavesdropping do not occur.

Recovery

Hardware failure strategy

The high availability active/active configuration makes it easy to maintain the service in a hardware failure situation.

Scalability:

The high availability active/active configuration allows for easy scalability by adding more components.

Physical diagrams and structures

Hardware

The following diagram describes the physical structure of the main data center:

Figure 33: Hardware deployment in the main data centre

The Registry has three connections to the Internet, which serve the following purposes:

1) Secure entry point for Accredited Registrars to register domain names and other operations in the database

2) Interface to the Internet for DNS servers, WHOIS servers and WWW servers

3) Connection to external data centers where secondary DNS servers, supplementary WHOIS servers and log machines are hosted

General information about the hardware

Most of the servers in the main data center are diskless and have their disks as partitions on the Enterprise Storage System (ESS). The ESS is an internally mirrored, RAID-controlled system that allows all machines to boot directly from it instead of from a local disk. The great advantage of this is that when a server fails and has to be replaced, the replacement needs no disk swapping or installation and can boot directly from the ESS, keeping downtime to a minimum.

There will be a stock of pre-configured servers (set up to boot from the ESS) to serve as immediate replacements for critical servers that fail. The Incident Response Team will replace any failed server with one from the stock.

All servers are connected to an operator terminal, from which each of them can be accessed.

Entry point for Accredited Registrars

The entry point for the Accredited Registrars is on the left side of the above figure, where the connection passes through the PIX firewall. The Registry wants to encourage the use of a secure channel, an encrypted IPsec VPN, for all transactions to the database. The use of such hardware is, however, not mandatory, and Registrars willing to accept a lower level of security for their interface may use available software options such as SSL. Higher security on these transactions is believed to benefit all parties, since they involve billing, updates to the database and other critical operations. The Registry will provide information on compliant hardware, so that communication is encrypted with ICSA-compliant IPsec VPN cards installed by both the Registrars and the Registry.

The Registrars have two alternative connection points behind the PIX: the RRP server and the SRRP server. The first runs the RRP protocol as defined in RFC 2832. The second runs SRRP, a stateless protocol designed and proposed by the Registry as an alternative to the established RRP, which provides faster registrations and is easier for new Registrars to implement than RFC 2832 RRP.

The SRRP servers run in a high-availability active-active system, while the RRP server translates RRP requests into SRRP requests, which are then sent to the SRRP servers. We encourage the Registrars to use SRRP because of its simplicity and speed.

WWW entry point

The general entry point for Internet users to the DNS servers, the WHOIS servers and the WWW servers of the Registry is located in the middle of the above figure. Apart from SSL, this entry point is unencrypted; the PIX firewall provides standard protection for this publicly accessible interface.

The DNS servers, the WHOIS servers and the WWW servers are set up in an active-active system controlled by the local director. The setup is highly scalable, and new servers can be added to any part of the solution to handle higher load.

External data-centers for DNS and WHOIS

The connection to the external data centers, some of which are on different backbones, is located in the right part of the hardware diagram above. The external data centers are connected to the main data center through a hardware-encrypted VPN, through which the DNS and WHOIS servers are updated. The number of external DNS and WHOIS servers can be scaled, and new centers can be added if needed. The external WHOIS servers will take load off the internal WHOIS servers in high-traffic periods, and all DNS and WHOIS servers will be updated continuously.

Software structure

The following figure is meant as a high-level summary of the software that is running in the Registry.

Figure 34: Software high level structure

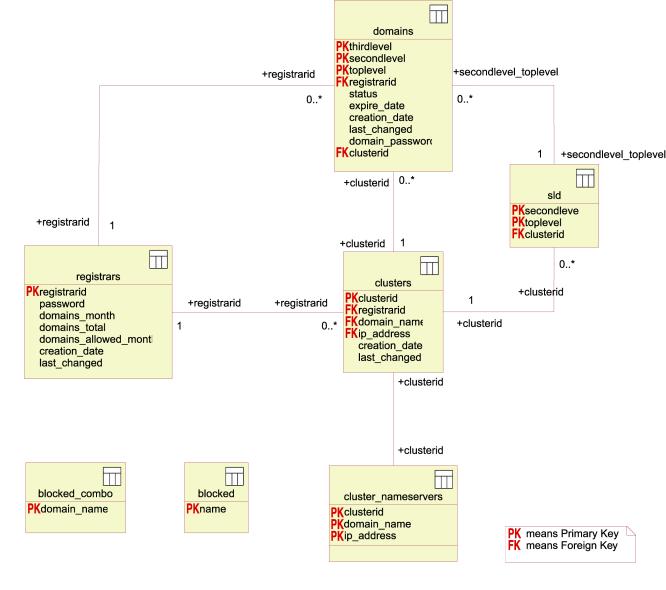

Database description and structure

Figure 35: Database ER diagram

Table Descriptions

Domains

This table contains all information about each domain, such as when it expires, which nameserver cluster it uses and what its status is. All columns must be NOT NULL.

SLD

This table allows the nameservers for domains to be distributed on the second level. If the Registry only allows registrations on the third level, many more domains can be registered than on the second level, and with a large number of registered domains this distribution may become necessary. The introduction of IPv6 will also make DNS a much more complex application, which will again call for load distribution. As a first step, for example, all domains whose second-level label starts with a-m could be assigned to one nameserver cluster and the rest, n-z, to another nameserver cluster. Initially, however, it is not necessary to use this table. All columns must be NOT NULL.
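As a hypothetical illustration of the a-m / n-z example, the following Python sketch picks a nameserver cluster from the second-level label of a name such as `john.example.<tld>` (here `example`). The cluster names are placeholders, and sending labels that start with a digit to the first cluster is an assumption the text does not address.

```python
def cluster_for(second_level_label: str) -> str:
    """Pick a nameserver cluster using the example a-m / n-z split.

    The cluster names are placeholders. Labels that start with a digit are
    sent to the first cluster, which is an assumption the text leaves open.
    """
    first = second_level_label[:1].lower()
    if "n" <= first <= "z":
        return "cluster-n-z"
    return "cluster-a-m"
```

In a live system the split would be looked up in the SLD table rather than hard-coded, so the boundaries could be moved without code changes.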

Clusters

The Clusters table contains all the cluster IDs and their respective nameservers. A cluster must contain at least two nameservers to be valid. All columns must be NOT NULL.

Registrar

This table contains all the Registrar information needed in the database, including billing information and the Registrar password. All columns must be NOT NULL.

Cluster_Nameservers

This table contains all the cluster IDs together with the domain names and IP addresses of the nameservers belonging to each cluster. All columns must be NOT NULL.

Blocked

The Blocked table contains all the words that are blocked on second and third level domains. All columns must be NOT NULL.

Blocked_combo

This table contains all the combinations of third level and second level domains that are blocked. All columns must be NOT NULL.

Location, connectivity and environment descriptions

Physical Security

A physical security system to prevent unauthorised person(s) from obtaining physical access to the premises or the equipment on site should be in place. As a minimum this should consist of access control equipment, intrusion detection equipment and CCTV surveillance equipment as well as having 24-hour security staff on the premises. It should provide access for authorised personnel 24 hours a day.

Hosting Space

Hosting space is to be available within cages and should provide for either standard 19” racks or to allow the installation of non-standard sized equipment, floor mounted equipment or special/custom racks. The cage space should be flexible in that if required, cages can be joined together to form larger caged areas.

Electrical Power

Each full rack or cabinet is to be provided with 10 Amps, 220-240 Volt AC electrical power, on dual redundant power strips.

Electrical power is to be supplied to the co-location centre through two independent circuits from the local power company. The supply must be conditioned and supported by UPS systems and backup diesel generators. All power systems should be designed with N+1 redundancy to ensure reliable operation.

Air Conditioning

Air conditioning is to be provided in the co-location centre with N+1 redundancy and be capable of maintaining the environmental temperature at 20°C ± 5°C and humidity between 20% and 65% suitable for server equipment.

Environmental Monitoring

The physical environment is monitored 24 hours a day. The monitoring systems check a full range of environmental factors, including temperature, humidity, power, security systems and water detection. If any system is found to be operating outside normal working parameters, the on-site staff will investigate and arrange for the appropriate service or maintenance work to be carried out.

Fire Suppression

A fully automatic fire detection and alarm system that is linked to automatic suppression systems is required, with the suppression system based on Argonite (or similar).

Facilities

Meeting room space should preferably be available within the location. In addition, there should be a loading and staging area where equipment deliveries can be accepted 24 hours a day into a secure area.

Burstable Bandwidth

The available bandwidth should provide for a dedicated 100Mbps burstable bandwidth on a dedicated Ethernet port direct to the cage area via redundant network connections.

The bandwidth should be billed based on utilisation. Internet connectivity is to be guaranteed with a robust Service Level Agreement for network availability and performance.

Facility Staff

On-site technical staff should be able to provide the following services on a 24x7x365 basis:

· Server rebooting or power cycling

· Visual inspection

· Carrying out basic commands, given detailed instructions

· Installing equipment into racks

· Cable patching

· Changing tapes or other removable media

Server & Network Monitoring

All servers and network equipment should be externally monitored automatically, by polling the hardware interface of each component, sufficient to identify within a maximum of five minutes when a problem occurs.

Notification

If a condition requiring notification occurs, it must be possible to send the notification by email, telephone, pager or other means.

Bandwidth Reports

Utilisation reports on the level of bandwidth used should be available on a regular basis, at least monthly, with interim reports if required. These should be capable of providing further substantial detail if required.

Administration

Telephone support must be available 24 hours a day, supported by a fully integrated problem management process to identify and track problems.

Technical Engineering Support

Skilled hosting engineers, network engineers and onsite operations support should be available on a 24x7x365 basis.

Service Level Guarantee

Routine and Scheduled Maintenance

No interruption for routine or scheduled maintenance is acceptable, nor is any activity that would leave the facility operating in an unprotected mode.

IBM will investigate how the hosting of the Registry can be arranged and how it can be subcontracted.

D15.2.2 Registry-Registrar Protocol and interface

Interface to the Registry

The configuration of the system for registrations is designed to give the Registrars a problem free, secure and fair access to registrations.

· The firewall and the VPN cards guarantee security, allowing the Registry and the Registrar to perform transactions with greatly reduced risk of sensitive data being compromised.

· The load balancer allows the traffic to be equally distributed over several hardware components, reducing the risk of downtime caused by hardware or software failure in the Registry servers. Also, the capacity for registrations can be increased. If there is a traffic peak that is higher than the Registry can handle in real time, this traffic can be buffered in the Registry servers until the database is available, given that the peak is not so high that all the Registry servers are overloaded. If this is the case, more Registry servers can easily be added.

· Transactions from the Registry servers are passed along and queued on the Registry Logic Unit (RLU) in a controlled manner, making sure that the RLU is never overloaded. The RLU queues the transactions with one queue per Registrar. The transactions will be chosen from each queue in a round robin fashion, skipping empty queues. This allows for a fair distribution of transactions from each Registrar under periods of high load.
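The per-Registrar round robin described above could look like the following Python sketch. The class name and the use of `OrderedDict.move_to_end` to rotate the Registrars are illustrative choices, not the RLU's actual implementation.

```python
from collections import OrderedDict, deque
from typing import Deque, Optional, Tuple


class FairQueue:
    """One FIFO queue per Registrar, served round robin, skipping empty queues."""

    def __init__(self) -> None:
        # Iteration order of the OrderedDict is the current rotation order.
        self.queues: "OrderedDict[str, Deque[str]]" = OrderedDict()

    def put(self, registrar_id: str, transaction: str) -> None:
        self.queues.setdefault(registrar_id, deque()).append(transaction)

    def get(self) -> Optional[Tuple[str, str]]:
        # Serve the first Registrar in rotation order that has work, then move
        # it to the back so the other Registrars get the next turns.
        for registrar_id in list(self.queues):
            queue = self.queues[registrar_id]
            if queue:
                self.queues.move_to_end(registrar_id)
                return registrar_id, queue.popleft()
        return None  # all queues are empty
```

A Registrar that has just been served moves to the back of the rotation, so a Registrar with a long backlog gets one transaction per round, like every other Registrar with pending work.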

A new, Stateless Registry-Registrar Protocol

GNR is proposing a new protocol for communication between the Registry and the multiple Registrars. This new protocol, the Stateless Registry-Registrar Protocol (SRRP), is designed to overcome most of the known problems with the existing RRP in use today, as defined in RFC 2832. SRRP is proposed as a supplement to the RRP. The Registry will encourage the Registrars to use SRRP for communication with the Registry, but a supplementary interface will exist for Registrars who want to use their existing RRP implementations.

In the event of delegation from ICANN, the protocol proposed herein will be submitted as a new RFC, as in Appendix D.5.

To promote the use of SRRP, the Registry will provide the Registrars with APIs for the protocol, making it easy and fast to implement. Given its stateless nature, SRRP is easier for new Registrars to implement than the RRP, and it also has advantages in other areas:

· Transfer commands may be approved by the Registrant through the domain password, so a domain may be transferred without approval from the Registrar from which it is being transferred.

· The protocol uses coordinated universal time (UTC) for all operations.

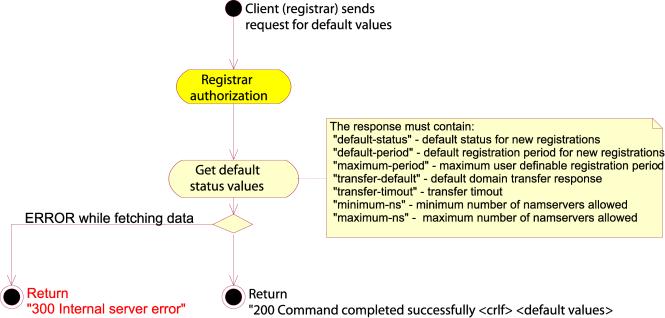

· The client may discover system-defined default values and limits.

· The protocol gives the client complete idempotency: repeating a command does not alter the data any further.

· It puts less strain on the server by using a stateless protocol model and moving some of the complexity to the clients.

· The protocol is designed to minimize the number of database transactions in order to keep the performance high.

In the event of delegation, GNR will submit the protocol for review as an RFC (attached).

Abstract of the protocol

The purpose of SRRP is to provide a stateless service for communication between the Registrar and the Registry. The design goal of the protocol is to provide a consistent service for a very large number of clients by not maintaining client state information on the server, and to keep the policy enforcement done by the protocol to a minimum.

The protocol describes the communication between a Registrar, normally acting on behalf of a Registrant, and the Registry. The Registrar may perform operations such as creating domains, creating logical entities of name servers, assigning name servers to a domain, transferring a domain, and querying the server for information about name server entities, domains or the server itself.

SRRP is intended to fix several shortcomings of the RRP defined by NSI in RFC 2832, by removing some of the less frequently used features and by using a stateless protocol model. The goals of the protocol are:

· Provide only the strictly required functionality

· Provide a completely stateless service

· Provide service to a very large number of clients

· Be implementation and performance friendly

Terminology used

· The “request message” or “client request” is the message sent from the client to the server. It consists of a one-line “request header” and a multi-line “request body”.

· The “response message” or “server response” is always the response to a request message and is sent from the server to the client. It consists of a one-line “response header” and possibly a “response body”.

· “LWS”, linear white space, is any combination of ASCII space (SP) and ASCII tabulator (TAB) characters.

· “CRLF” is one ASCII carriage return (CR) character followed by one ASCII line feed (LF) character.

· An “attribute/value pair” consists of a short textual string, termed “attribute”, an ASCII ‘=’ character, and another string, termed “value”. The attribute/value pair is terminated by a CRLF sequence, and thus a line may only contain one attribute/value pair.

· The “client” is the Registrar’s client software, and likewise the “server” is the Registry’s server software.

· An “object” is a set of attribute/value pairs that the server operates on. Currently there are two kinds of objects: domain objects and cluster objects.

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119 [1].

Protocol model

The protocol is a simple one-way client-server protocol using a textual format for easier debugging. A transaction is always initiated by the client, and the server must answer every valid request message with a response message containing a response code indicating the outcome of the client request. A well-behaved client SHOULD wait for a response from the server before it issues a new request.

The messages should contain only printable ISO-8859-1 characters, i.e. characters in the range 32-126 and 160-255, inclusive. Other character sets and binary data are not supported in the current version of SRRP, but support may be added later by using a character encoding.
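A server could enforce the character rule with a check like the Python sketch below. It treats 32 (the space character) as the lowest printable code, and it also accepts CR, LF and TAB because CRLF terminates every line and LWS may contain TAB; how those delimiters combine with the printable-range rule is an assumption, and the function name is hypothetical.

```python
def is_valid_srrp_text(message: bytes) -> bool:
    """True if every byte is printable ISO-8859-1 or a line/field delimiter.

    Printable is taken as 32-126 and 160-255. CR, LF and TAB are also
    accepted because CRLF ends lines and LWS may contain TAB; that is an
    assumption about how the character rule combines with those definitions.
    """
    delimiters = {0x09, 0x0A, 0x0D}
    return all(32 <= b <= 126 or 160 <= b <= 255 or b in delimiters
               for b in message)
```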

The Registrar is identified to the Registry by an attribute/value pair in the request body, and authenticated by a similar attribute/value pair. As the protocol itself provides no other security measures, the client MUST connect to the server using a secure, reliable communication method such as SSL [2] or an encrypted tunnel.

Protocol objects

Protocol objects are logical groupings of attribute/value pairs that are manipulated using SRRP.

Domain objects

The domain object is a collection of information defining a registered domain in the system. The domain object should contain the following attributes:

· Exactly one “registrar-id” attribute identifying the owner of the object.

· Exactly one “domain-name” attribute containing the name of the domain.

· Exactly one “expiry-date” attribute containing the expiry date for the registration. If the client does not specify a date, a system default should be used.

· Exactly one “status” attribute defining the current status of the object. This should be set to a system default if not specified by the client.

· Exactly one “cluster-id” attribute identifying a cluster object for this domain object.

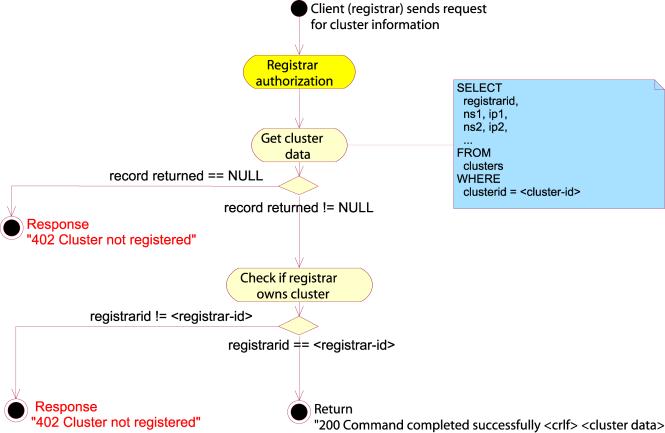

Cluster objects

The purpose of the cluster object is to let the Registrar create a single object to which it can attach domain names, making it easier to handle large volumes of domain names that use the Registrar’s DNS servers.

The cluster object is a collection of name server information. Both the name and the address of the name server are stored for every name server in the cluster. The name servers are stored in attributes starting with “nsi-”, where “i” is any positive integer starting with one (1), possibly limited by the server. For instance, the first name server in a cluster object will have its IP address in the attribute “ns1-address” and its name in the attribute “ns1-name”. This pair is termed the “name server entry”.

The client should store the name servers in increments of one, as the server MAY choose to stop looking for name servers when it finds an empty name server entry, assuming that it has reached the end of the cluster.

The cluster object consists of any number of name server entries starting with “nsi-”, where i is a positive integer starting with one (1) and increasing in increments of one (1) for every name server entry.
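A minimal Python sketch of how a server might read the name server entries of a cluster object is shown below. It stops at the first incomplete entry, as the server MAY do, and applies the at-least-two rule from the Clusters table description; the function names and the limit of 10 entries are hypothetical.

```python
from typing import Dict, List, Tuple


def name_server_entries(attrs: Dict[str, str],
                        limit: int = 10) -> List[Tuple[str, str]]:
    """Collect (name, address) pairs from ns1-*, ns2-*, ... up to the first gap.

    Stopping at the first incomplete entry mirrors what the server MAY do.
    `limit` stands in for a server-defined maximum; 10 is an arbitrary value.
    """
    entries = []
    for i in range(1, limit + 1):
        name = attrs.get(f"ns{i}-name")
        address = attrs.get(f"ns{i}-address")
        if name is None or address is None:
            break  # first empty entry: treat the cluster as complete
        entries.append((name, address))
    return entries


def is_valid_cluster(entries: List[Tuple[str, str]]) -> bool:
    # The Clusters table requires at least two name servers per cluster.
    return len(entries) >= 2
```

Because numbering gaps end the scan, an entry such as “ns4-name” after a missing “ns3” pair would be silently ignored, which is why the client should number entries consecutively.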

Request message format

The request message consists of a header line containing the command to be performed, a command argument and the version number of the protocol. These fields are separated by one or more LWS characters, and the header line is terminated by one CRLF sequence.

Following the header line, the client may add one or more lines of attribute/value pairs, the request body. While the protocol does not require the client to issue any attribute/value pairs, the authentication credentials are specified using attribute/value pairs in the request body, and these are required by every command currently specified. The order of the attribute/value pairs in the request body is arbitrary.

The request message is terminated by the ASCII end of file (EOF) character, and the server MUST disconnect from the client whenever it encounters EOF.
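The request layout above can be serialised with a short Python sketch such as the following. The function name is hypothetical, and the sketch assumes that “EOF” means the end of the byte stream, so it does not append any terminator character; encoding with ISO-8859-1 (`latin-1`) matches the character set rule of the protocol.

```python
from typing import Iterable, Tuple

CRLF = "\r\n"


def build_request(command: str, argument: str,
                  body: Iterable[Tuple[str, str]],
                  version: str = "SRRP/1.0") -> bytes:
    """Serialise a request: a header line, then one attribute=value per line."""
    lines = [f"{command} {argument} {version}"]
    for attribute, value in body:
        # An attribute may not contain '=', and no field may span lines.
        if "=" in attribute or any(c in attribute + value for c in "\r\n"):
            raise ValueError(f"cannot encode attribute {attribute!r}")
        lines.append(f"{attribute}={value}")
    # Every line, including the last, ends in CRLF. The caller marks the end of
    # the message by ending the stream (assumed here to be the "EOF").
    return (CRLF.join(lines) + CRLF).encode("latin-1")
```

Under that reading of EOF, a client could send the bytes with `socket.sendall` and then signal the end of the message with `socket.shutdown(socket.SHUT_WR)`, while keeping the read side open for the response.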

Example request message:

    CREATE DOMAIN SRRP/1.0
    registrar-id=123456789
    registrar-auth= pass-phrase
    domain-name=example.com
    status=inactive
    cluster-id=987654321

Please note the usage of ‘=’ and space characters in the registrar-auth attribute value. This is valid because there must be exactly one attribute/value pair on every line, and everything after the first ‘=’ up to the CRLF is considered part of the attribute value.
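The first-‘=’ rule maps directly onto Python’s `str.partition`, as in the sketch below. The function name is hypothetical, and the value ` pass=phrase` in the test is made up to show an embedded ‘=’ and a leading space surviving intact.

```python
from typing import List, Tuple


def parse_body(body: str) -> List[Tuple[str, str]]:
    """Split each CRLF-terminated line at the first '=' only.

    A list (not a dict) is returned so that repeated attributes keep
    their order.
    """
    pairs = []
    for line in body.split("\r\n"):
        if not line:
            continue  # skip empty lines, including the one after the final CRLF
        attribute, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"line without '=': {line!r}")
        pairs.append((attribute, value))
    return pairs
```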

Response format

For every valid request message received from a client, the server MUST issue a response message starting with a one-line header containing a valid response code and a short description of the response code, separated by one or more LWS characters and terminated by a CRLF sequence.

If the client request was completed successfully and the server needs to send additional information in the response message, it must send this information in one or more lines of attribute/value pairs contained in the response body. The response body is terminated by an EOF character, which also marks the end of the response message. If the command failed, the response code is 3XX (Temporary failure) or 4XX (Permanent failure), and the server MAY add one or more “text” attributes to the response body further describing the error condition.

The response body for a successful command MUST contain only attributes defined for that particular command. The order of the attributes in the response body is arbitrary, with one exception: the order of the special “text” attributes is significant, as they carry human-readable data. The server MUST send the “text” attributes in the order they are stored or retrieved, and likewise the client MUST read them in the order received.
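A client-side parser that honours these ordering rules might look like the Python sketch below: ordinary attributes go into a dictionary, since their order is arbitrary, while “text” values are kept in a list in the order received. The function name and return shape are hypothetical.

```python
from typing import Dict, List, Tuple


def parse_response(raw: str) -> Tuple[int, str, Dict[str, str], List[str]]:
    """Return (code, description, attributes, text lines in received order)."""
    header, _, body = raw.partition("\r\n")
    # Code and description are separated by LWS; keep the description whole.
    code_field, description = header.split(None, 1)
    attributes: Dict[str, str] = {}
    texts: List[str] = []
    for line in body.split("\r\n"):
        if not line:
            continue
        attribute, _, value = line.partition("=")
        if attribute == "text":
            texts.append(value)  # order matters for "text" attributes
        else:
            attributes[attribute] = value  # order of other attributes is arbitrary
    return int(code_field), description, attributes, texts
```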

Example response message for a QUERY CLUSTER command:

    200 Command completed successfully
    ns1-address=192.168.4.5
    ns1-name=ns1.example.com
    ns2-address=192.168.4.6
    ns2-name=ns2.example.com
    ns3-address=10.10.56.11
    ns3-name=ns1.example.net

The response code of 200 indicates that the command was successfully completed, and the response body contains the data returned from the command, which is a set of attribute/value pairs.

Example response message for a QUERY DOMAIN command:

    200 Command completed successfully
    domain-name=example.com
    registrar-id=123456789
    expiry-date=2003-02-09
    created-date=2001-02-09
    cluster-id=987654321
    status=active
    text=Last-change: CREATE DOMAIN
    text=Changed-date:2001-02-13 10:15:12 UTC
    text=Changed-by: registrar 123456789

This is a more complex response, containing both normal attributes and ordered “text” attributes. If the domain did not exist, the response would be a 401 Domain not registered, possibly with one or more “text” attributes giving a human-readable explanation of the error.

Client requirements

The client MUST NOT make any assumptions about the length of attribute values. The ordering of the attributes is irrelevant except for the “text” attributes, whose order the client MUST preserve.

Server requirements

The server SHOULD issue a response message to every well-formed client request message. A client request message is considered well formed when it contains an initial header line consisting of three fields separated by one or more LWS characters, the last of which is recognized as an SRRP protocol version number. If the client request message is not well formed, the server MUST drop the connection immediately.
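The well-formedness test and the version rule that follows it can be combined in one header check, sketched below in Python. The pattern used to recognise an SRRP version (`SRRP/<digits>.<digits>`) and the set of supported versions are assumptions; the three outcomes correspond to dropping the connection, answering 413 Unsupported protocol version, and processing the request.

```python
import re
from typing import Optional, Tuple

# Assumption: SRRP/1.0 is the only version this server supports.
SUPPORTED_VERSIONS = {"SRRP/1.0"}
# Three fields separated by LWS (SP/TAB); the last must look like a version.
HEADER = re.compile(r"(\S+)[ \t]+(\S+)[ \t]+(SRRP/\d+\.\d+)")


def check_header(line: str) -> Tuple[str, Optional[Tuple[str, ...]]]:
    """Classify a request header line (without its CRLF)."""
    match = HEADER.fullmatch(line)
    if match is None:
        return "drop", None  # not well formed: close the connection
    if match.group(3) not in SUPPORTED_VERSIONS:
        return "413", None  # well formed, but the version is not supported
    return "ok", match.groups()
```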

The server MUST answer the client request with a response message using the same version of SRRP as the client request message. If the server is unable to answer the request using the same protocol version as the client, it must issue a 413 Unsupported protocol version message.

SRRP commands

This section contains the commands defined for use in client request messages and their expected responses. All of these messages MUST contain a “registrar-id” attribute identifying the Registrar issuing the command, and a “registrar-auth” attribute authenticating the Registrar. Clients may only view and/or change their own objects; attempts to operate on objects belonging to other Registrars should result in a 411 Access denied error message.

Note that the ordering of the attribute/value pairs is not significant except for the “text” attributes.

CREATE

The CREATE commands are used for adding an object to the Registry. In the current release of SRRP, the “domain” and “cluster” object types are supported, containing a domain registration and a series of name server registrations, respectively.

CREATE DOMAIN

The CREATE DOMAIN command attempts to register the domain name contained in the “domain-name” attribute in the request body.

The request body MAY also contain any of the following attributes:

· Exactly one “expiry-date” attribute giving the requested expiration date of the registration.

· Exactly one “cluster-id” attribute pointing to a cluster object containing the name servers for this domain.

· Exactly one “status” attribute giving the current status of the domain.

· Exactly one “domain-auth” attribute containing a Registrar-assigned password for this domain.

· Zero or more (possibly server-limited) name server entries, each consisting of the attributes “nsi-address” and “nsi-name”, where “i” is a positive integer.

If the user specifies any name server entries, the server must attempt to create a cluster object for these. If successful, it MUST return the following attribute/value pairs:

· Exactly one “cluster-id” attribute containing the cluster ID of the newly created cluster object. The client must store this value, as it is the only way of keeping track of the cluster object.

· Exactly one “expiry-date” attribute containing the expiry date for the domain.

· Exactly one “status” attribute containing the status of the domain.

Note that the server may limit the minimum and/or maximum number of name servers the user is allowed to specify. The server should notify the client of any limitations on the number of name servers in the STATUS DEFAULTS response body.

If the client specifies both a “cluster-id” attribute and any number of name server entries, the server SHOULD ignore the name server entries and use the cluster ID.

If the cluster ID does not exist in the system, the response message should be a 402 Cluster not registered error message. If the expiry date is invalid, the response message should be a 405 Invalid expire date error message. If the “status” attribute contains an unknown status value, the response message should be a 404 Invalid attribute value error message. If the client specifies too few or too many name servers, the server should respond with a 406 Invalid number of name servers error message. If the client attempts to register a domain which is blacklisted, the server should issue a 409 Blocked domain error message. If the client does not have the necessary credit to register the domain, the response message should be a 410 Credit failure error message.
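The CREATE DOMAIN checks above can be collected into one validation function, sketched below. The text does not say in which order the checks apply when several fail, so the order here is an assumption, as are the `RegistryState` class, the name server limits (2 to 13), the price of one credit unit and the default status “active”. The valid status values are taken from the SET STATUS description.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, Optional, Set


@dataclass
class RegistryState:
    """Hypothetical in-memory registry state, used only for this sketch."""
    clusters: Set[str] = field(default_factory=set)
    blocked_domains: Set[str] = field(default_factory=set)
    credit: Dict[str, int] = field(default_factory=dict)  # registrar-id -> credit units
    min_ns: int = 2   # assumed limits; a real server publishes its own in STATUS DEFAULTS
    max_ns: int = 13
    price: int = 1    # assumed cost of one registration, in credit units


def create_domain_error(state: RegistryState, attrs: Dict[str, str],
                        ns_count: int) -> Optional[str]:
    """Return the SRRP error line for a CREATE DOMAIN request, or None if it may proceed."""
    if "cluster-id" in attrs:
        # An explicit cluster-id wins; any name server entries are ignored.
        if attrs["cluster-id"] not in state.clusters:
            return "402 Cluster not registered"
    elif ns_count > 0 and not state.min_ns <= ns_count <= state.max_ns:
        # Assumption: the limits are only checked when entries are supplied.
        return "406 Invalid number of name servers"
    if "expiry-date" in attrs:
        try:
            date.fromisoformat(attrs["expiry-date"])
        except ValueError:
            return "405 Invalid expire date"
    if attrs.get("status", "active") not in ("active", "inactive"):
        return "404 Invalid attribute value"
    if attrs["domain-name"].lower() in state.blocked_domains:
        return "409 Blocked domain"
    if state.credit.get(attrs["registrar-id"], 0) < state.price:
        return "410 Credit failure"
    return None
```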

Examples:

CREATE DOMAIN SRRP/1.0

registrar-id=123456789

registrar-auth=pass-phrase

cluster-id=987654321

domain-name=example.com

In this example, the Registrar 123456789 adds the domain example.com using the default expiry date, the default status and a predefined cluster object.

CREATE DOMAIN SRRP/1.0

registrar-id=123456789

registrar-auth=pass-phrase

domain-name=example.com

ns1-address=114.72.91.131

ns1-name=ns1.example.com

ns2-address=114.72.91.132

ns2-name=ns2.example.com

Here, the Registrar specifies two name servers in the request body. If the number of name servers (two) is valid, the response might look like this:

200 Command completed successfully

cluster-id=987654321

expiry-date=2004-03-12

status=active

The client would now own the cluster object identified by 987654321, containing the two name servers ns1.example.com and ns2.example.com and their IP addresses.

Figure 36: Create Domain

CREATE CLUSTER

Cluster objects for name servers may be added by using the CREATE CLUSTER command. The request body contains a number of name server entries, each consisting of the attributes “nsi-address” and “nsi-name”, where “i” is a positive integer. The minimum and/or maximum number of name server entries may be limited by the server, and the server should show these limits in the STATUS DEFAULTS response body. The server must create a cluster object for this client and respond with a “cluster-id” attribute in the response body containing the ID of the newly created cluster object. The client must store the cluster ID, as this is the only way of keeping track of the cluster object.
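On the client side, a CREATE CLUSTER request can be assembled by numbering the name servers from 1, as in the sketch below. The helper names are hypothetical, and the `\n` line terminator is an assumption: this section does not define the exact message framing (line endings and end-of-message marker), so a real client must follow the framing rules given elsewhere in the specification.

```python
from typing import List, Sequence, Tuple


def format_request(command: str, pairs: Sequence[Tuple[str, str]],
                   version: str = "1.0") -> str:
    """Render a request line followed by one attribute=value pair per line."""
    lines: List[str] = ["%s SRRP/%s" % (command, version)]
    lines += ["%s=%s" % (attr, value) for attr, value in pairs]
    return "\n".join(lines) + "\n"  # assumed line terminator


def create_cluster_request(registrar_id: str, auth: str,
                           servers: Sequence[Tuple[str, str]]) -> str:
    """servers is a sequence of (host name, IP address) tuples, numbered from 1."""
    pairs = [("registrar-id", registrar_id), ("registrar-auth", auth)]
    for i, (name, address) in enumerate(servers, start=1):
        pairs.append(("ns%d-address" % i, address))
        pairs.append(("ns%d-name" % i, name))
    return format_request("CREATE CLUSTER", pairs)
```

Calling `create_cluster_request("123456789", "pass-phrase", servers)` with the three name servers of the example below yields the same attribute/value pairs as that example.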

If the client specifies too few or too many name servers, the server should respond with a 406 Invalid number of name servers error message.

Example:

CREATE CLUSTER SRRP/1.0

registrar-id=123456789

registrar-auth=pass-phrase

ns1-address=114.72.91.131

ns1-name=ns1.example.com

ns2-address=114.72.91.132

ns2-name=ns2.example.com

ns3-address=114.72.91.133

ns3-name=ns3.example.com

A typical response message would look like this:

200 Command completed successfully

cluster-id=987654321

The cluster identified by the cluster ID 987654321 is now assigned to the Registrar identified by the Registrar ID 123456789, and contains three name servers.

Figure 37: Create cluster

SET

The SET command is functionally equivalent to the CREATE command, except that it overwrites any previous data contained in the attribute.

SET EXPIRE

If not specified, the expiry date is set to a system default, i.e. one year after the registration was performed. However, the Registrar may change the expiry date by issuing a SET EXPIRE command with the domain in the “domain-name” attribute and the requested expiry date in the “expiry-date” attribute. The previous expiry date of the domain object will be overwritten by the new one.

The value of the “expiry-date” attribute should be the year, month and day of the requested registration expiry date, specified as a four-digit year, a two-digit month and a two-digit day, separated by ASCII ‘-’ characters (for example, 2007-06-04). The client MUST specify the expiry date in UTC (Coordinated Universal Time).

The system may have an upper limit on the length of a registration, and if the Registrar attempts to set an expiry date past this limit, the server must respond with a 405 Invalid expire date error message.
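A sketch of the expiry-date validation follows. It enforces the strict YYYY-MM-DD form and a calendar-valid date. The ten-year ceiling (`MAX_TERM_YEARS`) is a hypothetical value, since the actual limit is registry policy. Rejecting dates that are not in the future is also an assumption; the text only says an invalid date yields 405.

```python
import re
from datetime import date
from typing import Optional

EXPIRY_RE = re.compile(r"([0-9]{4})-([0-9]{2})-([0-9]{2})")
MAX_TERM_YEARS = 10  # hypothetical upper limit; the real value is registry policy


def add_years(d: date, years: int) -> date:
    """Shift a date by whole years, mapping 29 February to 28 February if needed."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:  # 29 February in a non-leap target year
        return d.replace(year=d.year + years, day=28)


def check_expiry(value: str, today_utc: date) -> Optional[str]:
    """Return '405 Invalid expire date', or None if the date is acceptable."""
    m = EXPIRY_RE.fullmatch(value)
    if m is None:
        return "405 Invalid expire date"
    try:
        expiry = date(int(m.group(1)), int(m.group(2)), int(m.group(3)))
    except ValueError:  # e.g. 2007-02-30
        return "405 Invalid expire date"
    # Assumption: a date that is not in the future is also rejected.
    if expiry <= today_utc or expiry > add_years(today_utc, MAX_TERM_YEARS):
        return "405 Invalid expire date"
    return None
```

Because the date is defined in UTC, a server would pass `datetime.now(timezone.utc).date()` as `today_utc` rather than the local date.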

Example:

SET EXPIRE SRRP/1.0

registrar-id=123456789

registrar-auth=pass-phrase

expiry-date=2007-06-04

domain-name=example.com

This will set the expiry date of the domain example.com to 4 June 2007.

Figure 38: Set expire

SET CLUSTER

The SET CLUSTER command assigns a cluster of name servers, identified by the “cluster-id” attribute in the request body, to the domain object specified by the “domain-name” attribute.

If the domain object is unknown, the server must respond with a 401 Domain not registered error message. If the cluster object is unknown, the server must respond with a 402 Cluster not registered error message.
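The SET CLUSTER behaviour can be sketched with plain dictionaries standing in for the Registry database (a hypothetical data model). When both objects are unknown, this sketch reports 401 first; the text does not fix that precedence, so it is an assumption.

```python
from typing import Dict, Set


def set_cluster(domains: Dict[str, Dict[str, str]], clusters: Set[str],
                domain_name: str, cluster_id: str) -> str:
    """Point a domain object at an existing cluster object."""
    if domain_name not in domains:
        return "401 Domain not registered"
    if cluster_id not in clusters:
        return "402 Cluster not registered"
    domains[domain_name]["cluster-id"] = cluster_id  # overwrite, as SET does
    return "200 Command completed successfully"
```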

Example:

SET CLUSTER SRRP/1.0

registrar-id=123456789

registrar-auth=pass-phrase

cluster-id=987654321

domain-name=example.com

Here the client will change the “cluster-id” attribute for the domain example.com to 987654321, if both the domain and cluster objects exist.

Figure 39: Set cluster

SET STATUS

The client may change the status of a domain object by using the SET STATUS command. The following values are valid:

· “inactive”, signaling that the domain is not active.

· “active”, signaling that the domain is active.
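A minimal SET STATUS handler might look as follows, again using a hypothetical dictionary in place of the database. The text lists only the two valid values. Reusing the 404 Invalid attribute value error (defined for CREATE DOMAIN) for any other value is an assumption.

```python
from typing import Dict

VALID_STATUSES = ("active", "inactive")


def set_status(domains: Dict[str, Dict[str, str]], domain_name: str, status: str) -> str:
    """Change the status of a domain object."""
    if domain_name not in domains:
        return "401 Domain not registered"
    if status not in VALID_STATUSES:
        return "404 Invalid attribute value"  # assumption: same code as CREATE DOMAIN
    domains[domain_name]["status"] = status
    return "200 Command completed successfully"
```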

Example:

SET STATUS SRRP/1.0

registrar-id=123456789

registrar-auth=pass-phrase

domain-name=example.com

status=inactive

In this example, the client deactivates the domain “example.com” by setting its status to “inactive”.


Figure 40: Set status

SET NAMESERVERS

The SET NAMESERVERS command is used for replacing all of the name server entries in a cluster object. The request body should contain exactly one “cluster-id” attribute identifying the cluster object and a number of name server entries defining the new name servers for the cluster object.

The name server entries consist of the attributes “nsi-address” and “nsi-name”, where “i” is a positive integer. The minimum and/or maximum number of name server entries may be limited by the server, and the server should show these limits in the STATUS DEFAULTS response body.

The new name server entries should completely replace all previous name server entries.

If the cluster ID does not exist in the system, the response message should be a 402 Cluster not registered error message. If the client specifies too few or too many name servers, the server should respond with a 406 Invalid number of name servers error message.
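The replace-not-merge semantics of SET NAMESERVERS can be sketched as below. The limits `min_ns=2` and `max_ns=13` are hypothetical defaults, and the dictionary of clusters is an illustrative stand-in for the database. Note that a rejected request leaves the old entries untouched.

```python
from typing import Dict, List, Tuple

NameServer = Tuple[str, str]  # (host name, IP address)


def set_nameservers(clusters: Dict[str, List[NameServer]], cluster_id: str,
                    entries: List[NameServer], min_ns: int = 2, max_ns: int = 13) -> str:
    """Replace every name server entry of a cluster object."""
    if cluster_id not in clusters:
        return "402 Cluster not registered"
    if not min_ns <= len(entries) <= max_ns:
        return "406 Invalid number of name servers"
    clusters[cluster_id] = list(entries)  # full replacement, never a merge
    return "200 Command completed successfully"
```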

Example:

SET NAMESERVERS SRRP/1.0

registrar-id=123456789

registrar-auth=pass-phrase

cluster-id=987654321

ns1-address=171.81.19.159

ns1-name=ns1.example.com

ns2-address=171.81.19.160

ns2-name=ns2.example.com

This will remove all existing name server entries from the cluster object in question and replace them with the two name servers above.

Figure 41: Set nameservers

SET PASSWORD

The client may change the domain password of a domain object using the SET PASSWORD command. The new domain password should be given in the “domain-auth” attribute.

The purpose of the domain password is to authorize domain transfers between Registrars. The transfer request message should contain the domain password for the requested domain, and the server should only perform the transfer when the password is correct.

Example:

SET PASSWORD SRRP/1.0

registrar-id=123456789

registrar-auth=pass-phrase

domain-name=example.com

domain-auth=domain-pass-phrase

domain-pw-set=new-domain-pass-phrase
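The password check made before a transfer could be sketched as follows. Using `hmac.compare_digest` (a constant-time comparison from the Python standard library) is a design choice of this sketch, not something the text prescribes; it avoids leaking how many leading characters of a guess were correct. The plain dictionary of stored passwords is hypothetical.

```python
import hmac
from typing import Dict


def transfer_authorized(domain_passwords: Dict[str, str], domain_name: str,
                        supplied: str) -> bool:
    """True only if the supplied domain password matches the stored one."""
    stored = domain_passwords.get(domain_name)
    if stored is None:
        return False
    # Compare as bytes so non-ASCII pass-phrases are handled as well.
    return hmac.compare_digest(stored.encode("utf-8"), supplied.encode("utf-8"))
```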

DELETE

The DELETE command is used for deleting objects.

DELETE DOMAIN

The DELETE DOMAIN command will attempt to delete a domain object. The request body must contain exactly one “domain-name” attribute specifying the domain to be deleted.

If the domain object cannot be found, the server must respond with a 401 Domain not registered error message.

Example:

DELETE DOMAIN SRRP/1.0

registrar-id=123456789

registrar-auth=pass-phrase

domain-name=example.name

This will delete the domain example.name, provided that the Registrar attempting the operation has the proper authorization.

Figure 42: Delete domain

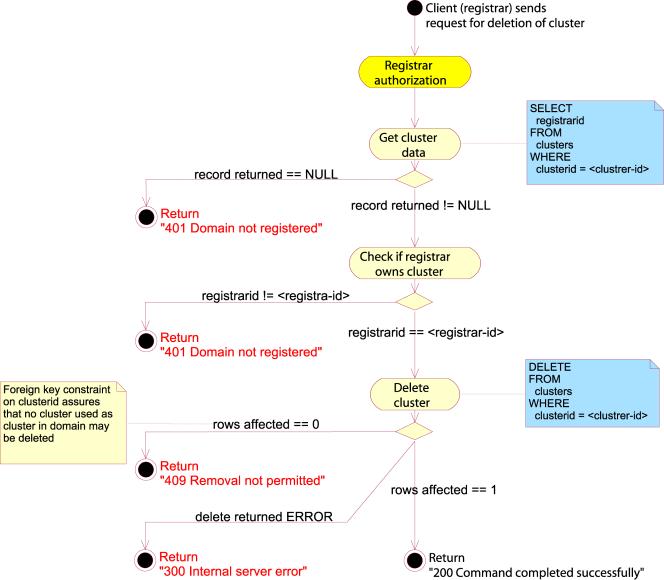

DELETE CLUSTER

The DELETE CLUSTER command will attempt to delete a cluster object. The request body must contain exactly one “cluster-id” attribute identifying the cluster object to be deleted.

If the cluster object cannot be found, the server must respond with a 402 Cluster not registered error message. If a client attempts to delete a cluster object which is in use by one or more active domain objects, the server should return a 408 Removal not permitted error message. The client will then have to assign another cluster ID to the domain objects using this cluster object, or set their status to “inactive”, before attempting the operation again.

Note that all the name server attribute groups contained within the cluster object will be deleted too.
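The in-use rule for DELETE CLUSTER is sketched below with hypothetical dictionaries for clusters and domains. Only domains whose status is “active” block the deletion, matching the text; inactive domains may still refer to the removed cluster ID afterwards, which this sketch does not clean up.

```python
from typing import Dict, List, Tuple


def delete_cluster(clusters: Dict[str, List[Tuple[str, str]]],
                   domains: Dict[str, Dict[str, str]], cluster_id: str) -> str:
    """Delete a cluster object unless an active domain still uses it."""
    if cluster_id not in clusters:
        return "402 Cluster not registered"
    in_use = any(d.get("cluster-id") == cluster_id and d.get("status") == "active"
                 for d in domains.values())
    if in_use:
        return "408 Removal not permitted"
    # Deleting the cluster also deletes the name server entries it contains.
    del clusters[cluster_id]
    return "200 Command completed successfully"
```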

Example:

DELETE CLUSTER SRRP/1.0

registrar-id=123456789

registrar-auth=pass-phrase

cluster-id=987654321

This will delete the cluster object identified by the “cluster-id” attribute.

Figure 43: Delete cluster

QUERY

The QUERY commands are used for fetching all the available information about an object.

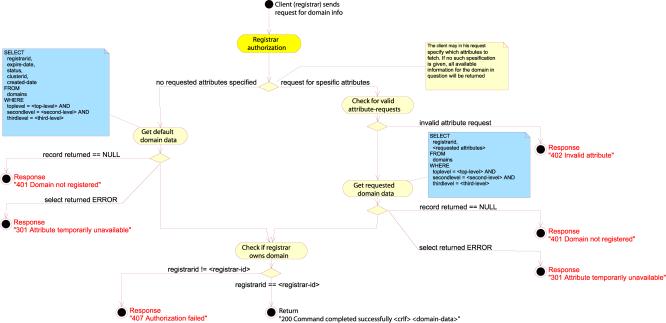

QUERY DOMAIN

The QUERY DOMAIN command will attempt to retrieve some or all of the information for a domain. The request message must contain exactly one “domain-name” attribute giving the name of the domain object to query, and zero or more “get-specific” attributes naming the specific attributes to fetch.

If no “get-specific” attributes are present in the query, the server must return all available information for the domain object. If one or more “get-specific” attributes are specified, the server must return the values of all the attributes named by the “get-specific” attributes or an error message.
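The selection rule for “get-specific” attributes can be sketched as follows, with a domain object modelled as a hypothetical dictionary of attribute values. The sketch returns everything when no “get-specific” attributes are given, and otherwise either all requested values or an error. The SRRP error code for an unknown attribute is not reproduced here, so the sketch raises `KeyError` instead.

```python
from typing import Dict, List


def query_domain(domain: Dict[str, str], get_specific: List[str]) -> Dict[str, str]:
    """Return all attributes, or only those named by "get-specific" attributes."""
    if not get_specific:
        return dict(domain)
    missing = [name for name in get_specific if name not in domain]
    if missing:
        raise KeyError(", ".join(missing))  # stands in for an SRRP error response
    return {name: domain[name] for name in get_specific}
```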

If the

server is unable to return the required information, it must return a 301 Attribute

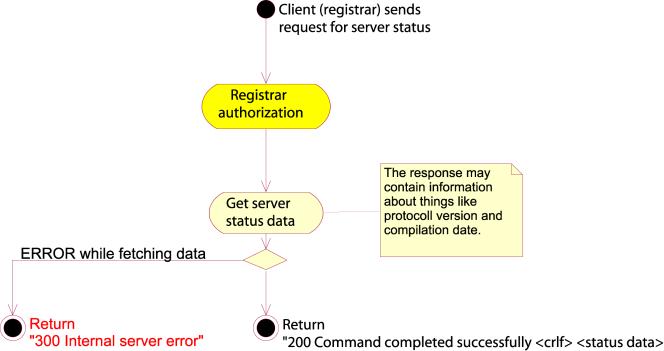

temporarily unavailable. If one or more of the “get-specific” attributes