III.2 Technical Plan For The Proposed Registry Operations (RFP Section D15.2)

JVTeam’s proposed technical solution for registry operations meets ICANN’s (and Internet users’) requirements for a new TLD as follows:

Introducing Competition—JVTeam will develop and deploy a new, streamlined registry-registrar protocol: the extensible registry protocol (XRP). The XRP provides more features and functionality than the existing registry/registrar interface, and far greater security. The benefits to the Internet community are greatly improved Internet stability and increased public confidence. JVTeam will work with the Internet Engineering Task Force (IETF) to bring the protocol to standard status.

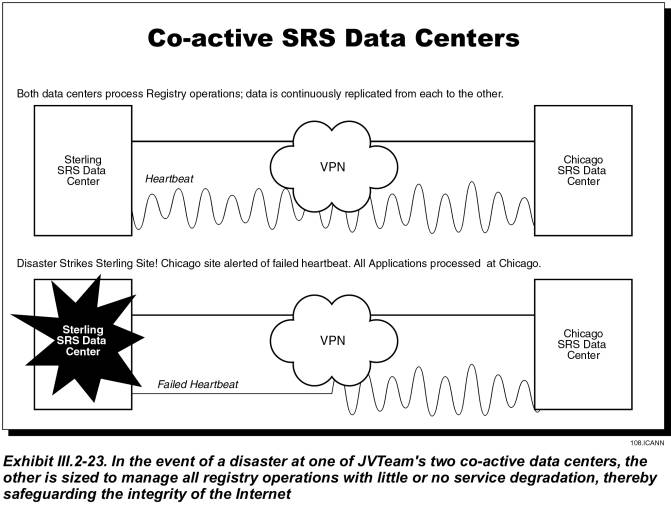

Improving Registry Reliability—JVTeam will implement co-active data centers and a number of nameserver data centers to create a resilient infrastructure protected against outages through redundancy, fault tolerance, and geographic dispersion. The benefits to the Internet community are improved registry availability and better access to DNS services.

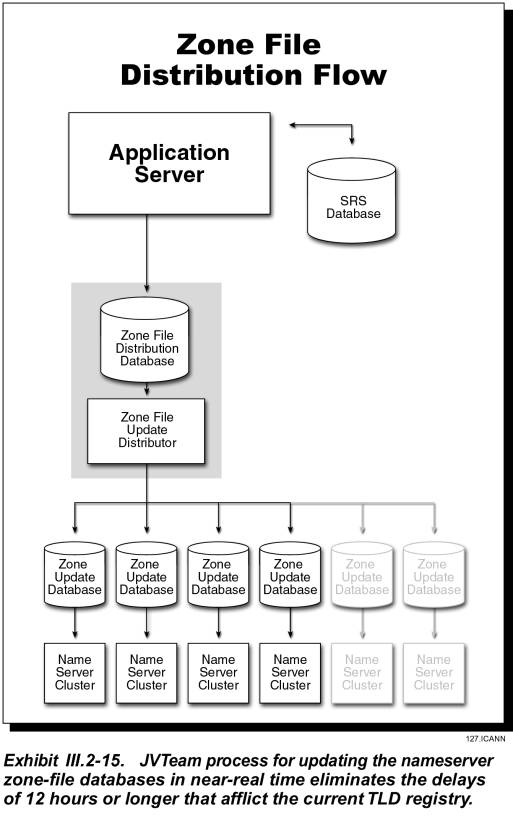

Providing Real-Time Responsiveness—JVTeam will implement near-real-time updates to the zone files and the Whois database. The benefit to the Internet community is the elimination of delay-caused confusion over domain name registrations.

Eliminating Bottlenecks—JVTeam’s high-availability cluster architecture provides scalable processing throughput, dynamic load balancing between the two data centers, and multiple high-speed Internet connections. The benefit to the Internet registrar community is the elimination of registry bottlenecks.

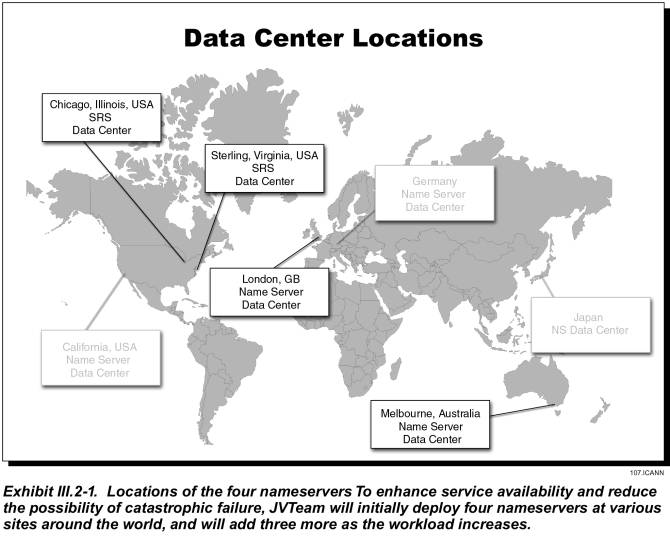

JVTeam’s proposed TLD technical solution is based on our experience with the Number Portability Registration Center (NPRC) and with .com.au registry operations. Our technical solution consists of co-active registry data centers and nameserver data centers, geographically dispersed to provide protection against natural and man-made disasters. Section III.2.1 provides an overview of our proposed facilities and systems; subsequent sections expand this overview into a comprehensive technical plan for registry operations.

III.2.1 General Description Of Proposed Facilities And Systems (RFP Section D15.2.1)

JVTeam proposes world-class redundant Shared Registration System (SRS) Data Centers in Sterling, Virginia and Chicago, Illinois and four nameserver sites in Phase I that will provide the facilities and infrastructure to host the new TLD Registry. Our facility locations were selected to give wide geographic separation and provide resilience against natural and man-made disaster scenarios. The benefit to ICANN and the Internet community is reliable non-stop TLD registry operations.

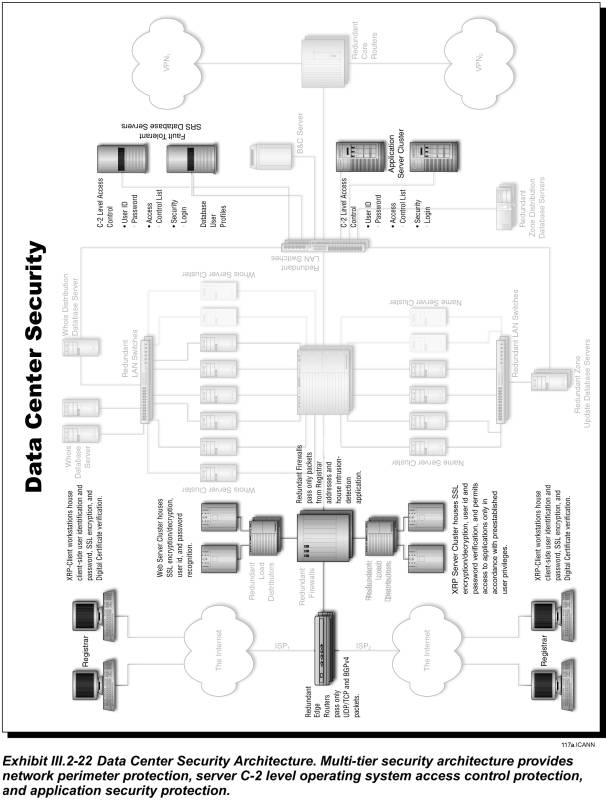

ICANN’s priorities for the new TLD registries are to provide a world-class level of service that preserves both the stability of the Internet and the security and reliability of the existing domain name system. JVTeam has developed a fault-tolerant architecture, including redundant facilities, high-availability cluster server architectures, fault-tolerant database technology, and redundant, alternately routed network connectivity, that supports mission-critical service availability now. The Internet community needs to be able to depend on the Internet as a stable, highly available infrastructure for worldwide collaboration and commerce.

In the subsection that follows we describe where the JVTeam facilities are located and provide a functional description and physical description of the Shared Registration System (SRS) data center and the nameserver sites. In subsequent subsections we provide a detailed system description of each of the systems residing within these facilities.

III.2.1.1 Registry Facilities Site Description

This section describes JVTeam’s proposed TLD Registry architecture consisting of redundant SRS data centers and multiple nameserver sites to provide a seamless, responsive, and reliable registry service to registrars and Internet users. As shown in Exhibit III.2-1 our TLD registry redundant SRS and nameserver data center sites are geographically dispersed worldwide and interconnected with a Virtual Private Network (VPN) to provide worldwide coverage and protect against natural and man-made disasters and other contingencies. The facility locations are provided in the following table.

| Site Name | Site Address |
| --- | --- |
| Four Data Centers in Phase I | |
| JVTeam SRS Data Center and Nameserver Site | 200 South Wacker, Suite 3400 |
| JVTeam SRS Data Center and Nameserver Site | 45980 Center Oak Plaza |
| JVTeam Nameserver Site | Melbourne |
| JVTeam Nameserver Site | London |
| Planned Data Centers for Phase II | |
| JVTeam Nameserver Site | Japan |
| JVTeam Nameserver Site | California |
| JVTeam Nameserver Site | Germany |

Our proposed TLD Registry Service Level Agreement (SLA) provides service levels commensurate with mission critical services for availability, outages, response time, and disaster recovery. Highlights of the SLA include:

· SRS Service Availability is guaranteed at 99.95%, with a design goal of 99.99% per year.

· Nameserver Service Availability is guaranteed at 99.999%
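To make these percentages concrete, the short sketch below converts each availability figure into the maximum downtime it allows per year. It assumes all three figures are measured over a 365-day year (the source states "per year" only for the SRS design goal), and the function name is illustrative. Under that assumption, 99.95% allows about 262.8 minutes (roughly 4.4 hours) of downtime per year, 99.99% about 52.6 minutes, and 99.999% about 5.3 minutes.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the maximum yearly downtime, in minutes, for a given availability."""
    unavailable_fraction = (100.0 - availability_percent) / 100.0
    return unavailable_fraction * MINUTES_PER_YEAR


if __name__ == "__main__":
    sla_levels = [
        ("SRS guaranteed", 99.95),
        ("SRS design goal", 99.99),
        ("Nameserver guaranteed", 99.999),
    ]
    for label, percent in sla_levels:
        minutes = allowed_downtime_minutes(percent)
        print(f"{label}: {percent}% allows {minutes:.1f} minutes of downtime per year")
```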

III.2.1.1.1 Shared Registration System (SRS) Data Center Functional Description

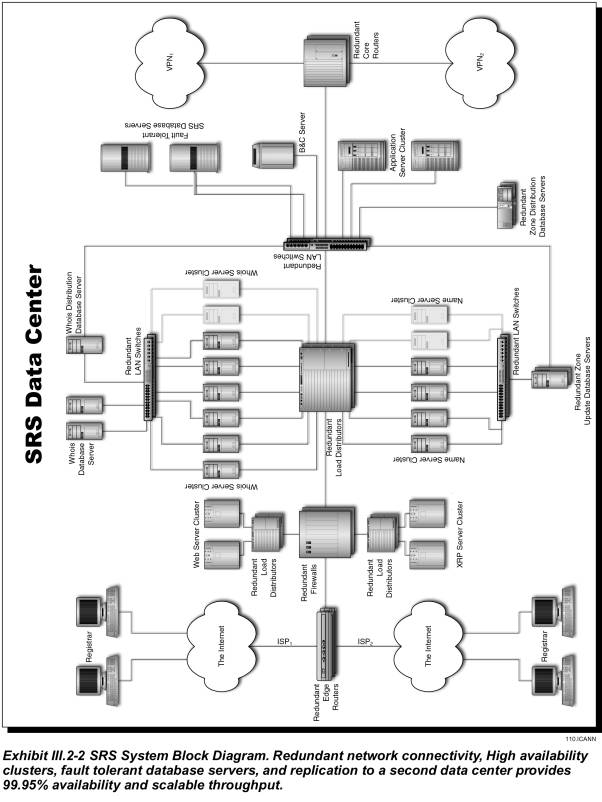

High availability registry services can only be provided from facilities that have been designed and built specifically for such a critical operation. The JVTeam SRS data centers incorporate redundant uninterruptible power supplies; high-capacity heating, ventilation, and air conditioning; fire suppression; physical security; C2 level information system security; firewalls with intrusion detection; redundant, high availability cluster technology; and redundant network and telecommunications architectures. When selecting the sites, we considered their inherent resistance to natural and man-made disasters. The functional block diagram of our SRS data center is depicted in Exhibit III.2-2. As can be seen from the referenced exhibit the registry SRS data center is highly redundant and designed for no single point of failure.

Each SRS data center facility provides the functions listed in the system function directory table below. Descriptions of the SRS systems providing these functions are provided in the next subsection.

SHARED REGISTRATION SYSTEM (SRS) FUNCTION DIRECTORY

| System Function | Functional Description |
| --- | --- |
| Web Server | High-capacity Web Servers provide secure web services and information dissemination that is outside the scope of the XRP protocol. They contain a registry home page to enable registrars to sign in and inquire about account status, get downloads and whitepapers, access frequently asked questions, obtain self-help support, or submit a trouble ticket to the TLD Registry Help Desk. |
| Protocol (XRP) Servers | XRP transactions received from registrars undergo front-end processing by the XRP server, which manages the XRP session-level dialog, performs session-level security processing, and strips out transaction records. These XRP transaction records are sent to the SRS data center application server cluster for security authentication and business logic processing. |
| Application Servers | Processing of the XRP applications business logic, user authentication, posting of inserts, deletes, and updates to the master database, and interfaces to authentication, billing and collections, backup, and system/network administration. |
| SRS Database Servers | The SRS database maintains registry data in a multi-threaded, multi-session database for building data-driven publish and subscribe event notifications and replication to downstream data marts such as the Whois, Zone, and Billing and Collection services. |
| Whois Distribution Database | The Whois Distribution Database is dynamically updated from the SRS database and propagates the information to the Whois Database clusters. |
| Whois Database Clusters | The Whois Database is dynamically updated from the Whois Distribution Database and sits behind the Whois Server clusters. The Whois Database clusters are used to look up records that are not cached by the Whois Servers. |
| Whois Servers | The load-balanced Whois Server clusters receive a high volume of queries from Registrants and Internet users. The Whois service returns information about Registrars, domain names, nameservers, IP addresses, and the associated contacts. |
| Zone Distribution Database | The Zone Distribution Database is dynamically updated from the registry SRS database and propagated to the nameserver sites located worldwide. It contains domain names, their associated nameserver names, and the IP addresses for those nameservers. |
| Billing and Collection | A commercial off-the-shelf system is customized for registry-specific eCommerce billing and collection functions that are integrated with XRP transaction processing, the master database, and a secure web server. The system maintains each registrar’s account information by domain name and provides status reports on demand. |
| Authentication Services | The Authentication Service uses commercial X.509 certificates to authenticate the identity of entities interacting with the SRS. |
| Backup Server | Provides backup and restore of the files on each of the various cluster servers and database servers, and provides a shared robotic tape library facility for central backup and recovery. |
| Systems/Network Management Console | Provides system administration and simple network management protocol (SNMP) monitoring of the network, LAN-based servers, cluster servers, network components, and key enterprise applications including the XRP, Web, Whois, Zone, Billing and Collections, Backup/Restore, and database applications. Provides threshold and fault event notification and collects performance statistics. |
| Applications Administration Workstations | Provide a client/server GUI for configuration of SRS applications including XRP, Web, Billing and Collection, Database, Authentication, Whois, Zone, etc. |
| Building LAN | Provides dual redundant switched 1000BaseTX/FX Ethernet LAN-based connectivity for all network devices in the data center. |
| Firewall | Protects the building LAN from the insecure Internet via a Firewall that provides policy-based IP filtering and network-based intrusion detection services to protect the system from Internet hacking and denial-of-service attacks. |
| Load Balancers | Dynamic Feedback Protocol (DFP)-based load balancing of TCP/IP traffic in a server cluster, including common algorithms such as least connections, weighted least connections, round robin, and weighted round robin. |
| Telecommunications Access | Dual-homed access links to Internet Service Providers (ISPs) and Virtual Private Network (VPN) services are used for connectivity to the Internet and the JVTeam Registry Management Network. |
| Central Help Desk | A single-point-of-contact telephone and Internet-Web help desk provides multi-tier technical support to registrars on technical issues surrounding the SRS. |

III.2.1.1.2 Nameserver Sites Functional Description

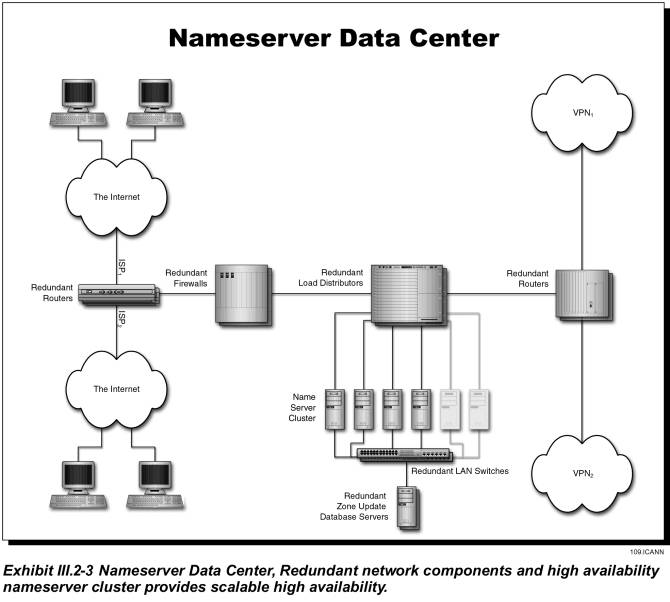

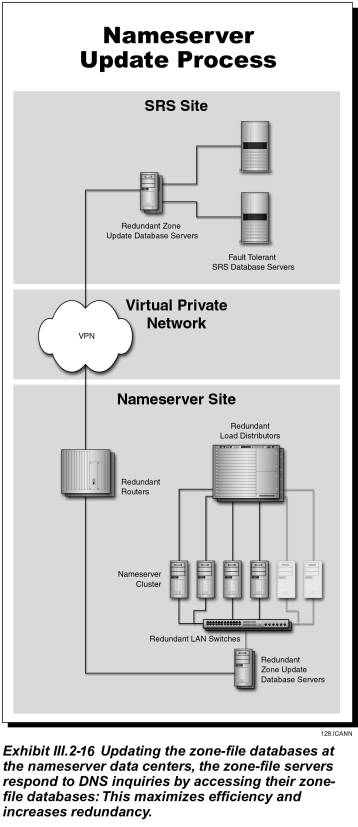

As discussed above, two nameserver sites are co-located at our SRS Data Centers, and the remaining two Phase I nameserver sites are geographically dispersed, with dual-homed Internet and VPN local access telecommunications links to provide resilience and disaster recovery. The two additional nameserver sites will be installed in data centers in Melbourne, Australia and London, England. In Phase II we plan to install additional nameserver data centers in Japan, California, and Germany if required to handle DNS query load. The functional block diagram of our nameserver sites is depicted in Exhibit III.2-3. As can be seen from the exhibit, the nameserver sites are configured to be remotely managed and operated “lights out”. The hardware configuration is highly redundant and designed for no single point of failure.

The following function directory table lists the nameserver functions. Descriptions of the systems providing these functions are provided in the next subsection.

NAMESERVER FUNCTION DIRECTORY

| System Function | Functional Description |
| --- | --- |
| Zone Update Database | The SRS Zone Distribution Database is propagated to the Zone Update Database Servers at the nameserver sites located worldwide. Information propagated includes domain names, their associated nameserver names, and the IP addresses for those nameservers. |
| Nameserver | The nameserver handles resolution of TLD domain names to their associated nameserver names and to the IP addresses of those nameservers. The nameservers are dynamically updated from the Zone Update Database. Updates are sent over the VPN Registry Management Network. |
| Building LAN | Provides dual redundant switched 1000BaseTX Ethernet LAN-based connectivity for all network devices in the data center. |
| Firewall | Protects the building LAN from the insecure Internet via a Firewall that provides policy-based IP filtering and network-based intrusion detection services to protect the system from Internet hacking and denial-of-service attacks. |
| Load Balancers | Dynamic Feedback Protocol (DFP)-based load balancing of TCP/IP traffic in a server cluster, including common algorithms such as least connections, weighted least connections, round robin, and weighted round robin. |
| Telecommunications Access | Dual-homed access links to Internet Service Providers (ISPs) and Virtual Private Network (VPN) services are used for connectivity to the Internet and the JVTeam Registry Management Network. |

III.2.1.1.3 SRS Data Center and Nameserver Buildings

Each JVTeam data center facility is located in a modern, fire-resistant building that offers inherent structural protection from such natural and man-made disasters as hurricanes, earthquakes, and civil disorder. Sites are not located within a 100-year flood plain. Facilities are protected by a public fire department, and have their internal fire-detection systems connected directly to the fire department.

Data centers are protected from fire by the sprinkler systems of the buildings that house them. Furthermore, each equipment room is protected by a pre-action fire-suppression system that uses Inergen gas as an extinguishing agent.

The environmental factors at the SRS Data Center and nameserver sites are listed in the following table.

| Environmental Factor | Description |
| --- | --- |
| Heating, ventilation, and air conditioning | Dual redundant HVAC units control temperature and humidity. Either unit will maintain the required environment. |
| Lighting | 2x2-foot ceiling-mounted fluorescent fixtures |
| Control of static | All equipment-mounting racks are grounded to the building’s system, and are equipped with grounding straps that employees wear whenever they work on the equipment. |
| Primary electrical power | 208-volt, 700-amp service distributed through four power panels |
| Backup power supply | 30 minutes of 130-kVA UPS power; 1000-kVA generator (SRS data center); 250-kVA generator (nameserver data center) |
| Grounding | All machines are powered by grounded electrical service; a 12-gauge cable under the equipment-room floor connects all equipment racks to the building’s electrical-grounding network |

Building Security

In addition to providing physical security by protecting buildings with security guards, closed circuit TV surveillance video cameras, and intrusion detection systems, JVTeam vigilantly controls physical access to our facilities. Employees must present badges to gain entrance, and must wear their badges at all times while in the facility. Visitors must sign in to gain entrance. If the purpose of their visit is found to be valid, they are issued a temporary badge; otherwise, they are denied entrance. At all times while they are in the facility, visitors must display their badges and must be escorted by a JVTeam employee. Sign-in books are maintained for a period of one year.

Security Personnel

On-site security personnel are on duty 24 hours a day, 7 days a week to monitor the images from closed-circuit television cameras placed strategically throughout the facilities. Security personnel are stationed at each building-access point throughout normal working hours; at all other times (6:30pm to 6:30am and all day on weekends and major holidays), individuals must use the proper key cards to gain access to the buildings. Further, any room housing sensitive data or equipment is equipped with a self-closing door that can be opened only by individuals who activate a palm-print reader. Senior facility managers establish the rights of employees to access individual rooms, and ensure that each reader is programmed to pass only those authorized individuals. The palm readers compile and maintain a record of those individuals who enter controlled rooms.

III.2.1.2 Shared Registration System Descriptions

This section provides system descriptions of the JVTeam SRS Data Center site and the Nameserver Data Centers. We provide brief system descriptions and block diagrams of each functional system within the two sites and their network connectivity. The central features of the JVTeam registry system architecture are as follows:

· Co-active redundant data centers, geographically dispersed, provide mission-critical service availability through two-way database replication between the centers.

· Nameserver sites are designed with full redundancy, automatic load distribution, and remote management for “lights out” operation.

· A Virtual Private Network to provide a reliable, secure management network and dual homed connectivity between the data centers and the nameserver sites.

· Each SRS data center and nameserver site uses high availability cluster technology for flexibility, scalability, and high reliability.

· Registry systems are sized initially to handle the projected workload but can grow incrementally to accommodate workload beyond the current registry operations.

· The registry database uses fault tolerant server architecture and is designed for fully redundant operations with synchronous replication between the primary and secondary.

JVTeam is proposing moderate-level, mid-level, and high-end cluster server platforms for installation at each site. The servers are selected for applications depending on the requirements, storage capacity, throughput, interoperability, availability, and level of security. These server platform characteristics are summarized in the following table.

| Platform | Features | Application |
| --- | --- | --- |
| Moderate-level Intel Server Clusters | Rack-mounted Intel 700 MHz, 32-bit, 2 to 6-way SMP CPUs with 8 GB of ECC memory, CD-ROM, four hot-swap disk drives (9-36 GB each), redundant hot-swappable power supplies, dual-attach 100BaseT Ethernet adapter, clustering and event management software for remote management. Microsoft® Windows NT® 4.0, Windows® 2000; Red Hat Linux 6.1, C-2 Controlled Access protection security | Nameserver Cluster; Whois Server Cluster; Backup Server; Network Management Server; Update Servers (Zone/Whois) |
| Mid-level RISC Server Clusters | Rack-mounted RISC 550 MHz 2 to 8-way SMP, 64-bit CPUs, 32 GB ECC RAM, 72 GB internal disk capacity, 71 TB external RAID, redundant hot-swappable power supplies, dual-attach 1000BaseTX/FX Ethernet adapter, clustering and event management software for remote management. Unix 64-bit operating system with C-2 Controlled Access protection security | XRP Server; Web Server; Application Server Cluster; Billing & Collection Server; Authentication Server; Whois Database Server |
| High-End RISC Server Cluster | RISC 550 MHz CPU, 64-bit 2 to 32-way cross-bar SMP with 8x8 non-blocking multi-ported crossbar, 32 GB ECC RAM, 240 MB/sec channel bandwidth, 288 GB internal mass storage, 50 TB external RAID storage, redundant hot-swappable power supplies, dual-attach 1000BaseTX/FX Ethernet adapter, clustering and event management software for remote management. Unix 64-bit operating system with C-2 Controlled Access protection security | Fault Tolerant Server for database system |

III.2.1.2.1 SRS Data Center System Descriptions

As previously shown in Exhibit III.2-2 the SRS data centers provide co-active fully redundant system configurations with two-way replication over the high speed VPN Registry Management Network, a co-located complete nameserver, and dual-homed connectivity to the Internet Service Providers. Descriptions of each of the systems in the SRS Data Center site are as follows.

XRP Server Cluster

XRP transactions received from registrars over the Internet undergo front-end processing by the XRP Server, which manages the XRP session-level dialog, performs session-level security processing, and strips out the transaction records. These XRP transaction records are sent to the SRS data center application server cluster for security authentication and business logic processing. The XRP server is a mid-level RISC SMP machine with local disk storage. It off-loads front-end XRP processing, including the extensive communication protocol processing, session management, and SSL encryption/decryption, from the application servers. The XRP server strips the fields out of the XML document transaction and builds XRP binary transaction packets that are sent to the application server for initial security authentication and logon with user ID and password. Once the user is authenticated, the session is active and the XRP application server performs all business logic processing, billing, collection, and database operations.
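The field-stripping step can be illustrated with a minimal sketch. The XRP message format is not defined in this section, so everything specific here is a hypothetical assumption made only for illustration: the XML element names, the command codes, and the binary layout (a one-byte command code followed by two length-prefixed ASCII fields).

```python
import struct
import xml.etree.ElementTree as ET

# Hypothetical command codes; the real XRP command set is not given in this section.
COMMAND_CODES = {"add-domain": 1, "delete-domain": 2}


def xml_to_packet(xml_text: str) -> bytes:
    """Strip the fields out of an XML transaction and pack them into a binary record."""
    root = ET.fromstring(xml_text)
    command = COMMAND_CODES[root.attrib["command"]]
    registrar = root.findtext("registrar").encode("ascii")
    domain = root.findtext("domain").encode("ascii")
    # Assumed layout: 1-byte command, then two fields each prefixed by a 1-byte length.
    layout = f"!BB{len(registrar)}sB{len(domain)}s"
    return struct.pack(layout, command, len(registrar), registrar, len(domain), domain)


def packet_to_fields(packet: bytes) -> tuple[int, str, str]:
    """Decode a packet built by xml_to_packet, as an application server might."""
    command, registrar_len = struct.unpack_from("!BB", packet, 0)
    registrar = packet[2:2 + registrar_len].decode("ascii")
    domain_len = packet[2 + registrar_len]
    domain_start = 3 + registrar_len
    domain = packet[domain_start:domain_start + domain_len].decode("ascii")
    return command, registrar, domain


if __name__ == "__main__":
    sample = (
        '<transaction command="add-domain">'
        "<registrar>reg-42</registrar><domain>example.tld</domain>"
        "</transaction>"
    )
    print(packet_to_fields(xml_to_packet(sample)))
```

Doing the XML parsing once at the front end means the application servers only handle compact fixed-layout records, which is the off-loading benefit the paragraph describes.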

Nameserver

A complete nameserver for DNS queries is co-located in each SRS data center site. As previously shown in Exhibit III.2-3 the nameserver consists of redundant Internet Service Provider (ISP) and Virtual Private Network (VPN) local access links to provide alternate routed connectivity to Internet users and JVTeam’s Registry Management Network. Redundant Internet Firewalls provide policy-based IP filtering to protect our internal building LAN from intruders and hackers.

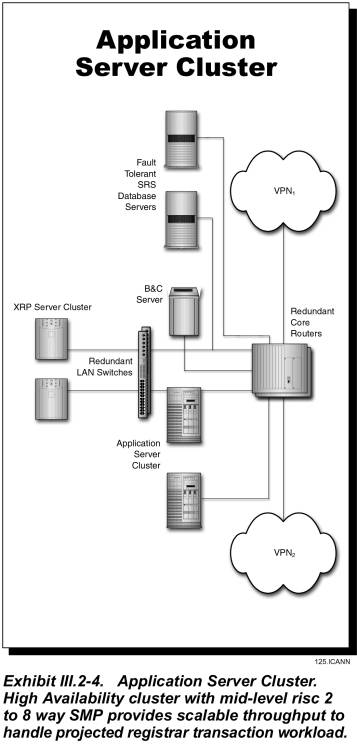

Application Server Cluster

The application server cluster is a high availability multiple computer cluster. Each computer within the cluster is a mid-level processor with its own CPU, RAID disk drives, and dual LAN connections. Processor nodes used in the clusters are RISC symmetric multiprocessor (SMP) architectures scalable in configurations from 2 to 8-way with the processing and storage capacity for very large applications. As depicted in Exhibit III.2-4, the application server cluster is designed to handle the registrar transaction workload and provides the business logic processing applications and interfaces to the authentication server, SRS database, and billing and collection system. The application server cluster is front-ended with a TCP/IP load balancer to equitably distribute the processing load across the cluster processors. The cluster manager software monitors hardware and software components, detects failures, and responds by re-allocating resources to support applications processing. The process of detecting a failure and restoring the application service is completely automatic—no operator intervention is needed.

Fault Tolerant Database Server

The database server consists of two identical Fault-tolerant RISC systems that are designed for high volume on-line transaction-processing (OLTP) database applications. Each server contains high-end RISC processors scalable in configurations from 2 to 32-way. A crossbar-based symmetric multiprocessor (SMP) memory subsystem is capable of supporting the up to 32 GB of memory needed to maintain high OLTP transaction workloads. The storage subsystem supports up to 288 GB of internal RAID storage and up to 50 TB of external RAID storage. The database management software is based on a parallel database architecture with a fault tolerant server option capable of maintaining 24 x 7 availability. The Fault-Tolerant Server supports high availability operations by implementing synchronous replication. The database enables transparent database fail-over without any changes to application code or the operating system. Clients connecting to a replicated database are automatically and transparently connected to the replicated pair of databases. The database replication feature enables maintaining geographically separated data services for multiple sites over a WAN to provide disaster recovery.
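The synchronous replication and fail-over behavior described above can be sketched as follows. This is a simplified in-memory model, not the database product's actual mechanism: the class and method names are hypothetical, and the choice to reject a write when the secondary is unreachable is an assumption (a production system might instead continue on a single server and resynchronize later).

```python
_MISSING = object()  # sentinel meaning "key did not exist before the write"


class Replica:
    """In-memory stand-in for one database server in the replicated pair."""

    def __init__(self, name):
        self.name = name
        self.rows = {}
        self.online = True

    def apply(self, key, value):
        if not self.online:
            raise ConnectionError(f"{self.name} is unreachable")
        self.rows[key] = value


class ReplicatedPair:
    """Writes are acknowledged only after both replicas have applied them."""

    def __init__(self, primary, secondary):
        self.primary = primary
        self.secondary = secondary

    def write(self, key, value):
        previous = self.primary.rows.get(key, _MISSING)
        self.primary.apply(key, value)
        try:
            self.secondary.apply(key, value)
        except ConnectionError:
            # Undo the primary write so the two copies never diverge.
            if previous is _MISSING:
                del self.primary.rows[key]
            else:
                self.primary.rows[key] = previous
            raise

    def read(self, key):
        # Transparent fail-over: use the first replica that is reachable.
        for replica in (self.primary, self.secondary):
            if replica.online:
                return replica.rows.get(key)
        raise ConnectionError("no replica reachable")
```

Because every acknowledged write exists on both servers, a client can be redirected to the surviving server after a failure without losing committed data.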

A multi-session, multi-threaded server and dual cache architecture (client/server) provides exceptionally high throughput and fast access to stored objects. A powerful database-driven publish and subscribe event notification system enables applications such as Whois or Zone Distribution to subscribe to a specific SRS database activity, for example, a domain name insert. When the domain name insert occurs, an event is generated by the database to be handled as a dynamic update to the Whois and Zone distribution servers.
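A minimal sketch of this publish and subscribe pattern is shown below. It is an in-process illustration only; the event name `domain.insert`, the class names, and the handler functions are hypothetical and do not represent the database product's notification API.

```python
from collections import defaultdict


class EventBus:
    """Routes database events to every handler subscribed to that event type."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload):
        for handler in self._subscribers.get(event_type, []):
            handler(payload)


class SrsDatabase:
    """Stand-in for the SRS database: each insert generates an event."""

    def __init__(self, bus):
        self.bus = bus
        self.domains = {}

    def insert_domain(self, name, nameservers):
        self.domains[name] = list(nameservers)
        self.bus.publish("domain.insert", {"name": name, "nameservers": list(nameservers)})


# Downstream stores, standing in for the Whois and Zone distribution databases.
whois_distribution = {}
zone_distribution = {}


def update_whois(event):
    whois_distribution[event["name"]] = {"nameservers": event["nameservers"]}


def update_zone(event):
    zone_distribution[event["name"]] = event["nameservers"]


if __name__ == "__main__":
    bus = EventBus()
    bus.subscribe("domain.insert", update_whois)
    bus.subscribe("domain.insert", update_zone)
    SrsDatabase(bus).insert_domain("example.tld", ["ns1.example.tld", "ns2.example.tld"])
    print(whois_distribution, zone_distribution)
```

The SRS database does not need to know which downstream services exist; adding another subscriber, such as billing, requires no change to the insert path.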

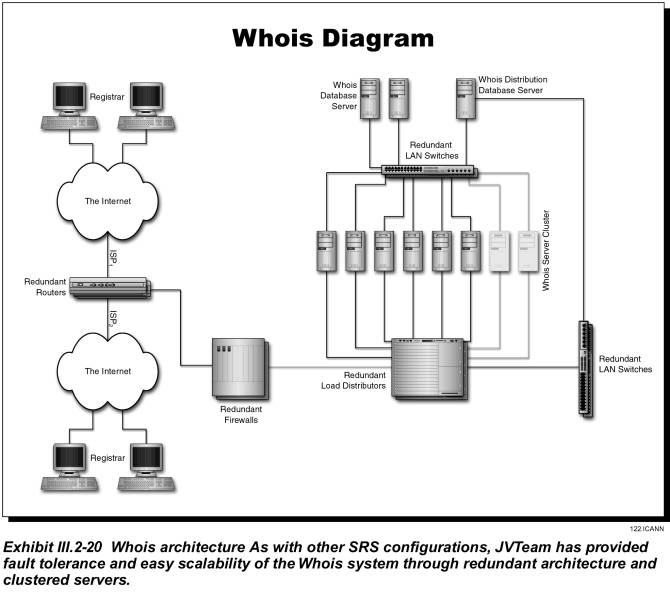

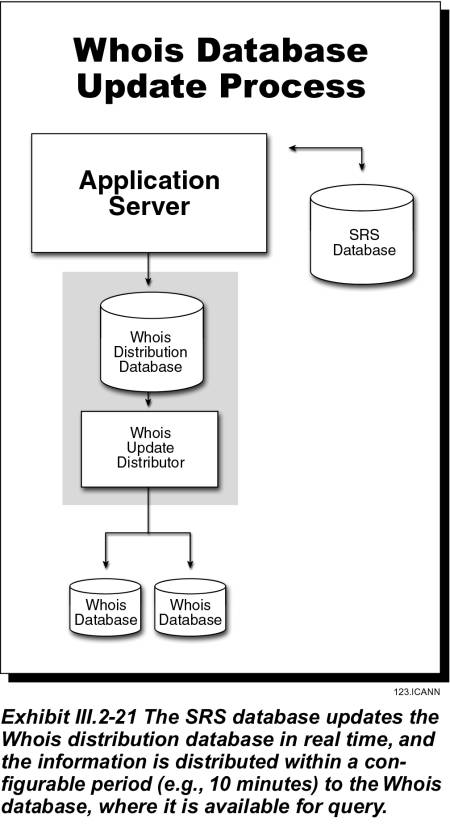

Whois Distribution Database

Certain SRS database events such as a domain name insert, domain name delete, or domain name change, generate a notification to subscriber databases such as the Whois Distribution Database. Modifications to the Whois Distribution Database are replicated to the Whois Database Clusters.

Whois Database

The Whois architecture gives the flexibility to deploy the Whois database to any number of JVTeam Data Centers. In the initial phase the Whois infrastructure will be deployed to the two SRS Data Centers. In the future, depending on the load placed on the initial two Data Centers, additional infrastructure can be deployed to any of the nameserver Data Centers managed by JVTeam.

Each Whois Database receives replicated updates from the Whois Distribution Database. The initial Whois Database will consist of two mid-level RISC database servers configured in a high availability cluster with RAID storage and from 2 to 8-way SMP processors. Since data is cached in the Whois Servers, the Whois Database is hit only when a Whois Server has not cached a request in memory.
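The caching relationship between a Whois Server and the Whois Database can be sketched as a read-through cache. The source does not say how the servers choose which records to keep, so the least-recently-used eviction policy, the `max_cached` parameter, and the class name below are assumptions; refreshing cached records when the database is updated is also left out.

```python
from collections import OrderedDict


class WhoisServer:
    """Answers from memory when possible; queries the database only on a miss."""

    def __init__(self, database, max_cached=1000):
        self.database = database      # stand-in for the Whois Database cluster
        self.cache = OrderedDict()    # ordered from least to most recently used
        self.max_cached = max_cached
        self.database_hits = 0

    def lookup(self, domain):
        if domain in self.cache:
            self.cache.move_to_end(domain)  # mark as most recently used
            return self.cache[domain]
        self.database_hits += 1
        record = self.database.get(domain)
        if record is not None:
            self.cache[domain] = record
            if len(self.cache) > self.max_cached:
                self.cache.popitem(last=False)  # evict the least recently used record
        return record


if __name__ == "__main__":
    database = {"example.tld": {"registrar": "reg-42"}}
    server = WhoisServer(database)
    server.lookup("example.tld")
    server.lookup("example.tld")
    print("database hits:", server.database_hits)
```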

Whois Server Cluster

The Whois service is available to anyone and can receive transaction volumes on the order of one billion requests per day. The cluster is a rack-mounted, Intel Pentium-based, high-availability multiple-computer cluster that maintains a separate database for domain name registrations and caches commonly requested records. Processor nodes used in the Whois cluster are moderate-level Intel Pentium SMP machines scalable in configurations from 2 to 6-way SMP with local disk storage.

The Whois database contains information about Registrars, Domain names, nameservers, IP Addresses and the contacts associated with them. This is an improvement over the current registry that provides no end-user contact information. The Whois server cluster is front-ended with a load balancer designed to distribute the load equitably to the servers in the cluster and handle extremely high volumes of queries. The load balancer tracks processor availability and maintains high query processing throughput.

Zone Distribution Database

The Zone Distribution Database is dynamically updated from the SRS database using the same technique used for the Whois Distribution Database. The Zone Distribution Database is propagated to the Zone Update Database at the nameserver sites using replication. This approach is far more responsive than the current practice for .com, .net, and .org, whose TLD zone files are updated only two times per day.

Billing and Collection Server

The Billing and Collection server is a LAN-based mid-level RISC machine in configurations scalable from 2 to 8-way SMP with the processing and storage capacity for very large enterprise applications. This server runs a commercial off the shelf customer relationship management and billing and collection system that interfaces with the SRS database.

Secure Web Server Cluster

A high capacity secure Web Server cluster is provided to enable secure web services and information dissemination that is outside the scope of the XRP protocol. It contains a registry home page to enable registrars to sign in and inquire about account status, get downloads and whitepapers, access frequently asked questions, obtain self help support, or submit a trouble ticket to the TLD Registry Help Desk. The Web Server is a mid-level RISC SMP machine with local disk storage.

Authentication Server

The authentication server is a LAN-based mid-level RISC machine scalable in configurations from 2 to 8-way SMP with local RAID storage. This server runs commercial x.509 certificate based authentication services and is used to authenticate the identity of Registrars and optionally Registrants. In addition, the authentication server supports our secure Web Server portal for Registrar Customer Service functions.

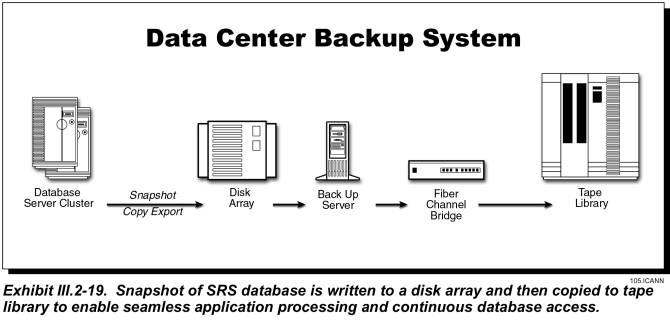

Backup Server

The backup server is an Intel Pentium-based SMP server that runs the backup and restore software to back up or restore each of the various cluster servers and database servers and provides a shared robotic tape library facility. It interfaces to the Intel server clusters and RISC server clusters over a high-speed Fiber Channel bridge. It interfaces with the high-end fault tolerant database servers via a Disk Array and the Fiber Channel bridge that interconnects to the robotic tape library. It is capable of performing remote system backup/restore of the nameservers over the VPN-based Registry Management Network.

System/Network Management Console

The system/network management console provides simple network management protocol (SNMP) monitoring of the network, LAN-based servers, cluster servers, and key enterprise applications including the XRP, Web, Whois, Zone, Billing and Collections, and database application. The server is a LAN-based moderate-level Intel Pentium-based SMP machine with local RAID disk storage and dual attach LAN interconnections.

Building LAN Backbone

The redundant switched Gigabit Ethernet building LAN backbone maintains high network availability via redundant Gigabit Ethernet switches. Devices are dual attached to each of the Gigabit switches to provide a redundant LAN architecture. The building LAN is protected from the insecure Internet via a Firewall that provides IP filtering and network-based intrusion detection services to protect the system from Internet hacking and denial-of-service attacks.

Dual-Homed Telecommunications Access

We are using dual-homed high-speed Internet local access telecommunications links to two separate ISPs. These links will be alternately routed to provide resilience against cable faults and loss of local access telecommunications links. Similarly, the telecommunications access links to our VPN provider for the Registry Management Network will be dual homed and alternately routed.

III.2.1.2.2 Nameserver Description

Two nameserver sites are co-located at our SRS Data Centers and the remaining nameservers are geographically dispersed, with dual-homed Internet and VPN local access telecommunications links to provide resilience and disaster recovery. The additional zone server clusters will be installed in data centers in Melbourne, Australia and London, England. The functional block diagram of our nameserver was previously depicted in Exhibit III.2-3. As can be seen from the exhibit, the nameserver sites are configured to operate “lights out”. The hardware configuration is highly redundant and designed for no single point of failure and exceptionally high throughput. The following are the nameserver subsystem functions:

Zone Update Database

The Zone Distribution Database at the SRS Data Center is propagated to the Zone Update Database using replication. Replication takes place over the VPN Registry Management Network. The Zone Update Database is not hit when resolving DNS queries; instead the nameservers update their in-memory database from the Zone Update Database, within defined service levels.

Nameserver Cluster

The nameserver cluster handles resolution of TLD domain names to their associated nameserver names and to the IP addresses of those nameservers. The resolution service can handle in excess of 1 billion queries per day, and our load-balanced architecture allows additional servers to be added to any nameserver cluster to allow on-demand scalability.

The nameserver cluster is a high-availability, rack-mounted, multiple-computer cluster consisting of moderate-level Intel Pentium-based SMP machines configurable from 2-way to 6-way SMP, with local disk storage and dual attachment to the LAN. A TCP/IP server load balancer switch is used to distribute the load from Internet users. The server load balancer uses a dynamic feedback protocol that lets servers report their condition to the load balancer, so that traffic is not routed to over-utilized servers. The load balancer supports algorithms including least connections, weighted least connections, round robin, and weighted round robin.
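As an illustration of one of the algorithms named above, the following is a minimal sketch of weighted least connections. It is not the load balancer's actual implementation; the `Server` class, its fields, and the `healthy` flag (a stand-in for server feedback) are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    weight: int = 1        # relative capacity (assumed configuration value)
    active: int = 0        # connections currently open on this server
    healthy: bool = True   # stand-in for feedback reported by the server


def pick_server(servers):
    """Weighted least connections: choose the lowest active/weight ratio."""
    candidates = [s for s in servers if s.healthy]
    if not candidates:
        raise RuntimeError("no healthy nameserver available")
    chosen = min(candidates, key=lambda s: s.active / s.weight)
    chosen.active += 1     # the new connection now counts against the server
    return chosen
```

A server with twice the weight is allowed roughly twice as many open connections before it stops being preferred, and a server marked unhealthy receives no new traffic.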

Building LAN Backbone

A redundant switched Ethernet building LAN backbone maintains high network availability via redundant Ethernet switches. Devices are dual attached to each of the Ethernet switches to provide a redundant LAN architecture. The building LAN is protected from the Internet by a firewall that provides IP filtering and network-based intrusion detection services to guard the system against hacking and denial-of-service attacks.

A summary of the features and benefits of our TLD registry system architecture is provided in the following table.

| Feature | Benefit |
| --- | --- |
| Three classes of scalable processor configuration – moderate-level, mid-level, and high-end | Provides flexible processing power and scalability to the applications |
| Direct Access Storage up to 50 Terabytes for database applications | Unmatched storage scalability of the database |
| Switched Gigabit Ethernet Redundant building LAN architecture | High capacity LAN infrastructure with no bottlenecks |
| Full Redundancy of all critical components with no single point of failure | Zero downtime and zero impact to users |
| Dual-homed, alternate routed local access links to two separate Internet Service Providers | Maintains connectivity if one of the ISPs’ services should experience an outage |
| Dual-homed, VPN connections to the VPN service provider | Protects against digging accidents that could damage local access cables |
| Fault Tolerant parallel database architecture configured for high OLTP transaction throughput | Non-stop database services and throughput scaled to handle all registry operations out of one data center. |
| Load balancing session distribution algorithm (SDA) to intelligently and transparently distribute traffic across servers | Maximize the number of Transmission Control Protocol/Internet Protocol (TCP/IP) connections managed by a server farm. |
| Separate Whois Server cluster and datamart to process Whois transactions | Facilitates rapid response to Whois queries. |

III.2.1.3 Registry Network System Description

JVTeam is using the Internet to provide connectivity to the Registrars and a Virtual Private Network (VPN) to provide a secure Registry Management Network for communications between the SRS data centers and the nameserver sites.

III.2.1.3.1 Internet Connectivity

JVTeam estimates the peak Internet bandwidth demand at the SRS data centers to be between 5 and 10 Mbps. We will deploy two 45-Mbps T-3 local access telecommunications links at each of our data centers, enabling each to provide TLD services independently of the other. We will provision 5 Mbps of capacity on each of the T-3 links. We will therefore provision 10 Mbps into each nameserver site and have up to 90 Mbps (2 x 45 Mbps) of capacity for growth. This should be sufficient for at least two years of growth.
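The provisioning arithmetic in this paragraph can be restated as a short calculation. This is only a sketch: it takes the T-3 figure as the nominal 45 megabits per second, and the function and constant names are hypothetical.

```python
T3_LINE_RATE = 45          # nominal T-3 rate used in the text, megabits per second
LINKS_PER_SITE = 2         # two T-3 local access links per site
PROVISIONED_PER_LINK = 5   # capacity initially provisioned on each link


def site_bandwidth(links=LINKS_PER_SITE,
                   provisioned=PROVISIONED_PER_LINK,
                   line_rate=T3_LINE_RATE):
    """Return (provisioned total, physical ceiling, remaining headroom)."""
    provisioned_total = links * provisioned   # 2 x 5 = 10
    ceiling = links * line_rate               # 2 x 45 = 90
    return provisioned_total, ceiling, ceiling - provisioned_total
```

With the stated figures, the initial provisioning covers the upper end of the estimated peak demand, and the links can be turned up to the 90 ceiling without installing new circuits.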

Connectivity to each data center will be via redundant routers. For security purposes, the routers will be configured to allow only DNS (UDP/TCP) and BGP4 packets. Each router is connected to a load balancer that distributes the query load among the nameservers in that site’s cluster. These links will be alternately routed to provide resilience against cable faults and loss of local access telecommunications links. Similarly, the telecommunications access links to our VPN provider for the Registry Management Network will be dual homed and alternately routed. Redundant routers are used for both Internet and VPN access.
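The router filtering rule can be pictured as a simple allow-list. The sketch below is illustrative only and is not router configuration: it matches on destination port alone, uses the well-known ports for DNS (53) and BGP (TCP 179), and the `permit` function name is hypothetical.

```python
# Allow-list of (transport protocol, destination port) pairs.
ALLOWED = {
    ("udp", 53),    # DNS queries over UDP
    ("tcp", 53),    # DNS over TCP (large responses, zone transfers)
    ("tcp", 179),   # BGP4 peering sessions
}


def permit(protocol, dst_port):
    """Return True only for traffic on the allow-list; drop everything else."""
    return (protocol.lower(), dst_port) in ALLOWED
```

A default-deny rule of this kind means that any service not explicitly listed, such as HTTP on port 80, never reaches the nameserver LAN.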

III.2.1.3.2 VPN Registry Management Network

Each SRS Data Center is connected to each of the nameserver sites over a VPN. In addition, two ATM links connect the two SRS Data Centers. Like the Internet access, the ATM links will be delivered over a T-3 local access link. Each link will be configured with some fraction of the full 45 Mbps of bandwidth. At the nameservers, the two VPN connections will be delivered over a 1.5-Mbps T-1 local access link. The bandwidth on each of the VPN circuits will be some fraction of the full 1.5 Mbps. The VPN Registry Management Network is a secure network used for JVTeam internal registry information exchange. It handles:

· Nameserver database replication from the Zone Distribution Database to the Zone Update Database at the nameserver sites.

· Remote System/Network Management/Backup of the nameservers.

· Remote Administration of nameservers.

III.2.1.4 Registry System Application Software

Planning for the potential growth associated with domain registration and administration requires vision and a flexible design. JVTeam’s vision is to successfully conduct leading edge software engineering and product development. JVTeam’s proven record of successful development and implementation of large projects benefits ICANN by reducing technical and schedule risk.

JVTeam software components are developed using open system and software standards to facilitate cost effective application expansion and upgrade. The Registry functional design consists of a number of components representing a blend of:

· Proven software design and development methodology

· Change management and deployment process

· Proven, mission-critical-grade, third-party software products to complement the JVTeam-built software components.

III.2.1.4.1 Registry Application Components

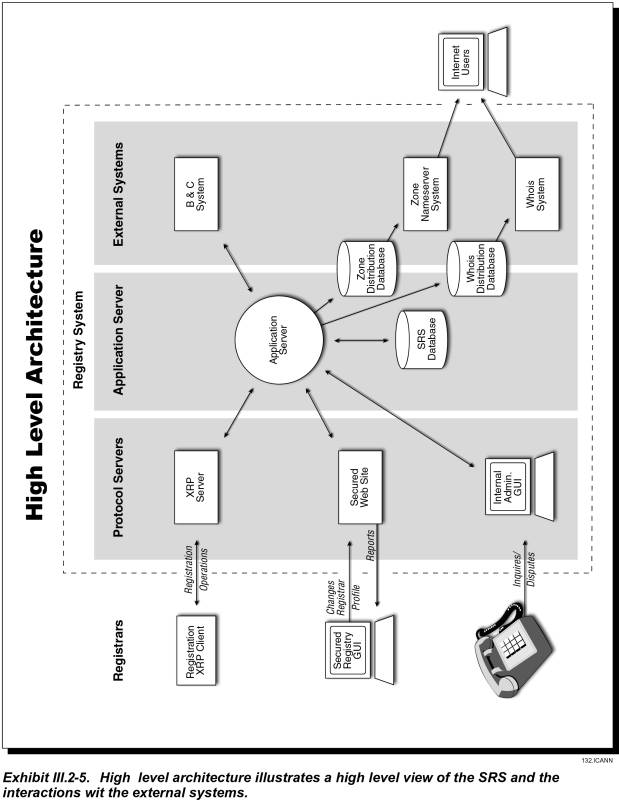

The following components, illustrated in Exhibit III.2-5, deliver the Registry application functionality:

· Protocol Adapters

· Web Server (Presentation) Component

· Application Server Component

- Process Manager

- Processing Engines

· Whois Component

· Nameserver Component

· Billing and Collections Component

· Datastore

Further information regarding these components is presented in the following sections.

Protocol Adapter Component

The protocol adapter component is the software module running on the XRP protocol servers. This component provides the standards based interface between the Registry and Registrar systems. The XRP protocol will be based on open industry standards such as:

· XML—JVTeam proposes the introduction of a new standard protocol, the eXtensible Registry Protocol (XRP), based on XML. This protocol supports system level communication between the Registrar and the Registry.

· SSL—X.509 Certificates will be used over an encrypted SSL session to authenticate Registrars (in addition to IP based and user id/password security).

The protocol adapters will receive secure, encrypted data from Registrar systems. They will convert the verbose external XML message into a compact binary internal message format, which is delivered to the application server’s process manager for processing. When processing is complete, the process manager will send the binary internal message back to the protocol adapters for conversion to the protocol appropriate for communicating with the external system (i.e., XRP).
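The conversion step described above can be sketched as follows. The XRP message format has not been published here, so the XML layout, the `OPCODES` table, and the binary framing (one-byte opcode, two-byte length, domain bytes) are all assumptions chosen for illustration.

```python
import struct
import xml.etree.ElementTree as ET

OPCODES = {"check": 1, "register": 2}   # hypothetical command codes


def xml_to_internal(xml_text):
    """Convert an external XML request into a compact binary message."""
    root = ET.fromstring(xml_text)
    opcode = OPCODES[root.get("command")]
    domain = root.findtext("domain").encode("ascii")
    # 1-byte opcode, 2-byte big-endian length, then the domain bytes
    return struct.pack("!BH", opcode, len(domain)) + domain


def internal_to_xml(message):
    """Convert the binary internal message back to the external XML form."""
    opcode, length = struct.unpack("!BH", message[:3])
    command = next(name for name, code in OPCODES.items() if code == opcode)
    root = ET.Element("xrp", command=command)
    ET.SubElement(root, "domain").text = message[3:3 + length].decode("ascii")
    return ET.tostring(root, encoding="unicode")
```

In this sketch a 55-character XML request shrinks to a 14-byte internal message, which suggests why parsing at the adapter tier keeps XML handling cost away from the core SRS system.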

The protocol adapter architecture allows JVTeam to support a simple but powerful XML-based protocol with a comprehensive security policy, while eliminating additional load that would otherwise be placed on the core SRS system.

Application Server Component

The design of the application server component is modular and flexible to support the requirements and scale to meet demands placed on the system. The application server utilizes a stateless architecture allowing scalability simply by adding additional machines to the tier. The core business logic is built into the application server component. This component manages all back-end resources and performs services such as connection pooling and monitoring.

The process engines defined in this section are some of the major functional components of the system. Process engines will be added and configured to meet the functional requirements.

Process Manager—is used to manage the different processes supported by the application. This includes starting processes in a specific order at initialization time, monitoring the health of executing processes, restarting failed processes and starting new processes to address application load requirements. The process manager mediates processing and information requests from external systems by forwarding requests to the respective process engines.
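The process manager's responsibilities (ordered start-up, health monitoring, restart, and request forwarding) can be sketched in a few lines. This is a simplified model under stated assumptions: the `Engine` class stands in for what would be separate operating-system processes, and all class and method names are hypothetical.

```python
class Engine:
    """Stand-in for a process engine; a real one would be an OS process."""

    def __init__(self, name):
        self.name = name
        self.alive = True

    def handle(self, request):
        return f"{self.name} handled {request}"


class ProcessManager:
    def __init__(self, start_order):
        self.start_order = list(start_order)   # engine names, in start-up order
        self.engines = {}
        self.restarts = 0

    def start_all(self):
        for name in self.start_order:          # honour the configured order
            self.engines[name] = Engine(name)

    def monitor(self):
        for name, engine in list(self.engines.items()):
            if not engine.alive:               # restart a failed engine
                self.engines[name] = Engine(name)
                self.restarts += 1

    def forward(self, name, request):
        """Mediate an external request by passing it to the named engine."""
        return self.engines[name].handle(request)
```

Starting additional engine instances under load would follow the same pattern as the restart path, with the manager creating a new instance rather than replacing a failed one.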

Process Engines—will perform the underlying processing steps or primitives that are involved in performing the operation. The Process Engines receive data and parameters from other application components, including Process Manager. The Process Engines access data from databases, and update the databases while processing a transaction. The primary process engines are:

· Domain Name Administration

· Registrar Administration

· Whois Administration

· Zone Administration

· Security

· Billing and Grace period Administration

· Logging, Auditing & Reporting

The functionality of the primary process engines is explained in detail in sections III.2.3 and III.2.6.

Datastore

The SRS architecture includes a fault-tolerant database supporting high availability operations by implementing synchronous replication. This enables transparent database fail-over without any changes to application code or the operating system. Clients connecting to a replicated database are automatically and transparently connected to the replicated pair of databases.

The architecture utilizes a powerful database-driven publish and subscribe event notification system enabling components such as Whois or Zone Distribution to subscribe to specific SRS events. Subscribed events cause dynamic updates to the Whois and Zone distribution servers.
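A publish and subscribe mechanism of this kind can be sketched as below. The proposal describes a database-driven system; this in-memory version only illustrates the subscription pattern, and the `EventBus` class and the event names used with it are hypothetical.

```python
from collections import defaultdict


class EventBus:
    """Minimal publish/subscribe hub keyed by event type."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type, callback):
        self._subscribers[event_type].append(callback)

    def publish(self, event_type, payload):
        """Deliver the payload to every subscriber; return how many received it."""
        for callback in self._subscribers[event_type]:
            callback(payload)
        return len(self._subscribers[event_type])
```

For example, a Whois distribution component might subscribe to both contact and nameserver changes, while a Zone distribution component subscribes only to nameserver changes, so each receives just the SRS events it needs.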

Please refer to section III.2.3 for a detailed description of the Database capabilities.

Registry Web Interface

The guiding principles for the design of the proposed Registry Web Interface are flexibility and security. The Registry web interface will be accessible over the Internet, using a client web browser and will be served up by the Registry web server clusters at the SRS Data Centers. The secure web servers provide front-end HTTPS (secure web) protocol handling with client browsers accessible over the Internet.

Some of the key features of the Registry Web Interface Architecture include:

· Extensible Design

· Open, non-proprietary, standards-based technology (HTTP + SSL).

· Intuitive user interface

· Secure access

· On-line help

· Ease of navigation

· Data entry type checking (before forwarding requests to the application server tier)

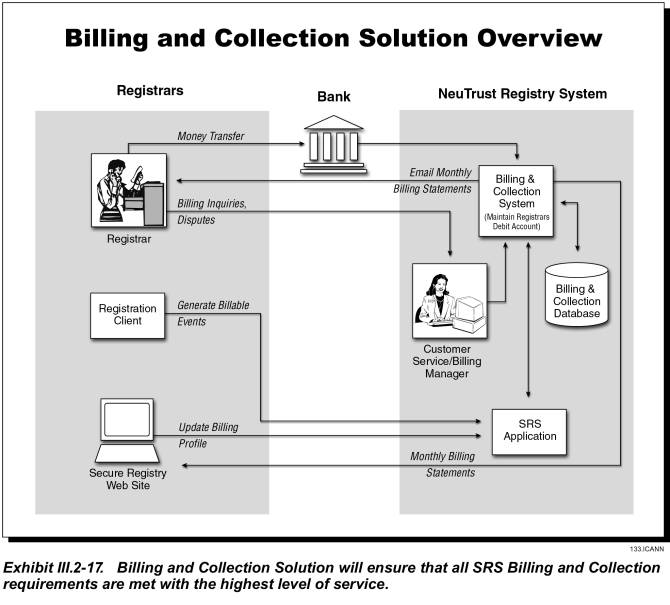

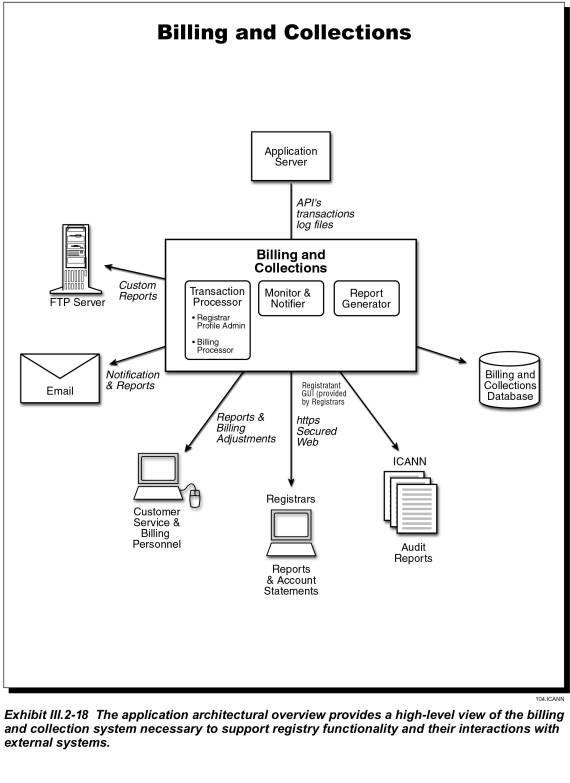

Billing and Collection System

JVTeam will combine our customized B&C methodology, which has proven successful in the past, with an off-the-shelf accounts receivable product to provide a comprehensive, secure, high-quality, scalable, and web-accessible B&C service. The major components of the system will include:

· Database

· Transaction Processor

· Monitor & Notifier

· Report Generator

Please refer to section III.2.6 for a detailed description of the Billing and Collection system along with the interfaces, security and access privileges.

Nameserver Component

Zone related modifications to the SRS Database cause equivalent changes to the subscribing Zone Distribution Database. Updates to the Zone Distribution Database are replicated out to the Zone Update Databases at each nameserver Data Center. Machines in the nameserver Cluster reconcile their in-memory database with the Zone Update Database at regular intervals defined in the service level agreement. The entire Zone is held memory resident.
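The reconciliation step can be sketched as follows, assuming (for illustration only) that the Zone Update Database exposes changes as a log of numbered entries. The `Nameserver` class, the entry layout, and the operation names are hypothetical.

```python
class Nameserver:
    def __init__(self):
        self.zone = {}         # domain -> nameserver names, held entirely in memory
        self.last_serial = 0   # highest change number already applied

    def reconcile(self, update_log):
        """Apply entries (serial, op, domain, nameservers) newer than last_serial."""
        for serial, op, domain, nameservers in sorted(update_log, key=lambda e: e[0]):
            if serial <= self.last_serial:
                continue                       # already applied on an earlier pass
            if op == "delete":
                self.zone.pop(domain, None)
            else:
                self.zone[domain] = list(nameservers)
            self.last_serial = serial

    def resolve(self, domain):
        """Answer from memory only; the update database is never queried here."""
        return self.zone.get(domain)
```

Running `reconcile` on a timer at the interval set by the service level agreement keeps DNS resolution independent of database latency, since queries read only the in-memory copy.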

Section III.2.5 explains the nameserver architecture in detail, along with the process, software, and advantages.

Whois Component

Whois related modifications to the SRS Database cause equivalent changes to the subscribing Whois Distribution Database. Updates to the Distribution Database are replicated to the Whois Database Cluster at each SRS Data Center. Machines in the Whois Server Cluster cache common requests in-memory, taking load off the Whois Database Cluster. Cached items expire after a defined time interval to ensure Whois data can be guaranteed correct within defined service levels.
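The caching behaviour described above amounts to a cache with time-based expiry. The following is a minimal sketch, not the Whois server's implementation; the `WhoisCache` class, the `lookup` callable standing in for the Whois Database Cluster, and the injectable clock are assumptions.

```python
import time


class WhoisCache:
    def __init__(self, lookup, ttl_seconds, clock=time.monotonic):
        self._lookup = lookup      # function that queries the Whois database
        self._ttl = ttl_seconds    # how long a cached answer stays valid
        self._clock = clock
        self._entries = {}         # domain -> (expires_at, record)

    def get(self, domain):
        now = self._clock()
        hit = self._entries.get(domain)
        if hit and hit[0] > now:
            return hit[1]                      # fresh entry: no database access
        record = self._lookup(domain)          # missing or expired: go to database
        self._entries[domain] = (now + self._ttl, record)
        return record
```

The time-to-live bounds how stale a cached answer can be, so choosing it no longer than the committed service level keeps cached responses within that guarantee.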

Please refer to section III.2.8 for a detailed description of the Whois capabilities.

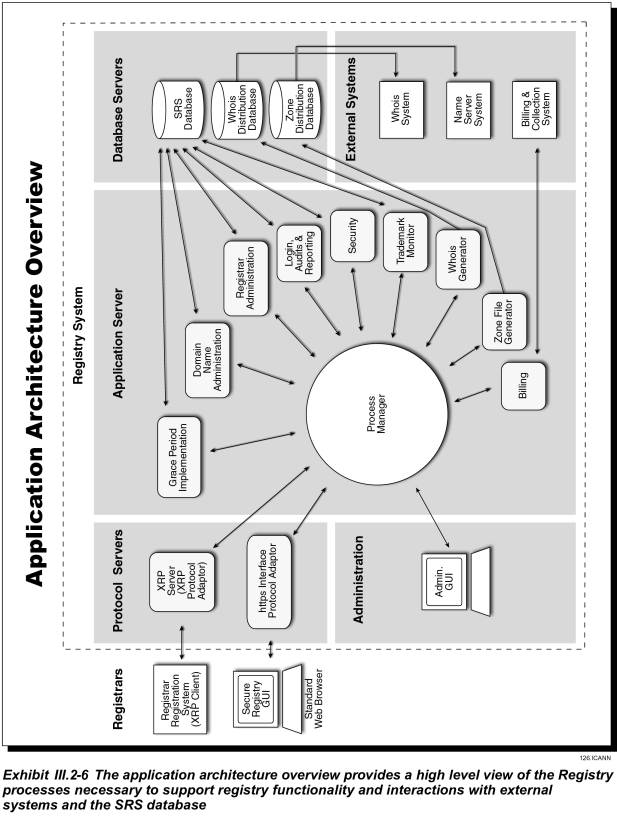

Exhibit III.2-6 provides a more detailed application architecture overview.

III.2.1.4.2 Registry Software Development Methodology

The quick time to market and the software technologies required to design and implement the registry software applications dictate software development methodologies that minimize development effort and reduce development time without sacrificing software quality. The JVTeam technical personnel are experts in developing the registry and clearinghouse protocols and software applications used in Internet domain name and phone numbering systems. The benefit to ICANN is software products that meet the functional requirements and operate reliably.

Based on our experience, JVTeam is using Rapid Application Development (RAD) methodology and Computer Aided Software Engineering (CASE) tools for registry software applications development. RAD methodology enables large applications systems to be developed and tested incrementally in planned releases consisting of alpha, beta, and full production versions. We have found that incremental development of software applications is a key success factor in fielding feature rich software applications that meet the business needs. This is because each incremental build provides a testable software product that can be demonstrated to users and stakeholders. Changes can be easily incorporated in the next build cycle and each successive build provides increased functionality until the full production release is completed, tested, and accepted.

RAD Methodology

In the RAD methodology there are five phases.

1. Business Analysis: Focus group and Joint Application Design sessions are used to document the system requirements, business process flows, business logic, and system data requirements.

2. System Design—Software specifications are developed using object oriented analysis and object oriented design CASE tools and logical data models are developed using entity relationship diagram data modeling. Meta data is developed for each data entity.

3. Architecture Design—the system hardware and software architecture is designed and documented. Then hardware and software systems specifications and configurations are developed and finalized for acquisition.

4. Implementation—the applications software is developed for the target hardware platforms and operating system environment using object oriented programming languages, database development tools, and fourth generation languages. Development test beds are built for software testing. The applications software is built and tested in increments with the functionality growing with each build from alpha to beta and to full production. The system hardware and software is installed in the planned data centers for rollout and acceptance of the applications software production release. The Carnegie Mellon University Software Engineering Institute Software Capability Maturity Model (SW-CMM) best practices are used for project management, requirements management, software configuration control, and software quality assurance.

5. Growth and Maintenance—during this phase the applications software is successively upgraded in planned build and release cycles. Software incident reports are addressed in each build and release. Maintenance releases are developed for serious software problems that cannot wait until a planned upgrade release.

Development Tools and Languages

JVTeam is using object-oriented analysis and object-oriented design CASE tools for requirements analysis and detailed software design. We use object oriented programming, database development tools, and fourth generation programming languages for software development. The following table gives examples of tools JVTeam has used in the past and will use on the Registry project.

| Development Tool/Language | Purpose |
| --- | --- |
| CASE Tools | JVTeam will utilize CASE tools such as Oracle CASE and Rational Rose. These tools provide full feature object oriented analysis and design. |
| Java, C++, Delphi, SQL | JVTeam has prior experience with, and will utilize these development languages where appropriate to implement all business logic. |
| CORBA, RMI | JVTeam has prior experience with, and will utilize these Remote object protocols. |
| Java Servlets, Java Server Pages, Cold Fusion, CGI-script, XML & XSL | JVTeam has prior experience with, and will utilize these web development technologies for building web sites and thin client applications for distribution to a wide range of users. |

III.2.2 Registry-Registrar Model and Protocol (RFP Section D15.2.2)

This section describes the numerous problems with the current Registry-Registrar model and RRP protocol, and presents JVTeam’s proposed methods for resolving them.

The current registry/registrar model and protocol is a “thin” (limited amount of data) registry serving a fat (more data) registrar. JVTeam proposes moving to a “fat registry” model, with contact and authentication details stored centrally at the Registry. Under this model, the business relationships would be unchanged: registrants would still deal with the registrar, and the registrars with the registry. The value of the proposed change is its ability to solve many of the problems currently experienced on a daily basis by both Registrants and Registrars.

As part of its fat-registry proposal, JVTeam proposes to introduce a new protocol, the eXtensible Registry Protocol (XRP), which will overcome the limitations of the current RRP protocol (RFC2832). The XRP protocol will accommodate both thin and fat registry models. We do not anticipate introducing the XRP protocol until after the “land rush” period has ended.

III.2.2.1 Problems With The Current Model and its Supporting Protocol (RRP)

The current TLD is based on a thin registry model and the RRP protocol. Problems with the system cause confusion for registrants and have added costs (and risk) to registrars because of the need for manual processing or the breakdown of automated processes. The following table lists issues with the current model and RRP and gives examples relating to these issues:

Deficiencies of the current registry/registrar model and protocol

| Issue | Result |
| --- | --- |
| Protocol not extensible | · No ability to authenticate registrants · Not designed for fat registry model · Not designed using a naturally extensible technology, such as XML |
| Protocol not complete | · Not all data exposed (e.g., registrar details and status not exposed) · No ability to query transfer status · No date/time of registration and expiration · No status on domains linked to another registrar |
| Different protocols used for Whois | · No uniform Whois standard (registrars can use web interface) · Not all registrars use Whois on Port 43 |
| No standard Whois fields | · Each registrar has implemented Whois differently. Differences include: · Some registrars have additional registrant contact information · No standards exist for technical and/or zone contact · Some registrars have one address line; others, two lines · Some registrars don’t expose all fields in the Whois |
| Different data formatting in Whois services | · Data is presented differently; i.e., Phone: 99999 or Phone Number: (999) 999 · Different ordering of fields · Different lengths of fields |
| No real-time update of Whois and zone file | · Registry updates Whois and root servers only every 12 hours · Causes confusion for Registrants, adds support cost to Registrars |
| Timing inconsistencies (when adding, deleting, transferring registrar, etc) | · Registry Whois server updated before or after Registrar database, causing inconsistency between the two · Two registrars’ databases can be updated at different times, causing inconsistency |
| No machine-readable Whois format | · No automated parsing of Whois data by non-registrar community (Need XML-based Whois format) |
| No registrar extensibility | · No provisions for registrars to add custom fields to registry database except after revising the protocol |
| No ability to broadcast events to registrars | · Registry cannot automatically notify Registrars of important events (e.g., “Transfer of Registrar” request or renaming of a name server host name); must use email · Cannot accommodate registrars’ need to add ‘listeners’ to certain important events |
| No registrant authentication | · Cannot determine whether a registrant’s “Transfer of Registrar” request is authentic. The registrar must make a subjective decision about whether the registrant is the one represented in the losing Registrar’s Whois · No standard method for authenticating deletions, changes of ownership, re-delegations, name-server maintenance, etc · TLD security sinks to the lowest common (registrar) denominator, because a registrar with poor security could perform an incorrect Transfer of Registrar, giving the registrant control of the wrong domain name. Potential for “hijacked” domain names creates huge stability problems to the Internet. |
| No rollback support for some operations | · Not all operations can be reversed by a separate counteraction (although some can: e.g., “Register domain name” can be reversed by “Cancel domain name” command within 5 days) · Operations like Registrar Transfer cannot be ‘rolled-back’ via the protocol in the case of error |
| No support for IPv6 | · Does not support important, currently deployed technology |

III.2.2.2 Features of the Fat Registry Model

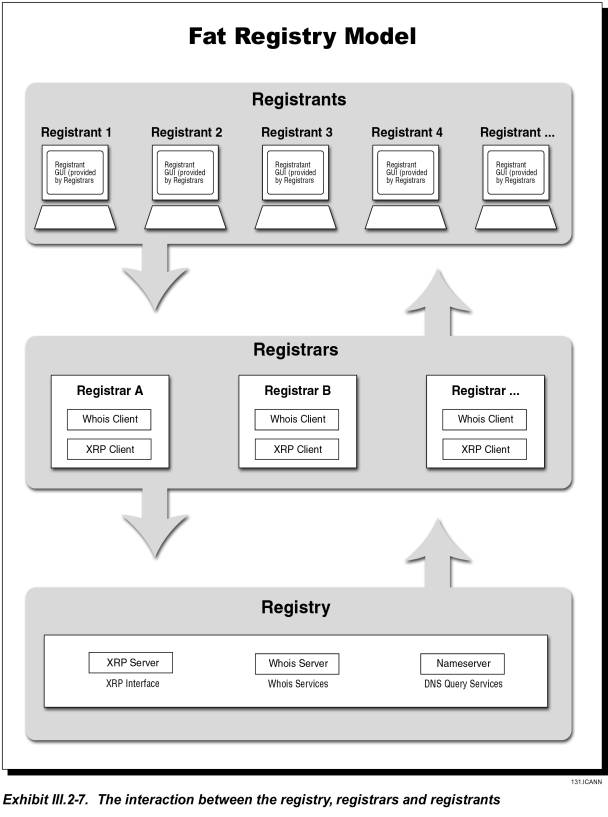

As the beginning of this proposal paragraph (III.2.2) states, JVTeam proposes deploying a “fat registry” model, with contact and authentication details stored centrally at the Registry. Exhibit III.2-7 illustrates the fat registry model.

JVTeam prepared the following list of design features for our proposed XRP protocol:

· Extensible protocol based on XML

· Support for both fat and thin registry models

· Support for centralized contact information / centralized Whois

· Standardized Whois service (same fields regardless of registrar’s web site)

· Machine readable Whois format (when specified)

· Extensible data-field support (registrars can add custom fields to Whois following standardized fields)

· Functionally complete (exposing all registry data via one interface)

· Secure

· Non-repudiation (No deniability)

· Fault tolerant (Duplicate requests have no adverse effect)

· Real-time XRP functions (check, register, etc)

· Real-time DNS and Whois updates

· Support for IPv6 IP addresses

· Standard, centralized registrant-authentication method

· Extensible registrant-authentication methods (support for digital certificates, etc)

· Simple account transfer (between registrars, using centralized authentication)

· Event broadcasting (ability for registrars to place ‘listeners’ on registry events)

· Rollback support (i.e., rollback registrar transfer; not necessarily transactional).
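One way to achieve the fault tolerance listed above, where duplicate requests have no adverse effect, is to key each request on a client-supplied transaction identifier and replay the earlier answer when it is seen again. The sketch below illustrates that general technique only; it is not taken from the XRP design, and `RegistryStub`, `txid`, and the result strings are hypothetical.

```python
class RegistryStub:
    def __init__(self):
        self.domains = {}       # domain -> sponsoring registrar
        self._responses = {}    # transaction id -> response already sent

    def register(self, txid, domain, registrar):
        if txid in self._responses:            # duplicate request: replay answer
            return self._responses[txid]
        if domain in self.domains:
            response = {"txid": txid, "result": "already-registered"}
        else:
            self.domains[domain] = registrar
            response = {"txid": txid, "result": "registered"}
        self._responses[txid] = response
        return response
```

A registrar that times out and resends the same request therefore receives the original result rather than an error or a second registration.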

JVTeam firmly believes that the industry needs a new extensible protocol that addresses all of the above points, and that the selected protocol should become the industry standard. JVTeam’s position is that there is infinite room to innovate in many other aspects of domain-registry systems, but competition at the protocol level merely fragments the domain-name-registration industry. Conversely, the industry will gain significantly in efficiency if it adopts a standard protocol that addresses the listed requirements, particularly in supporting both fat and thin Registry models.

JVTeam’s proposed XRP protocol addresses each of the above points. We will present a draft XRP to the IETF as the basis for an industry standard in Q4 2000, and will invite comments and suggestions from registrars, registries, and other concerned individuals and organizations. Rather than holding XRP as proprietary, we will undertake all reasonable measures to obtain consensus on making the proposed protocol an open industry standard.

III.2.2.3 Benefits of Proposed XRP Solution

JVTeam’s proposed XRP Solution and fat-registry model will preserve the current relationships that are familiar to both registrants and registrars. Simultaneously, they will solve the many problems with the current RRP-based model that are raising costs for registrars and distressing registrants. Nonetheless, despite the fat-registry model’s obvious advantages, we are willing to consider alternatives.

On the one hand, it is theoretically possible to retain the current thin-registry model and place more stringent technical requirements on registrars (while closely policing compliance). On the other hand, JVTeam believes that a more practical solution, and the only one that introduces true stability and domain security into the market, is moving to a fat-registry model with a new XML-based protocol that supports the many enhancements previously listed. The XRP protocol, like any new protocol, must be designed to fix all the problems with the current model and protocol.

To facilitate the transition in 2001 for current registrars, JVTeam will provide an open-source version of the registrar toolkit. This enhanced toolkit will simplify the migration efforts of registrars that currently use only the RRP toolkit.

JVTeam is well qualified to take a lead position in the process of developing and standardizing the specification for a new protocol. In preparing our proposal for building a modern, extensible protocol, we relied heavily on the extensive prior experience that Melbourne IT brought to JVTeam. Research groups at Melbourne IT have been using XML for more than two years, and have developed two XML-based domain-name-registration interfaces. Further, the company currently has XML-based interfaces in production.

III.2.3 Database Capabilities (RFP Section D15.2.3)

JVTeam will provide an enterprise-strength, fault-tolerant database system capable of managing large databases and high transaction-processing loads reliably, with scalable growth to accommodate change. The database system supports asynchronous replication of data between two co-active SRS data centers geographically dispersed. The benefit to the Internet community is reliable, stable operations and scalable transaction-processing throughput to accommodate Internet growth.

The heart of the SRS is its database systems, which provide not only simple data storage-and-retrieval capabilities, but also the following capabilities:

· Persistence—storage and random retrieval of data

· Concurrency—ability to support multiple users simultaneously

· Distribution (data replication)—maintenance of relationships across multiple databases

· Integrity—methods to ensure data is not lost or corrupted (e.g., automatic two-phase commit, physical and logical log files, roll-forward recovery)

· Availability—support for 24 x 7 x 365 operations (requires redundancy, fault tolerance, on-line maintenance, etc.)

· Scalability—unimpaired performance as the number of users, workload volume, or database size increases.

As applications architectures such as SRS become increasingly dependent on distributed client/server communications and processing, system designers must carefully plan where the bulk of the data processing occurs: on the database server, applications server, or client. Our final design will distribute the processing workload in a way that maximizes scalability and minimizes down time.

This proposal paragraph (III.2.3) is divided into three major subsections:

III.2.3.1 Functional Overview—describes the characteristics of the three primary SRS databases (i.e., size, throughput, and scalability); database procedures and functions for object creation, editing, and deleting; change notifications; transfer procedures; grace-period functions; and reporting.

III.2.3.2 Database System Description—describes the database-system components, server platforms, and scalability for the three primary databases.

III.2.3.3 Security and Access Privileges—describes the access controls for granting and denying users and administrators access to the databases.

III.2.3.1 Functional Overview

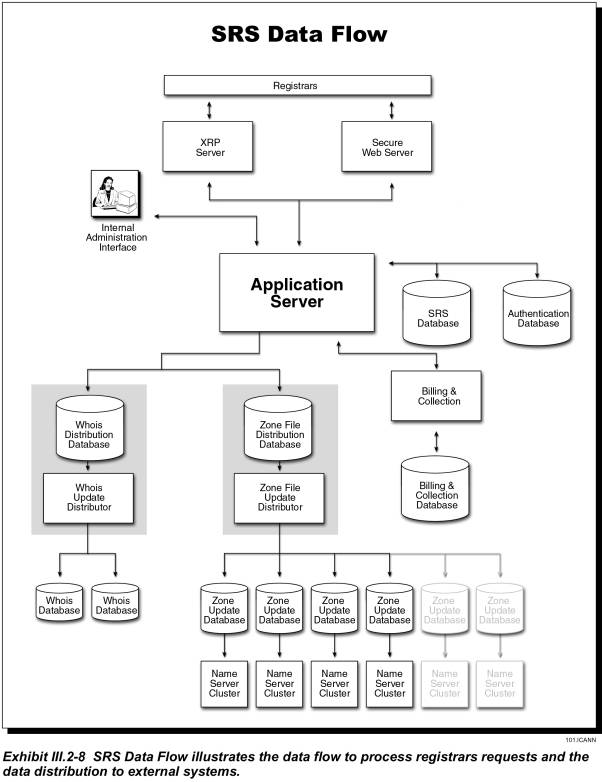

As shown in Exhibit III.2-8 in Proposal Paragraph III.2.1, JVTeam’s registry will include three major databases:

· SRS Database—This database’s primary function is to provide highly reliable persistent storage for all of the registry information required to provide domain-registration services. The SRS database is highly secured, with access limited to authenticated registrars, trusted application-server processes, and the registry’s database administrators.

· Billing and Collection Database—This database will provide the information required for JVTeam to render billing and collection (B&C) services to the registrars. Access to its data is limited to the trusted B&C system processes and to registry database administrators. Registrars can view billing data through a secure Web portal with a B&C Applications Programmer Interface (API).

· Whois Database—The Whois database is a searchable database that any Internet user can access to view details of the domain name stored in the SRS. The Whois database maintains data about registrars, domain names, nameservers, IP addresses, and the associated TLD contacts. The Whois database is updated from the SRS database through an intermediate database and replication process.

In addition to these databases, the registry will maintain various internal databases to support various operations, e.g., authorizing login userids and passwords, authenticating digital certificates, and maintaining access-control lists.

In implementing the SRS database systems, our system designers will carefully analyze the differing requirements for the three major databases and select the optimum solution for each. Design techniques and considerations will include:

· Multiple logical data models that we will optimize for the different types of information that each system needs to serve registrars efficiently

· Content that will include data related not only to domain names and domain name registration, but also to registrars, registrants, nameservers, Whois servers, and the Billing and Collection system

· Differing volumes of database transactions and database sizes

· Differing business needs

· Differing performance and availability requirements

· Replication of databases to achieve high availability and facilitate backup/recovery.

Database Size, Throughput, and Scalability

The following table lists design parameters for the initial design of the three major databases. The parameters are based on projected volumes in the first two (2) years. The term “scalability” in the table refers to each database’s ultimate capacity, expressed as a multiple of the initial design capacity in terms of size and transaction-processing power.

DATABASE DESIGN PARAMETERS

| SRS Database | Design Parameter |
| --- | --- |
| Domain registrations | 20 million |
| Registrars | 100 |
| Size of registration object | 10 K |
| Total data | 190 G |
| Database Management System (DBMS) and logs | 3 G |
| Database indexing | 400 G |
| Total size | 590 G |
| Database throughput | TpsC = 1050 |
| Database scalability | 100 times in size; 8 times in processing power |

| Billing Database | Design Parameter |
| --- | --- |
| Billable events per month | 1 million |
| Transaction size | 1 K |
| Transactions per month | 1 G |
| Historical data for 3 months | 3 G |
| Registrars | 100 |
| Registrar billing profile | 30 K |
| Total billing-data | 3 M |
| Total data | 3 G |
| DBMS & logs | 3 G |
| Database indexing | 6 G |
| Total size | 12 G |
| Database throughput | TpsC = 353 |
| Database scalability | From 2-way to 8-way |

| Whois Database | Design Parameter |
| --- | --- |
| Domain registrations | 20 million |
| Registrars | 100 |
| Size of registration object | 4 K |
| Total data | 80 G |
| DBMS & logs | 2 G |
| Database indexing | 200 G |
| Total size | 280 G |
| Database throughput | TpsC = 353 |
| Database scalability | 2-way to 8-way |
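The raw-data rows in the table follow from multiplying object counts by object sizes. The sketch below reproduces that arithmetic under the assumption of decimal units (1 K = 1,000 bytes, 1 G = 10^9 bytes); the function and variable names are illustrative and not part of the design. Under this assumption the SRS product comes to 200 G rather than the 190 G listed, which is consistent with the SRS row having been computed in binary units (about 190.7 G) or rounded.

```python
def raw_data_gb(object_count: int, object_size_kb: float) -> float:
    """Raw data volume in G, assuming 1 K = 1,000 bytes and 1 G = 10**9 bytes."""
    return object_count * object_size_kb * 1_000 / 1e9


srs_gb = raw_data_gb(20_000_000, 10)          # SRS: 20 million objects at 10 K each
whois_gb = raw_data_gb(20_000_000, 4)         # Whois: 20 million objects at 4 K each
billing_month_gb = raw_data_gb(1_000_000, 1)  # 1 million billable events at 1 K each
billing_history_gb = 3 * billing_month_gb     # three months of historical data
profiles_mb = 100 * 30 * 1_000 / 1e6          # 100 registrar billing profiles at 30 K

print(srs_gb, whois_gb, billing_month_gb, billing_history_gb, profiles_mb)
```

The Whois, billing-history, and billing-profile results match the table's 80 G, 3 G, and 3 M entries directly.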

Database Procedures and Functions

The database system is critical to the processing of SRS business transactions. The SRS and B&C databases are accessed during many registrar/registry transactions. If a transaction is completed successfully, the system updates not only these two databases but also the Whois distribution and Zone distribution databases. (The latter two are periodically replicated to the Whois and Zone update databases, respectively.) This subsection describes the main procedures, objects, and data flows for the following registry functions:

· Object Creation—Domain name and nameserver registration

· Object Editing—Modifying domain name or nameserver data and creating or modifying associations

· Object Deletion—Domain name cancellations

· Object Existence and Information Query—Obtain information on domain name, nameserver, or contact name

· Object Transfer—Transfer a domain name to a different registrar

· Automatic Domain Renewal—Extend a domain name registration for a year

· Grace Period Implementation—Allow various time periods before actions become final

· Registrar Administration—Add, delete, or change a registrar account or billing profile

· Billing Notifications—Account-related information sent to registrars and designated registry staff

· Reporting—Account and billing information that can be viewed on line or emailed

· Mass Updates—Special procedures; e.g., changing a registrar’s name on each of its domain name files if it is acquired by another registrar

· Trademark Monitor—A trademark registration, search, and notification capability.

The following paragraphs provide additional information about each of these functions.

Object Creation (Register a Domain Name or Name Server)

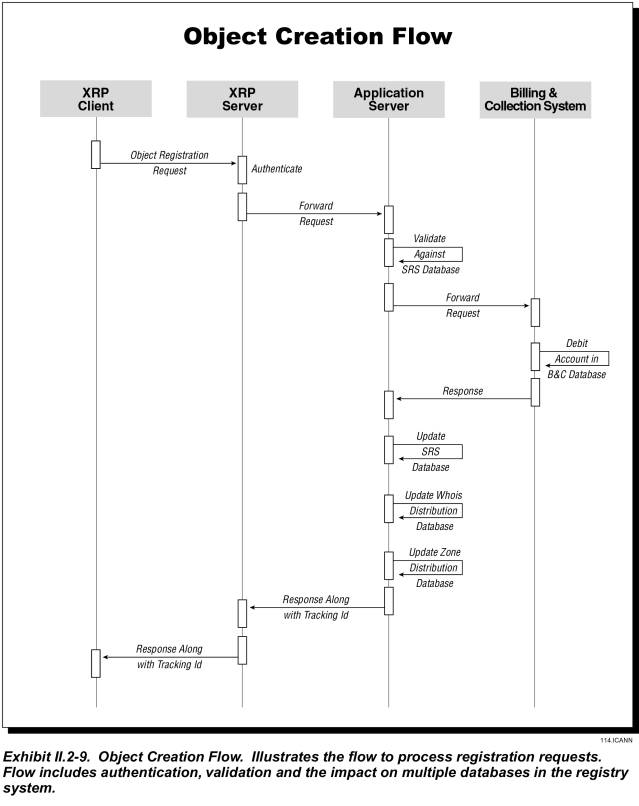

Exhibit III.2-9 shows how a registrar registers either a new domain name or a nameserver.

· The registrar’s request arrives at the application server via the XRP server.

· The application server queries the SRS database to determine whether the domain name or nameserver is available. If it is not, the application server notifies the registrar.

· If the name is available, the application server queries the Billing database to determine whether sufficient funds are available in the registrar’s account. If not, the application server notifies the registrar.

· If funds are adequate, the registrar’s account is debited for the appropriate amount and the master database is updated to reflect the registration.

The process for registering a nameserver eliminates the billing steps. The application server queries the SRS database to determine whether the nameserver name is available. If it is, the application server updates the server with the new information; if it is not, the application server returns the error code to the registrar.
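The registration steps above can be sketched as follows. This is a minimal illustration, not the proposed implementation: the in-memory dictionaries stand in for the SRS and Billing databases, and the fee value, error codes, and function names are all hypothetical.

```python
# Hypothetical in-memory stand-ins for the SRS and Billing databases.
srs_domains = {"taken.example": "registrar-a"}   # domain name -> sponsoring registrar
srs_nameservers = set()                          # registered nameserver names
billing_balances = {"registrar-a": 10.0, "registrar-b": 2.0}

REGISTRATION_FEE = 6.0  # illustrative value only; the source states no fee


def register_domain(registrar: str, name: str) -> str:
    """Availability check, funds check, debit, then SRS update."""
    if name in srs_domains:
        return "ERR_NAME_UNAVAILABLE"
    if billing_balances.get(registrar, 0.0) < REGISTRATION_FEE:
        return "ERR_INSUFFICIENT_FUNDS"
    billing_balances[registrar] -= REGISTRATION_FEE
    srs_domains[name] = registrar
    return "OK"


def register_nameserver(name: str) -> str:
    """Nameserver registration skips the billing steps."""
    if name in srs_nameservers:
        return "ERR_NAME_UNAVAILABLE"
    srs_nameservers.add(name)
    return "OK"
```

A production system would perform the debit and the SRS update in a single database transaction so that a failure between the two steps cannot leave the account charged without a registration.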

Object Editing

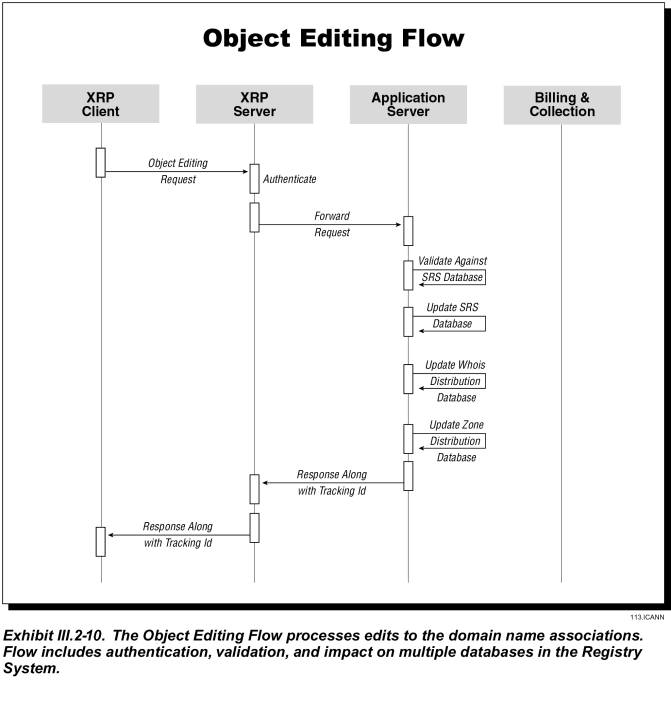

Exhibit III.2-10 shows how a registrar modifies and creates associations for an existing domain name.

· The registrar’s request arrives at the application server via the XRP server

· The application server queries the SRS database to validate the domain name and nameserver status

· If valid, the application server updates the SRS database; if not, it returns the error code to the registrar.

Object Deletion

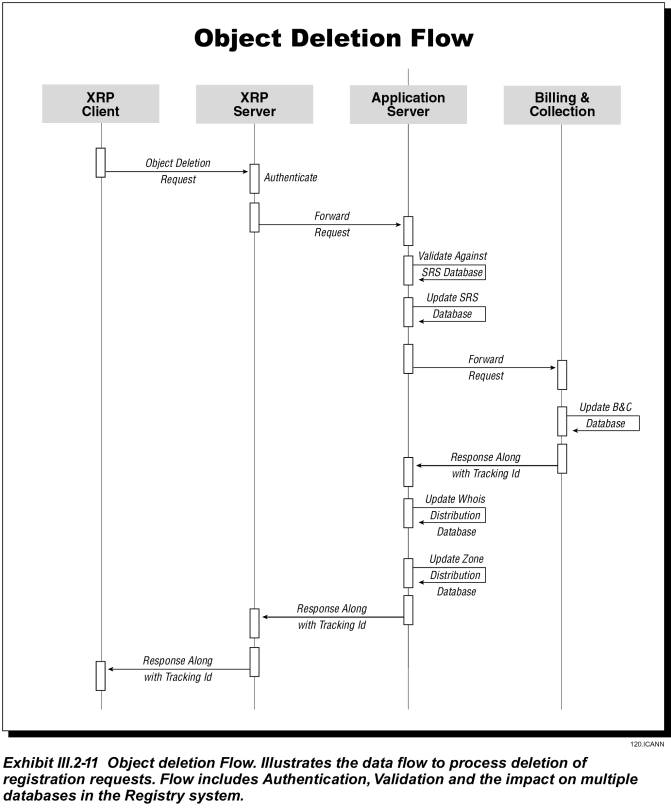

Exhibit III.2-11 shows how a registrar cancels a domain name or a nameserver registration.

· The registrar’s request arrives at the application server via the XRP server

· The application server queries the SRS database to validate the status of the domain name and its associations or, for a nameserver, to determine that no domain names are associated with the nameserver to be cancelled

· If valid, the application server updates the SRS and Billing databases; if not, it returns the error code to the registrar.
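A minimal sketch of the deletion checks follows, assuming hypothetical in-memory structures and error codes. The text does not say how the Billing database is updated on deletion, so the sketch only records a billing event.

```python
# Hypothetical stand-ins for the SRS database and a Billing event log.
srs_domains = {
    "example.tld": {"registrar": "registrar-a", "nameservers": {"ns1.host.tld"}},
}
srs_nameservers = {"ns1.host.tld", "ns2.host.tld"}
billing_events = []  # assumption: deletion is merely logged for Billing


def delete_domain(registrar: str, name: str) -> str:
    """Cancel a domain name if it exists and belongs to the requesting registrar."""
    record = srs_domains.get(name)
    if record is None or record["registrar"] != registrar:
        return "ERR_INVALID_DOMAIN"
    del srs_domains[name]
    billing_events.append(("domain_deleted", registrar, name))
    return "OK"


def delete_nameserver(name: str) -> str:
    """Cancel a nameserver only if no domain name is still associated with it."""
    if name not in srs_nameservers:
        return "ERR_UNKNOWN_NAMESERVER"
    if any(name in d["nameservers"] for d in srs_domains.values()):
        return "ERR_NAMESERVER_IN_USE"
    srs_nameservers.remove(name)
    return "OK"
```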

Object Existence & Information Query

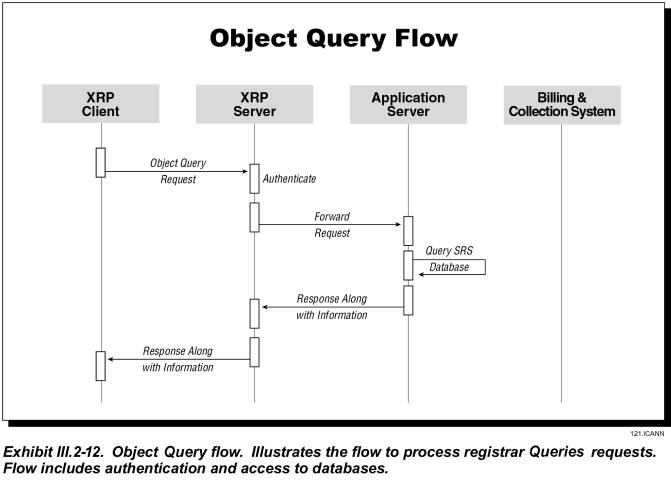

Exhibit III.2-12 shows how the system handles a query about a domain name, nameserver, or contact identifier.

· For an “Object Existence” query, the application server searches the SRS database and returns a positive or negative response

· For an “Object Information” query, the application server returns all information available for the requested object.
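The two query types differ only in what they return, as the following sketch illustrates. The object keys, field names, and sample records are hypothetical.

```python
from typing import Optional

# Hypothetical SRS records keyed by (object type, identifier).
srs_objects = {
    ("domain", "example.tld"): {"registrar": "registrar-a",
                                "nameservers": ["ns1.example.tld"]},
    ("nameserver", "ns1.example.tld"): {"ip": "192.0.2.1"},
}


def object_exists(kind: str, identifier: str) -> bool:
    """Object Existence query: positive or negative response only."""
    return (kind, identifier) in srs_objects


def object_info(kind: str, identifier: str) -> Optional[dict]:
    """Object Information query: all stored data for the object, or None."""
    record = srs_objects.get((kind, identifier))
    return dict(record) if record is not None else None
```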

Registrar Transfer (of Domain Name)

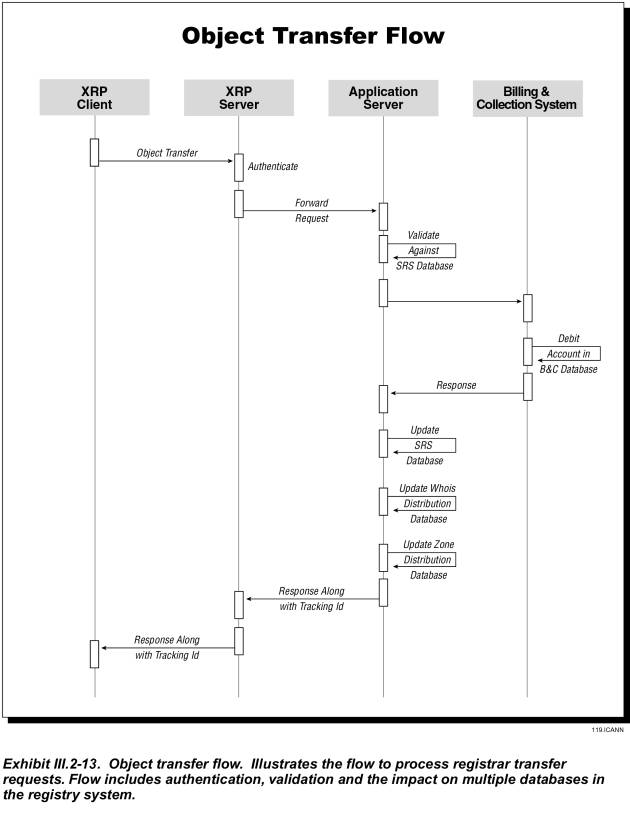

Exhibit III.2-13 shows how a registrar can transfer a domain name registration from another registrar to itself.

· The registrar’s request arrives at the application server via the XRP server.

· The application server queries the SRS database to validate the domain name status.

· If valid, the application server notifies the losing registrar of the request and initiates the grace period to wait for the losing registrar’s response.

· If the losing registrar does not respond within the grace period, or returns a positive acknowledgement, the application server queries the Billing database to determine whether the new registrar’s account has sufficient funds for the transaction. If not, the application server sends the new registrar an error code.

· If funds are adequate, the registrar’s account is debited for the appropriate amount and the master database is updated to reflect the new registrar.
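The transfer decision can be sketched as a single function evaluated whenever the losing registrar responds or the grace period is checked. The grace-period length, fee, and error codes are hypothetical, and the handling of an explicit refusal is an assumption, since the steps above describe only the approval and no-response cases.

```python
from datetime import datetime, timedelta
from typing import Optional

TRANSFER_GRACE = timedelta(days=5)  # hypothetical; the period is configurable
TRANSFER_FEE = 6.0                  # illustrative value only

srs_domains = {"example.tld": "registrar-a"}   # domain name -> current registrar
billing_balances = {"registrar-b": 10.0, "registrar-c": 1.0}


def resolve_transfer(name: str, gaining: str, requested_at: datetime,
                     now: datetime, losing_ack: Optional[bool]) -> str:
    """losing_ack: True = approved, False = refused, None = no response yet."""
    if name not in srs_domains or srs_domains[name] == gaining:
        return "ERR_INVALID_DOMAIN"
    if losing_ack is False:
        return "ERR_TRANSFER_REFUSED"  # assumption: a refusal ends the request
    if losing_ack is None and now - requested_at < TRANSFER_GRACE:
        return "PENDING"               # still waiting inside the grace period
    if billing_balances.get(gaining, 0.0) < TRANSFER_FEE:
        return "ERR_INSUFFICIENT_FUNDS"
    billing_balances[gaining] -= TRANSFER_FEE
    srs_domains[name] = gaining
    return "OK"
```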

Domain Renewal (Automatic)

If a domain name registration expires, the SRS automatically renews the domain name for one year. The application server performs this function as follows:

· It queries the SRS database to validate the status of the domain name. If the domain name is no longer valid, the process terminates.

· If valid, the application server queries the Billing database to verify that the registrar’s account contains sufficient funds. If not, it returns an error message to the registrar.

· If funds are sufficient, the registrar’s account is charged for a one-year renewal and the status is updated in the SRS database.

Domain Renewal (Registrar-requested)

· A registrar’s request to renew a domain name arrives at the application server via the XRP server.

· The application server queries the SRS database to validate the domain name status. If the status is not valid, it returns an error message to the registrar.

· If valid, the application server queries the Billing database to verify that the registrar’s account contains sufficient funds. If not, it returns an error message to the registrar.

· If funds are sufficient, the registrar’s account is charged for the term of the renewal and the status is updated in the SRS database.
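Both renewal paths share the same validate, check-funds, and charge sequence; they differ in the term (one year for automatic renewal, a requested term otherwise) and in whether a failure is reported to the registrar. The sketch below covers the shared sequence only. The per-year fee, record layout, and error codes are hypothetical.

```python
from datetime import date

RENEWAL_FEE_PER_YEAR = 6.0  # illustrative value only

srs_domains = {
    "example.tld": {"registrar": "registrar-a",
                    "expires": date(2001, 6, 30), "active": True},
}
billing_balances = {"registrar-a": 20.0}


def add_years(d: date, years: int) -> date:
    """Shift a date by whole years, mapping Feb 29 to Feb 28 when needed."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:  # Feb 29 in a non-leap target year
        return d.replace(year=d.year + years, day=28)


def renew(name: str, years: int = 1) -> str:
    """Validate the domain, check funds, charge the registrar, extend the term."""
    record = srs_domains.get(name)
    if record is None or not record["active"]:
        return "ERR_INVALID_DOMAIN"
    fee = RENEWAL_FEE_PER_YEAR * years
    registrar = record["registrar"]
    if billing_balances.get(registrar, 0.0) < fee:
        return "ERR_INSUFFICIENT_FUNDS"
    billing_balances[registrar] -= fee
    record["expires"] = add_years(record["expires"], years)
    return "OK"
```

For automatic renewal the caller would invoke `renew(name)` with the default one-year term and, per the steps above, simply stop if the domain name is no longer valid.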

Grace Period Implementation

JVTeam’s SRS will support grace periods for several registry functions. The SRS database will manage the configurable data for implementing grace periods such as the following:

· Automatic one-year renewal or extension of a domain name registration after it expires

· Grace period during which a registrar can cancel an automatic renewal

· Grace period during which a domain name remains unavailable after its registration has expired or been cancelled

· Grace period during which a registrar can cancel a domain name registration without any fee

· Grace period for waiting for losing registrar’s response before transferring a domain name registration to a new registrar.
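Because the grace periods are configurable data, one simple representation is a lookup table of durations. The sketch below assumes hypothetical period names and lengths; the text specifies neither.

```python
from datetime import datetime, timedelta

# Hypothetical names and values: the source says only that these are configurable.
GRACE_PERIODS = {
    "auto_renew_cancel": timedelta(days=45),   # cancel an automatic renewal
    "post_expiry_hold": timedelta(days=30),    # name stays unavailable after expiry
    "free_cancel": timedelta(days=5),          # cancel a registration without fee
    "transfer_response": timedelta(days=5),    # wait for the losing registrar
}


def within_grace(kind: str, started_at: datetime, now: datetime) -> bool:
    """Return True while the named grace period is still running."""
    return now - started_at <= GRACE_PERIODS[kind]
```

For example, `within_grace("free_cancel", registered_at, now)` would tell the application server whether a cancellation still qualifies for the no-fee period.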

Registrar Administration

Registrar administration refers to adding or deleting registrars, and to providing each registrar with secure access to the system and to that registrar’s data. The SRS database manages most registrar data; the Billing database contains the B&C profile and contacts. Any “Add/Delete Registrar” or “Change Registrar Profile” request will generate the appropriate changes in the SRS and Billing databases.

Mass Updates

A typical mass update is a global change of a registrar’s name, which may occur when one registrar purchases another. JVTeam will design procedures for mass database changes initiated by registrars or other authorized entities.

Trademark Monitor

End users will be able to obtain trademark registration through registrars and, after agreeing to a “Terms of Use” policy, to access a trademark-search capability with the following characteristics:

· Trademark holders will be able to submit to their registrars a string of characters that they wish to protect as a trademark.

· Registrars will submit this string to the registry and request that it be monitored for trademark infringement.

· The registry will insert the string into a “Trademark monitoring” file in the SRS database.

· When the registry receives a “Request for domain-name registration” that includes the monitored string, it returns a notice that the string is a trademark, provides the contact information for the monitoring trademark holder, and informs the applying registrar of the grace period during which it may revoke the domain-name registration without penalty. The registry then proceeds with registering the domain name.

· Registrars are responsible for alerting registrants if a domain name they have registered contains a sub-string being monitored by a trademark holder.
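The monitoring behavior above amounts to a sub-string check that produces notices without blocking registration. The following sketch assumes case-insensitive matching, a hypothetical grace-period length, and illustrative names and contact data.

```python
REVOCATION_GRACE_DAYS = 5  # hypothetical; the source gives no length

# Stand-in for the "Trademark monitoring" file: monitored string -> holder contact.
monitored = {"acme": "Acme Corp, legal@acme.example"}
srs_domains = {}  # domain name -> sponsoring registrar


def trademark_notices(domain_name: str) -> list:
    """Return one notice per monitored string contained in the domain name."""
    label = domain_name.lower()  # assumption: matching ignores case
    return [
        {"string": s, "holder_contact": c,
         "revocation_grace_days": REVOCATION_GRACE_DAYS}
        for s, c in monitored.items() if s in label
    ]


def register_with_monitoring(registrar: str, domain_name: str) -> dict:
    """Registration proceeds regardless; notices go back to the registrar."""
    notices = trademark_notices(domain_name)
    srs_domains[domain_name.lower()] = registrar
    return {"status": "OK", "notices": notices}
```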

Billing Notifications

JVTeam’s Billing system will monitor the registrars’ accounts for insufficient funds. If it determines that the balance is below the required minimum, it notifies the registrar and the registry’s customer-service personnel.
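A minimal sketch of this low-balance check follows. The minimum balance, recipient label, and message wording are hypothetical.

```python
MINIMUM_BALANCE = 100.0  # hypothetical required minimum


def low_balance_notifications(balances: dict, minimum: float = MINIMUM_BALANCE) -> list:
    """Return (recipient, message) pairs for every account below the minimum."""
    notes = []
    for registrar, balance in sorted(balances.items()):
        if balance < minimum:
            msg = (f"{registrar}: balance {balance:.2f} is below "
                   f"the minimum {minimum:.2f}")
            notes.append((registrar, msg))                    # notify the registrar
            notes.append(("registry-customer-service", msg))  # and registry staff
    return notes
```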

Reporting Capabilities

To support a detailed, usage-based accounting and billing structure, the system generates a substantial amount of highly detailed resource-accounting data. The following sources generate this data:

· Billing database

· Billing transaction-processing subsystem

· Batch-processing events (e.g., audits and reports)

· Internal-support records.

Monthly Account Statements—We will send a detailed monthly transaction statement to each registrar via email. The statement will include the following:

· Account Summary, including payments received, balance forward, and credits and adjustments

· Detailed list of all fee-incurring charges; e.g., new registrations, registration renewals, registrar transfers.

Online Billing Reports—The system will generate a variety of reports for internal and external users.

· Using the Internet, registrars will be able to access their account statements and detailed transaction reports, but not those of other registrars.

· Registrars will be able to request custom reports. The system will generate these reports in a batch process, and will store them in the FTP directory for the requesting registrar to download.

Audit Reports—JVTeam will create audit reports for internal and external purposes. Audit reports will include:

· Policy implementation